We will now begin to consider the Universe as a

whole. Individual objects such as galaxies and stars will no longer

be the subject of discussion, but instead we will turn our

attention to the space and time in which these objects are

embedded. These considerations will then lead to a world model, the

model of our cosmos. We need such a model also to interpret the

observations of distant objects, i.e., those with a redshift for

which the local Hubble law (1.2) ceases to be valid.

This chapter will deal with aspects of

homogeneous cosmology. As we will see, the Universe can, to first

approximation, be considered as being homogeneous. At first sight

this fact obviously seems to contradict observations because the

world around us is highly inhomogeneous and structured. Thus the

assumption of homogeneity is certainly not valid on small scales.

But observations are compatible with the assumption that the

Universe is homogeneous when averaged over large spatial scales.

Aspects of inhomogeneous cosmology, and thus the formation and

evolution of structures in the Universe, will be considered later

in Chap. 7.

4.1 Introduction and fundamental observations

Cosmology is a very special science indeed. To be

able to appreciate its peculiar role we should recall the typical

way of establishing knowledge in natural sciences. It normally

starts with the observation of some regular patterns, for instance

the observation that the height h a stone falls through is related

quadratically to the time t

it takes to fall, $h = (g/2)\,t^2$. This relation is then also found

for other objects and observed at different places on Earth.

Therefore, this relation is formulated as the ‘law’ of free fall.

The constant of proportionality g∕2 in this law is always the same.

This law of physics is tested by its prediction of how an object

falls, and wherever this prediction is tested it is

confirmed—disregarding the resistance of air in this simple

example, of course.

Relations become physical laws if the predictions

they make are confirmed again and again; the validity of such a law

is considered more secure the more diverse the tests have been. The

law of free fall was tested only on the surface of the Earth and it

is only valid there with this constant of proportionality.1 In contrast to this,

Newton’s law of gravity contains the law of free fall as a special

case, but it also describes the free fall on the surface of the

Moon, and the motion of planets around the Sun. If only a single

stone was available, we would not know whether the law of free fall

is a property of this particular stone or whether it is valid more

generally.

In some ways, cosmology corresponds to the latter

example: we have only one single Universe available for

observation. Relations that are found in our cosmos cannot be

verified in other universes. Thus it is not possible to consider

any property of our Universe as ‘typical’—we have no statistics on

which we could base a statement like this. Despite this special

situation, enormous progress has been made in understanding our

Universe, as we will describe here and in subsequent

chapters.

Cosmological observations are difficult in

general, simply because the majority of the Universe (and with it

most of the sources it contains) is very far away from us. Distant

sources are very dim. This explains why our knowledge of the

Universe runs in parallel with the development of large telescopes

and sensitive detectors. Much of today’s knowledge of the distant

Universe became available only with the new generation of optical

telescopes of the 8-m class, as well as new and powerful telescopes

in other wavelength regimes.

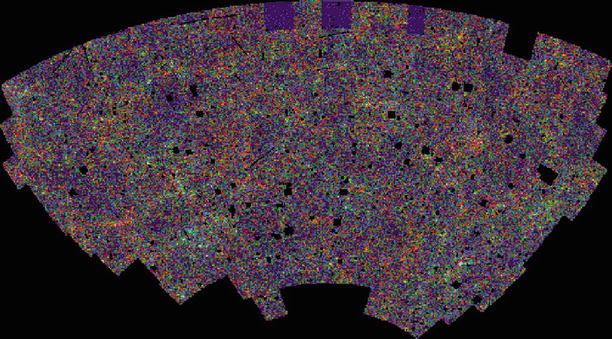

Fig. 4.1

The APM-survey: galaxy distribution in

a $\sim 100 \times 50$ deg$^2$ field around the South Galactic

Pole. The intensities of the pixels are scaled with the number of

galaxies per pixel, i.e., the projected galaxy number density on

the sphere. The ‘holes’ are regions around bright stars, globular

clusters etc., that were not surveyed. Credit: S. Maddox,

W. Sutherland, G. Efstathiou & J. Loveday, with follow-up by

G. Dalton, and Astrophysics Dept., Oxford University

The most important aspect of cosmological

observations is the finite speed of light. We observe a source at

distance D in an

evolutionary state at which it was $D/c$ younger than today. Thus we can

observe the current state of the Universe only very locally.

Another consequence of this effect, however, is of even greater

importance: due to the finite speed of light, it is possible to

look back into the past. At a distance of ten billion light years

we observe galaxies in an evolutionary state when the Universe had

only a third of its current age. Although we cannot observe the

past of our own Milky Way, we can study that of other galaxies. If

we are able to identify among them the ones that will form objects

similar to our Galaxy in the course of cosmic evolution, we will be

able to learn a great deal about the typical evolutionary history

of such spirals.

The finite speed of light in a Euclidean space,

in which we are located at the origin $\boldsymbol{r} = 0$ today ($t = t_0$), implies that we can only observe points in spacetime for which $|\boldsymbol{r}| = c\,(t_0 - t)$; an arbitrary point $(\boldsymbol{r}, t)$ in spacetime is not observable. The set of points in spacetime which satisfy the relation $|\boldsymbol{r}| = c\,(t_0 - t)$ is called our backward light cone.

The fact that our astronomical observations are

restricted to sources which are located on our backward light cone

implies that our possibilities to observe the Universe are

fundamentally limited. If a highly unusual event occurred somewhere in

spacetime, we would not be able to observe it unless it

happened to lie on our backward light cone. Only if the Universe has

an essentially ‘simple’ structure will we be able to understand it,

by combining astronomical observations with theoretical modeling.

Luckily, our Universe seems to be basically simple in this

sense.
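
As a small numerical illustration of the light-cone condition described above, the following Python sketch evaluates the lookback time $D/c$ and tests whether an event lies on our backward light cone in a static Euclidean space. The function names, the choice of units (light years and years, so that $c = 1$), and the tolerance parameters are assumptions made for this example only.

```python
import math

C_LY_PER_YR = 1.0  # speed of light in light years per year (units chosen for convenience)


def lookback_time(distance_ly):
    """Time in years that light needed to reach us from distance D: D / c."""
    return distance_ly / C_LY_PER_YR


def on_backward_light_cone(r, t, t0=0.0, rel_tol=1e-9):
    """True if the event (r, t) satisfies |r| = c (t0 - t).

    The observer sits at the origin r = 0 at time t0; r is a 3-tuple in
    light years, t and t0 are in years.
    """
    distance = math.sqrt(sum(component ** 2 for component in r))
    return t <= t0 and math.isclose(
        distance, C_LY_PER_YR * (t0 - t), rel_tol=rel_tol, abs_tol=1e-12
    )


# An event 5000 light years away is observable today only if it happened 5000 years ago.
event_position = (3000.0, 4000.0, 0.0)
visible = on_backward_light_cone(event_position, -5000.0)
not_visible = on_backward_light_cone(event_position, -4000.0)
```

In this sketch `visible` is true and `not_visible` is false: the same location is seen at exactly one epoch, which is why the current state of the Universe can be observed only very locally.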

4.1.1 Fundamental cosmological observations

We will begin with a short list of key

observations that have proven to be of particular importance for

cosmology. Using these observational facts we will then be able to

draw a number of immediate conclusions; other observations will be

explained later in the context of a cosmological model.

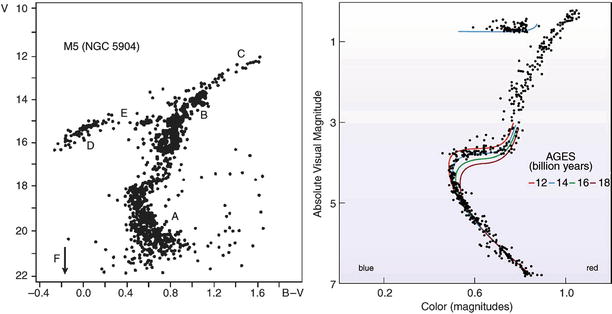

Fig. 4.2

Left

panel: Color-magnitude diagram of the globular cluster M 5.

The different sections in this diagram are labeled. A: main

sequence; B: red giant branch; C: point of helium flash; D:

horizontal branch; E: Schwarzschild-gap in the horizontal branch;

F: white dwarfs, below the arrow. At the point where the main

sequence turns over to the red giant branch (called the ‘turn-off

point’), stars have a mass corresponding to a main-sequence

lifetime which is equal to the age of the globular cluster (see

Appendix B.3). Therefore, the age of the cluster can be determined

from the position of the turn-off point by comparing it with models

of stellar evolution. Right

panel: Isochrones, i.e., curves connecting the stellar

evolutionary position in the color-magnitude diagram of stars of

equal age, are plotted for different ages and compared to the stars

of the globular cluster 47 Tucanae. Such analyses reveal that the

oldest globular clusters in our Milky Way are about 12 billion

years old, where different authors obtain slightly differing

results—details of stellar evolution may play a role here. The age

thus obtained also depends on the distance of the cluster. A

revision of these distances by the Hipparcos satellite led to a

decrease of the estimated ages by about two billion years. Credit:

M5: ©Leos Ondra; 47 Tuc: J.E. Hesser, W.E. Harris, D.A. Vandenberg,

J.W.B. Allwright, P. Scott & P.B. Stetson 1987, A CCD color-magnitude study of 47

Tucanae, PASP 99, 739

1.

The sky is dark at night (Olbers’ paradox).

2.

Averaged over large angular scales, faint

galaxies (e.g., those with R > 20) are uniformly distributed on

the sky (see Fig. 4.1).

3.

With the exception of a very few very nearby

galaxies (e.g., Andromeda = M31), a redshift is observed in the

spectra of galaxies: most galaxies are moving away from us, and

their recession velocity increases linearly with distance (Hubble law;

see Fig. 1.13).

4.

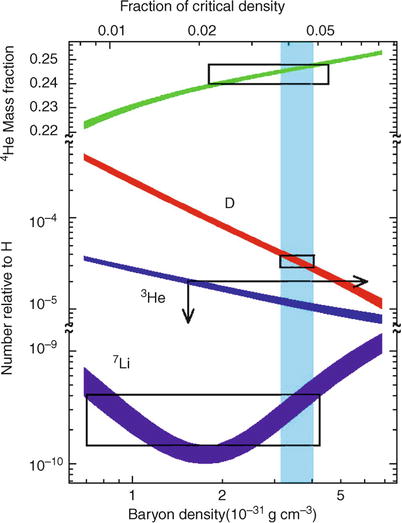

In nearly all cosmic objects (e.g., gas nebulae,

main sequence stars), the mass fraction of helium is 25–30 %.

5.

The oldest globular clusters in our Galaxy have an age of about 12 Gyr (see Fig. 4.2).

6.

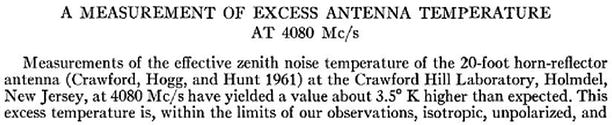

A microwave radiation (cosmic microwave

background radiation, CMB) is observed, reaching us from all

directions. This radiation is isotropic except for very small, but

immensely important, fluctuations with relative

amplitude $\sim 10^{-5}$ (see Fig. 1.21).

7.

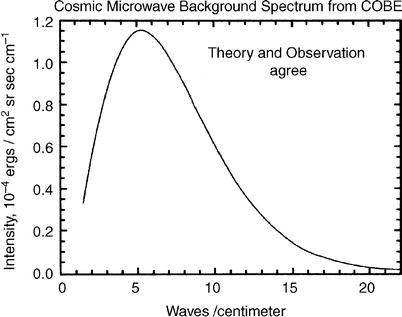

The spectrum of the CMB corresponds, within the

very small error bars that were obtained by the measurements with

COBE, to that of a perfect blackbody, i.e., Planck radiation with a

temperature of $T_0 = 2.728 \pm 0.004$ K (see Fig. 4.3).

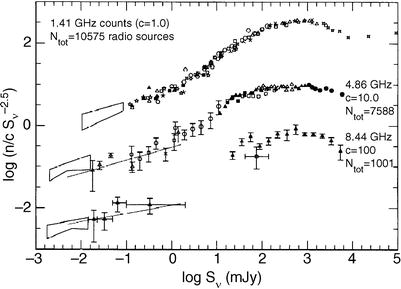

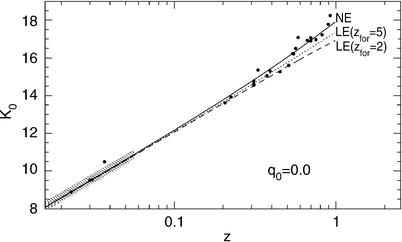

8.

The number counts of radio sources at high

Galactic latitude do not

follow the simple law $N(>S) \propto S^{-3/2}$ (see Fig. 4.4).
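
To make the blackbody statement in observation 7 concrete, the following Python sketch evaluates the Planck function $B_\nu(T) = (2h\nu^3/c^2)\,[\exp(h\nu/k_{\rm B}T) - 1]^{-1}$ at the COBE temperature and locates its maximum by a brute-force scan. The function names, the frequency grid, and the scan method are assumptions of this example; only the temperature is taken from the text.

```python
import math

H_PLANCK = 6.62607015e-34   # Planck constant in J s
K_BOLTZMANN = 1.380649e-23  # Boltzmann constant in J / K
C_LIGHT = 2.99792458e8      # speed of light in m / s


def planck_b_nu(nu_hz, temperature_k):
    """Planck specific intensity B_nu in W m^-2 Hz^-1 sr^-1."""
    x = H_PLANCK * nu_hz / (K_BOLTZMANN * temperature_k)
    return 2.0 * H_PLANCK * nu_hz ** 3 / C_LIGHT ** 2 / math.expm1(x)


def peak_frequency_hz(temperature_k, n_grid=200000, nu_max_hz=2.0e12):
    """Locate the maximum of B_nu by scanning a uniform frequency grid."""
    step = nu_max_hz / n_grid
    grid = (step * i for i in range(1, n_grid + 1))
    return max(grid, key=lambda nu: planck_b_nu(nu, temperature_k))


T_CMB = 2.728  # K, the COBE value quoted above
nu_peak = peak_frequency_hz(T_CMB)
waves_per_cm = nu_peak / (C_LIGHT * 100.0)  # convert Hz to waves per centimeter
```

With these inputs the maximum of $B_\nu$ should fall near 160 GHz, i.e., a little above 5 waves per centimeter, which is the unit used on the frequency axis of Fig. 4.3.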

Fig. 4.3

CMB spectrum, plotted as intensity vs.

frequency, measured in waves per centimeter. The solid line shows the expected spectrum

of a blackbody of temperature $T = 2.728$ K. The error bars of the

data, observed by the FIRAS instrument on-board COBE, are so small

that the data points with error bars cannot be distinguished from

the theoretical curve. Credit: COBE, NASA. We acknowledge the use

of the Legacy Archive for Microwave Data Analysis (LAMBDA). Support

for LAMBDA is provided by the NASA Office for Space Science

Fig. 4.4

Number counts of radio sources as a

function of their flux, normalized by the Euclidean expectation

$N(S) \propto S^{-5/2}$, corresponding to the integrated counts $N(>S) \propto S^{-3/2}$. Counts are displayed

for three different frequencies; they clearly deviate from the

Euclidean expectation. Source: R.A. Windhorst et al. 1993,

Microjansky source counts and

spectral indices at 8.44 GHz, ApJ 405, 498, p. 508, Fig. 3.

©AAS. Reproduced with permission

4.1.2 Simple conclusions

We will next draw a number of simple conclusions

from the observational facts listed above. These will then serve as

a motivation and guideline for developing the cosmological model.

We will start with the assumption of an infinite, on-average

homogeneous, Euclidean, static universe, and show that this

assumption is in direct contradiction to observations (1) and

(8).

Olbers’ paradox (1): We can show that the night sky would be bright in such a universe; uncomfortably bright, in fact. Let $n_*$ be the mean number density of stars, constant in space and time according to the assumptions, and let $R_*$ be their mean radius. A spherical shell of radius $r$ and thickness $\mathrm{d}r$ around us contains $n_*\,\mathrm{d}V = 4\pi r^2\,\mathrm{d}r\,n_*$ stars. Each of these stars subtends a solid angle of $\pi R_*^2/r^2$ on our sky, so the stars in the shell cover a total solid angle of

$$\mathrm{d}\omega = 4\pi r^2\,\mathrm{d}r\,n_*\,\frac{\pi R_*^2}{r^2} = 4\pi^2\,n_*\,R_*^2\,\mathrm{d}r. \tag{4.1}$$

We see that this solid angle is independent of the radius $r$ of the spherical shell because the solid angle covered by a single star, $\propto r^{-2}$, just compensates the volume of the shell, $\propto r^2$. To compute the total solid angle of all stars in a static Euclidean universe, (4.1) has to be integrated over all distances $r$, but the integral

$$\omega = \int_0^\infty \mathrm{d}r\,\frac{\mathrm{d}\omega}{\mathrm{d}r} = 4\pi^2\,n_*\,R_*^2 \int_0^\infty \mathrm{d}r$$

diverges. Formally, this means that the stars cover an infinite solid angle, which of course makes no sense physically. The reason for this divergence is that we disregarded the effect of overlapping stellar disks on the sphere. However, these considerations demonstrate that the sky would be completely filled with stellar disks, i.e., from any direction, along any line-of-sight, light from a stellar surface would reach us. Since the specific intensity $I_\nu$ is independent of distance (the surface brightness of the Sun as observed from Earth is the same as seen by an observer who is much closer to the Solar surface), the sky would have a temperature of $\sim 10^4$ K; fortunately, this is not the case!
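
The shell argument of Olbers’ paradox can be checked numerically. The Python sketch below evaluates the solid angle covered by one shell, following (4.1), and the distance at which the summed solid angle reaches the full sky, $4\pi$. The stellar density used here is a purely hypothetical round number, and the function names are assumptions of this example; the solar radius stands in for the mean stellar radius $R_*$.

```python
import math


def shell_solid_angle(r, dr, n_star, r_star):
    """Solid angle covered by the stars in a thin shell, as in (4.1), ignoring overlaps."""
    stars_in_shell = 4.0 * math.pi * r ** 2 * dr * n_star
    solid_angle_per_star = math.pi * r_star ** 2 / r ** 2
    return stars_in_shell * solid_angle_per_star


def covering_distance(n_star, r_star):
    """Distance out to which the summed solid angle 4 pi^2 n R^2 r equals 4 pi."""
    return 1.0 / (math.pi * n_star * r_star ** 2)


# Hypothetical inputs, for illustration only.
R_SUN_MPC = 6.957e8 / 3.0857e22  # solar radius (m) converted to Mpc
N_STAR = 1.0e9                   # assumed mean stellar density in Mpc^-3

near_shell = shell_solid_angle(1.0, 0.1, N_STAR, R_SUN_MPC)
far_shell = shell_solid_angle(1000.0, 0.1, N_STAR, R_SUN_MPC)
d_cover = covering_distance(N_STAR, R_SUN_MPC)  # in Mpc
```

Here `near_shell` and `far_shell` agree, reflecting the cancellation of the two factors of $r^2$. For the assumed density, `d_cover` comes out enormously large, so the paradox arises only if the universe is infinite (or at least extremely large) and has existed long enough for the light to arrive.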

Source counts (8): Consider now a population of sources with a luminosity function that is constant in space and time, i.e., let $n(>L)$ be the spatial number density of sources with luminosity larger than $L$. A spherical shell of radius $r$ and thickness $\mathrm{d}r$ around us contains $4\pi r^2\,\mathrm{d}r\,n(>L)$ sources with luminosity larger than $L$. Because the observed flux $S$ is related to the luminosity via $L = 4\pi r^2 S$, the number of sources with flux $>S$ in this spherical shell is given as $\mathrm{d}N(>S) = 4\pi r^2\,\mathrm{d}r\,n(>4\pi r^2 S)$, and the total number of sources with flux $>S$ results from integration over the radii of the spherical shells,

$$N(>S) = \int_0^\infty \mathrm{d}r\,4\pi r^2\,n(>4\pi r^2 S).$$

Changing the integration variable to $L = 4\pi r^2 S$, or $r = \sqrt{L/(4\pi S)}$, with $\mathrm{d}r = \mathrm{d}L/(2\sqrt{4\pi L S})$, yields

$$N(>S) = \int_0^\infty \frac{\mathrm{d}L}{2\sqrt{4\pi L S}}\,\frac{L}{S}\,n(>L) = \frac{1}{4\sqrt{\pi}}\,S^{-3/2} \int_0^\infty \mathrm{d}L\,\sqrt{L}\,n(>L). \tag{4.2}$$

From this result we deduce that the source counts in such a universe are $N(>S) \propto S^{-3/2}$, independent of the luminosity function. This is in contradiction to the observations.

From these two contradictions (Olbers’ paradox and the non-Euclidean source counts) we conclude that at least one of

the assumptions must be wrong. Our Universe cannot be all four of

Euclidean, homogeneous, infinite, and static. The Hubble flow,

i.e., the redshift of galaxies, indicates that the assumption of a

static Universe is wrong.
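
The Euclidean slope of the source counts derived above can be verified numerically. The Python sketch below integrates $N(>S) = \int 4\pi r^2\,n(>4\pi r^2 S)\,\mathrm{d}r$ with a simple midpoint rule and measures the logarithmic slope between two flux limits. The exponential form of $n(>L)$, the unit choices $n_0 = L_* = 1$, the cutoff radius, and all function names are hypothetical choices for this example; any other luminosity function for which the integral converges should give the same slope.

```python
import math


def integrated_counts(flux, n_greater_than, r_max, steps=30000):
    """Midpoint-rule estimate of N(>S) = int_0^r_max 4 pi r^2 n(>4 pi r^2 S) dr."""
    dr = r_max / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * dr
        luminosity_limit = 4.0 * math.pi * r ** 2 * flux
        total += 4.0 * math.pi * r ** 2 * n_greater_than(luminosity_limit) * dr
    return total


def exponential_luminosity_function(luminosity, n0=1.0, l_star=1.0):
    """Hypothetical cumulative luminosity function n(>L) = n0 exp(-L / L*)."""
    return n0 * math.exp(-luminosity / l_star)


def log_slope(s1, s2, r_max=3.0):
    """Logarithmic slope d ln N(>S) / d ln S estimated between two flux limits."""
    n1 = integrated_counts(s1, exponential_luminosity_function, r_max)
    n2 = integrated_counts(s2, exponential_luminosity_function, r_max)
    return math.log(n2 / n1) / math.log(s2 / s1)


slope = log_slope(1.0, 4.0)
```

The cutoff `r_max` only needs to be large enough that the exponential has decayed to a negligible value; the resulting `slope` should be very close to $-3/2$, as (4.2) requires.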

The age of

globular clusters (5) requires that the Universe is at least

12 Gyr old because it cannot be younger than the oldest objects it

contains. Interestingly, the age estimates for globular clusters

yield values which are very close to the Hubble time $H_0^{-1} = 9.78\,h^{-1}$ Gyr. This

similarity suggests that the Hubble expansion may be directly

linked to the evolution of the Universe.

. This

similarity suggests that the Hubble expansion may be directly

linked to the evolution of the Universe.

. This

similarity suggests that the Hubble expansion may be directly

linked to the evolution of the Universe.

. This

similarity suggests that the Hubble expansion may be directly

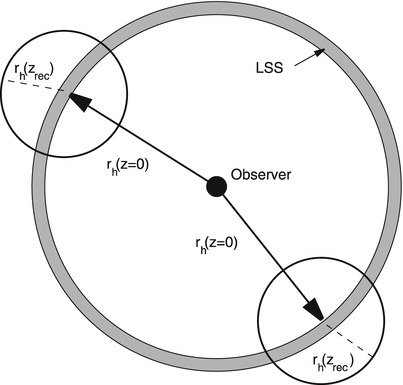

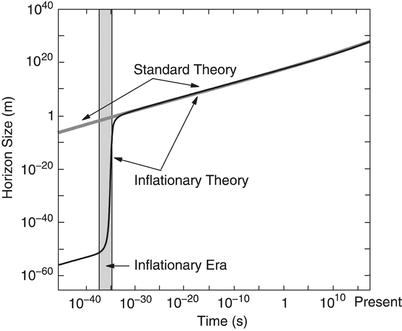

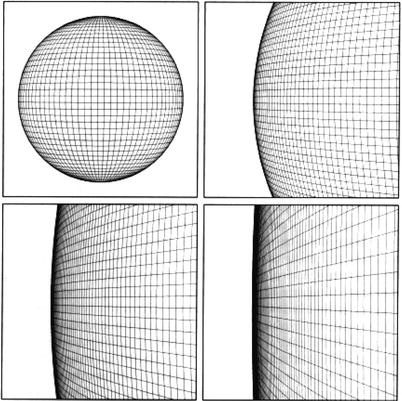

linked to the evolution of the Universe.The apparently isotropic distribution of galaxies (2), when

averaged over large scales, and the CMB isotropy (6) suggest that the

Universe around us is isotropic on large angular scales. Therefore

we will first consider a world model that describes the Universe

around us as isotropic. If we assume, in addition, that our place

in the cosmos is not privileged over any other place, then the

assumption of isotropy around us implies that the Universe appears

isotropic as seen from any other place. The homogeneity of the

Universe follows immediately from the isotropy around every

location, as explained in Fig. 4.5. The combined assumption of homogeneity and

isotropy of the Universe is also known as the cosmological principle. We will see

that a world model based on the cosmological principle in fact

provides an excellent description of numerous observational

facts.

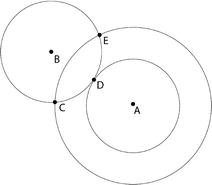

Fig. 4.5

Homogeneity follows from the isotropy

around two points. If the Universe is isotropic around observer B,

the densities at C, D, and E are equal. Drawing spheres of

different radii around observer A, it is seen that the region

within the spherical shell around A has to be homogeneous. By

varying the radius of the shell, we can conclude the whole Universe

must be homogeneous. Credit: J.A. Peacock 1999, Cosmological Physics, Cambridge

University Press

However, homogeneity is in principle unobservable

because observations of distant objects show those at an earlier

epoch. If the Universe evolves in time, as the aforementioned

observations suggest, evolutionary effects cannot directly be

separated from spatial variations.

The assumption of homogeneity of course breaks

down on small scales. We observe structures in the Universe, like

galaxies and clusters of galaxies, and even accumulations of

clusters of galaxies, so-called superclusters. Structures have been

found in redshift surveys that extend over $\sim 100\,h^{-1}$ Mpc. However, we have no indication of the existence of structures in the Universe with scales $\gg 100\,h^{-1}$ Mpc. This length-scale can be compared to a characteristic length of the Universe, which is obtained from the Hubble constant. If $H_0^{-1}$ specifies the characteristic age of our Universe, then light will travel a distance $c/H_0$ in this time. With this, we have obtained in problem 1.1 the Hubble radius as a characteristic length-scale of the Universe (or more precisely, of the observable Universe),

$$R_{\rm H} := \frac{c}{H_0} = 2997.9\,h^{-1}\,{\rm Mpc}. \tag{4.3}$$

The Hubble volume $\sim R_{\rm H}^3$ can contain a very large number of structures of size $\sim 100\,h^{-1}$ Mpc, so that it still makes sense to assume an on-average homogeneous cosmological model. Superposed on this homogeneous universe we then have density fluctuations that are identified with the observed large-scale structures; these will be discussed in detail in Chap. 7. To a first approximation we can neglect these density perturbations in a description of the Universe as a whole. We will therefore consider world models that are based on the cosmological principle, i.e., in which the universe looks the same for all observers (or, in other words, if observed from any point).
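
The characteristic scales used above follow directly from $H_0 = 100\,h\ {\rm km\,s^{-1}\,Mpc^{-1}}$. The short Python sketch below evaluates the Hubble time, the Hubble radius, and the rough number of $100\,h^{-1}$ Mpc structures that fit into a Hubble volume. The function names and the rounded unit conversions are assumptions of this example.

```python
KM_PER_MPC = 3.0857e19        # kilometers in one megaparsec (rounded)
SECONDS_PER_GYR = 3.15576e16  # seconds in 10^9 Julian years
C_KM_S = 299792.458           # speed of light in km / s


def hubble_time_gyr(h):
    """Hubble time 1 / H_0 in Gyr for H_0 = 100 h km/s/Mpc."""
    h0 = 100.0 * h
    return KM_PER_MPC / h0 / SECONDS_PER_GYR


def hubble_radius_mpc(h):
    """Hubble radius c / H_0 in Mpc for H_0 = 100 h km/s/Mpc."""
    return C_KM_S / (100.0 * h)


def structures_per_hubble_volume(size_mpc_over_h=100.0):
    """Rough count (R_H / size)^3; the factors of h cancel in the ratio."""
    return (hubble_radius_mpc(1.0) / size_mpc_over_h) ** 3


t_hubble = hubble_time_gyr(1.0)       # about 9.78 Gyr for h = 1
r_hubble = hubble_radius_mpc(1.0)     # about 2997.9 Mpc for h = 1
n_structures = structures_per_hubble_volume()
```

For any value of $h$, `n_structures` is of order a few times $10^4$, which supports treating the Universe as homogeneous on average even though structures of size $\sim 100\,h^{-1}$ Mpc exist.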

Homogeneous and isotropic world models are the

simplest cosmological solutions of the equations of General

Relativity (GR). We will examine how far such simple models are

compatible with observations. As we shall see, the application of

the cosmological principle results in the observational facts which

were mentioned in Sect. 4.1.1.

4.2 An expanding universe

Gravitation is the fundamental force in the

Universe. Only gravitational forces and electromagnetic forces can

act over large distances. Since cosmic matter is electrically

neutral on average, electromagnetic forces do not play any

significant role on large scales, so that gravity has to be

considered as the driving force in cosmology. The laws of gravity

are described by the theory of General Relativity, formulated by A.

Einstein in 1915. It contains Newton’s theory of gravitation as a

special case for weak gravitational fields and small spatial

scales. Newton’s theory of gravitation has been proven to be

eminently successful, e.g., in describing the motion of planets.

Thus it is tempting to try to design a cosmological model based on

Newtonian gravity. We will proceed to do that as a first step

because not only is this Newtonian cosmology very useful from a

didactic point of view, but one can also argue why the Newtonian

cosmos correctly describes the major aspects of a relativistic

cosmology.

4.2.1 Newtonian cosmology

The description of a gravitational system

necessitates the application of GR if the length-scales in the

system are comparable to the radius of curvature of spacetime; this

is certainly the case in our Universe. Even if we cannot explain at

this point what exactly the ‘curvature radius of the Universe’ is,

it should be plausible that it is of the same order of magnitude as

the Hubble radius $R_{\rm H}$. We will discuss this more thoroughly further below.

Despite this fact, one can expect that a Newtonian description is

essentially correct: in a homogeneous universe, any small spatial

region is characteristic for the whole universe. If the evolution

of a small region in space is known, we also know the history of

the whole universe, due to homogeneity. Moreover, on small scales,

the Newtonian approach is justified. We will therefore, based on

the cosmological principle, first consider spatially homogeneous

and isotropic world models in the framework of Newtonian

gravity.

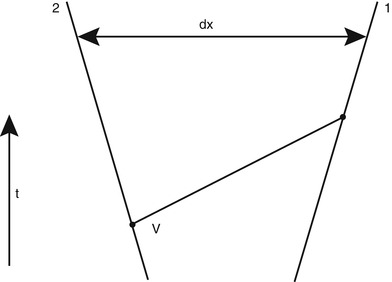

4.2.2 Kinematics of the Universe

Comoving

coordinates. We consider a homogeneous sphere which may be

radially expanding (or contracting); however, we require that the

density ρ(t) remains spatially homogeneous. The

density may vary in time due to expansion or contraction. We choose

a point t = t 0 in time and introduce a

coordinate system  at this instant with the origin

coinciding with the center of the sphere. A particle in the sphere

which is located at position

at this instant with the origin

coinciding with the center of the sphere. A particle in the sphere

which is located at position  at time t 0 will be located at some

other time $t$ at the position ${\boldsymbol r}(t)$ which results from the expansion of the sphere. Since the expansion is radial or, in other words, the velocity vector of a particle at position ${\boldsymbol r}(t)$ is parallel to ${\boldsymbol r}$, the direction of ${\boldsymbol r}(t)$ is constant. Because ${\boldsymbol r}(t_0) = {\boldsymbol x}$, this means that

$${\boldsymbol r}(t) = a(t)\,{\boldsymbol x}\;. \tag{4.4}$$

Since ${\boldsymbol r}$ and ${\boldsymbol x}$ both have the dimension of a length, the function $a(t)$ is dimensionless; it can depend only on time. Although requiring radial expansion alone could make $a$ depend on $|{\boldsymbol x}|$ as well, the requirement that the density remains homogeneous implies that $a$ must be spatially constant. The function $a(t)$ is called the cosmic scale factor; due to ${\boldsymbol r}(t_0) = {\boldsymbol x}$, it obeys

$$a(t_0) = 1\;. \tag{4.5}$$

The value of $t_0$ is arbitrary; we choose $t_0$ = today. Particles (or observers) which move according to (4.4) are called comoving particles (observers), and ${\boldsymbol x}$ is the comoving coordinate. The world line $({\boldsymbol r},t)$ of a comoving observer is unambiguously determined by ${\boldsymbol x}$, $({\boldsymbol r},t) = [a(t){\boldsymbol x},t]$.

Expansion rate. The velocity of such a comoving particle is obtained from the time derivative of its position,

$${\boldsymbol v}({\boldsymbol r},t) = \frac{{\rm d}}{{\rm d}t}{\boldsymbol r}(t) = \frac{{\rm d}a}{{\rm d}t}\,{\boldsymbol x} \equiv \dot a\,{\boldsymbol x} = \frac{\dot a}{a}\,{\boldsymbol r} \equiv H(t)\,{\boldsymbol r}\;, \tag{4.6}$$

where in the last step we defined the expansion rate

$$H(t) := \frac{\dot a}{a}\;. \tag{4.7}$$

The choice of this notation is not accidental, since $H$ is closely related to the Hubble constant. To see this, we consider the relative velocity vector $\Delta{\boldsymbol v}$ of two comoving particles at positions ${\boldsymbol r}$ and ${\boldsymbol r} + \Delta{\boldsymbol r}$, which follows directly from (4.6):

$$\Delta{\boldsymbol v} = {\boldsymbol v}({\boldsymbol r} + \Delta{\boldsymbol r},t) - {\boldsymbol v}({\boldsymbol r},t) = H(t)\,\Delta{\boldsymbol r}\;. \tag{4.8}$$

Hence, the relative velocity is proportional to the separation vector, so that the relative velocity is purely radial. Furthermore, the constant of proportionality $H(t)$ depends only on time but not on the position of the two particles. Obviously, (4.8) is very similar to the Hubble law

$$v = H_0\,D\;, \tag{4.9}$$

in which $v$ is the radial velocity of a source at distance $D$ from us. Therefore, setting $t = t_0$ and $H_0 \equiv H(t_0)$, (4.8) is simply the Hubble law; in other words, (4.8) is a generalization of (4.9) for arbitrary time. It expresses the fact that any observer expanding with the sphere will observe an isotropic velocity field that follows the Hubble law. Since we are observing an expansion today (sources are moving away from us), we have $H_0 > 0$, and $\dot a(t_0) > 0$.
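The statement that the ratio of relative velocity to separation is the same for every pair of comoving particles can be checked numerically. The following is a minimal sketch, not part of the original text: it assumes a toy scale factor $a(t) = t^{2/3}$ in units where $t_0 = 1$, and the function names are hypothetical.

```python
import math

def scale_factor(t):
    """Toy scale factor a(t) = t**(2/3), so that a(1) = 1 (assumed form, for illustration)."""
    return t ** (2.0 / 3.0)

def scale_factor_rate(t, dt=1e-6):
    """da/dt, estimated with a central finite difference."""
    return (scale_factor(t + dt) - scale_factor(t - dt)) / (2.0 * dt)

def expansion_rate(t):
    """H(t) = (da/dt) / a, the definition in (4.7)."""
    return scale_factor_rate(t) / scale_factor(t)

def relative_velocity_ratio(x1, x2, t):
    """Return |dv| / |dr| for two comoving particles with comoving coordinates x1, x2."""
    a = scale_factor(t)
    a_dot = scale_factor_rate(t)
    r1 = [a * xi for xi in x1]          # positions r = a(t) x, as in (4.4)
    r2 = [a * xi for xi in x2]
    v1 = [a_dot * xi for xi in x1]      # velocities v = (da/dt) x, as in (4.6)
    v2 = [a_dot * xi for xi in x2]
    return math.dist(v1, v2) / math.dist(r1, r2)

t = 2.0
ratio_a = relative_velocity_ratio((1.0, 0.0, 0.0), (0.0, 3.0, 1.0), t)
ratio_b = relative_velocity_ratio((-2.0, 5.0, 0.5), (4.0, 4.0, 4.0), t)
print(ratio_a, ratio_b, expansion_rate(t))
```

Both ratios agree with `expansion_rate(t)` regardless of which pair of particles is chosen, which is the content of (4.8). For the assumed $a(t)$ one has $H(t) = 2/(3t)$, so the common value at $t = 2$ is close to $1/3$.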

The kinematics of comoving observers in an expanding universe is analogous to that of raisins in a yeast dough. Once in the oven, the dough expands, and accordingly the positions of the raisins change. All raisins move away from all other ones, and the mutual radial velocity is proportional to the separation between any pair of raisins; i.e., their motion follows the Hubble law (4.8), with an expansion rate $H(t)$ which depends on the quality of the yeast and the temperature of the oven. The spatial position of each raisin at the time the oven is started uniquely identifies a raisin, and can be taken as its comoving coordinate ${\boldsymbol x}$, measured relative to the center of the dough. The spatial position ${\boldsymbol r}(t)$ at some later time $t$ is then given by (4.4), where $a(t)$ denotes the linear size of the dough at time $t$ relative to the size when the oven was started.

4.2.3 Dynamics of the expansion

The above discussion describes the kinematics of

the expansion. However, to obtain the behavior of the function

a(t) in time, and thus also the motion of

comoving observers and the time evolution of the density of the

sphere, it is necessary to consider the dynamics. The evolution of

the expansion rate is determined by self-gravity of the sphere,

from which it is expected that it will cause a deceleration of the

expansion.

Equation of motion. We therefore consider a spherical surface of radius $x$ at time $t_0$ and, accordingly, a radius $r(t) = a(t)\,x$ at arbitrary time $t$. The mass $M(x)$ enclosed in this comoving surface is constant in time, and is given by

$$M(x) = \frac{4\pi}{3}\,\rho_0\,x^3 = \frac{4\pi}{3}\,\rho(t)\,a^3(t)\,x^3\;, \tag{4.10}$$

where $\rho_0$ must be identified with the mass density of the universe today ($t = t_0$). The density is a function of time and, due to mass conservation, it is inversely proportional to the volume of the sphere,

$$\rho(t) = \rho_0\,a^{-3}(t)\;. \tag{4.11}$$

The gravitational acceleration of a particle on the spherical surface is $GM(x)/r^2$, directed towards the center. This then yields the equation of motion of the particle,

$$\ddot r(t) \equiv \frac{{\rm d}^2 r}{{\rm d}t^2} = -\frac{G\,M(x)}{r^2} = -\frac{4\pi G}{3}\,\frac{\rho_0\,x^3}{r^2}\;, \tag{4.12}$$

or, after substituting $r(t) = x\,a(t)$, an equation for $a$,

$$\ddot a(t) = \frac{\ddot r(t)}{x} = -\frac{4\pi G}{3}\,\frac{\rho_0}{a^2(t)} = -\frac{4\pi G}{3}\,\rho(t)\,a(t)\;. \tag{4.13}$$

It is important to note that this equation of motion does not depend on $x$. The dynamics of the expansion, described by $a(t)$, is determined solely by the matter density.
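To illustrate how the equation of motion for the scale factor, $\ddot a = -(4\pi G/3)\,\rho_0/a^2$, fixes $a(t)$ once initial conditions are chosen, the sketch below integrates it with a classical Runge-Kutta scheme. It is an illustration under stated assumptions rather than a method from the text: units are chosen such that $t_0 = 1$ and $C \equiv 4\pi G\rho_0/3 = 2/9$, and the initial velocity $\dot a(t_0) = 2/3$ is picked so that the exact solution is $a(t) = t^{2/3}$, which can be verified by direct substitution.

```python
def acceleration(a, C):
    """Right-hand side of (4.13): d^2a/dt^2 = -C / a**2, with C = 4*pi*G*rho_0/3."""
    return -C / a ** 2

def integrate_scale_factor(a0, adot0, C, t0, t1, n_steps=20000):
    """Integrate (4.13) from t0 to t1 with classical RK4; returns (a, da/dt) at t1."""
    h = (t1 - t0) / n_steps
    a, v = a0, adot0
    for _ in range(n_steps):
        k1a, k1v = v, acceleration(a, C)
        k2a, k2v = v + 0.5 * h * k1v, acceleration(a + 0.5 * h * k1a, C)
        k3a, k3v = v + 0.5 * h * k2v, acceleration(a + 0.5 * h * k2a, C)
        k4a, k4v = v + h * k3v, acceleration(a + h * k3a, C)
        a += h * (k1a + 2.0 * k2a + 2.0 * k3a + k4a) / 6.0
        v += h * (k1v + 2.0 * k2v + 2.0 * k3v + k4v) / 6.0
    return a, v

# Assumed units: t0 = 1, a(t0) = 1, C = 2/9, da/dt(t0) = 2/3.
C = 2.0 / 9.0
a_end, adot_end = integrate_scale_factor(1.0, 2.0 / 3.0, C, 1.0, 8.0)
print(a_end, adot_end)
```

With these choices the numerical result at $t = 8$ should be close to the exact values $a = 8^{2/3} = 4$ and $\dot a = 1/3$. The expansion velocity has decreased from $2/3$, which shows the deceleration caused by self-gravity.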

‘Conservation of energy’. Another way to describe the dynamics of the expanding shell is based on the law of energy conservation: the sum of kinetic and potential energy is constant in time. This conservation of energy is derived directly from (4.13). To do this, (4.13) is multiplied by $2\dot a$, and the resulting equation can be integrated with respect to time since ${\rm d}(\dot a^2)/{\rm d}t = 2\,\dot a\,\ddot a$, and ${\rm d}(-1/a)/{\rm d}t = \dot a/a^2$:

$$\dot a^2 = \frac{8\pi G}{3}\,\rho_0\,\frac{1}{a} - K c^2 = \frac{8\pi G}{3}\,\rho(t)\,a^2(t) - K c^2\;; \tag{4.14}$$

here, $Kc^2$ is a constant of integration that will be interpreted later. After multiplication with $x^2/2$, (4.14) can be written as

$$\frac{v^2(t)}{2} - \frac{G\,M}{r(t)} = -K c^2\,\frac{x^2}{2}\;,$$

which is interpreted such that the kinetic + potential energy (per unit mass) of a particle is a constant on the spherical surface. Thus (4.14) in fact describes the conservation of energy. The latter equation also immediately suggests an interpretation of the integration constant: $K$ is proportional to the total energy of a comoving particle, and thus the history of the expansion depends on $K$. The sign of $K$ characterizes the qualitative behavior of the cosmic expansion history.

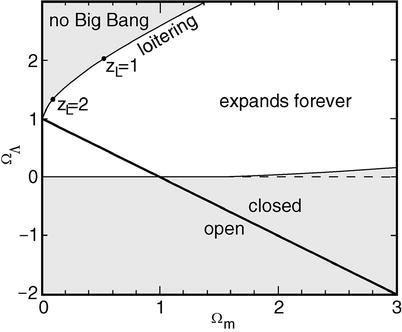

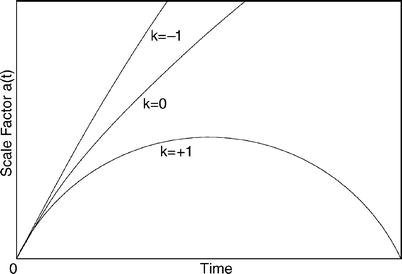

- If $K < 0$, the right-hand side of (4.14) is always positive. Since ${\rm d}a/{\rm d}t > 0$ today, ${\rm d}a/{\rm d}t$ remains positive for all times or, in other words, the universe will expand forever.

- If $K = 0$, the right-hand side of (4.14) is always positive, i.e., ${\rm d}a/{\rm d}t > 0$ for all times, and the universe will also expand forever, but in a way that ${\rm d}a/{\rm d}t \to 0$ for $t \to \infty$; that is, the asymptotic expansion velocity for $t \to \infty$ is zero.

- If $K > 0$, the right-hand side of (4.14) vanishes if $a = a_{\max} = (8\pi G\rho_0)/(3Kc^2)$. For this value of $a$, ${\rm d}a/{\rm d}t = 0$, and the expansion will come to a halt. After that, the expansion will turn into a contraction, and such a universe will re-collapse.
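The three cases can be summarized in a few lines of code. This is a minimal sketch, assuming the energy equation in the form $\dot a^2 = A/a - Kc^2$ with the shorthand $A \equiv 8\pi G\rho_0/3$ (the symbol $A$ and the function names are introduced here only for illustration); for $K > 0$ the right-hand side vanishes at $a = A/(Kc^2)$.

```python
def a_dot_squared(a, A, Kc2):
    """Right-hand side of (4.14): (da/dt)**2 = A / a - K c**2, with A = 8*pi*G*rho_0/3."""
    return A / a - Kc2

def expansion_fate(Kc2):
    """Classify the expansion history by the sign of K (A > 0 is assumed)."""
    if Kc2 < 0:
        return "expands forever, da/dt tends to a positive constant"
    if Kc2 == 0:
        return "expands forever, da/dt tends to zero"
    return "halts at a_max and re-collapses"

def a_max(A, Kc2):
    """Scale factor at which the right-hand side of (4.14) vanishes (K > 0 only)."""
    if Kc2 <= 0:
        raise ValueError("a_max exists only for K > 0")
    return A / Kc2

# Hypothetical numbers in units where the expansion rate today is 1:
# at a = 1, (da/dt)**2 = A - K c**2 = 1.5 - 0.5 = 1.
A, Kc2 = 1.5, 0.5
print(expansion_fate(Kc2), a_max(A, Kc2), a_dot_squared(a_max(A, Kc2), A, Kc2))
```

For these illustrative values the expansion stops at $a_{\max} = 3$, where `a_dot_squared` returns zero.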

In the special case of $K = 0$, which separates eternally expanding world models from those that will re-collapse in the future, the universe has a current density called critical density which can be inferred from (4.14) by setting $t = t_0$ and $H_0 = \dot a(t_0)$:

$$\rho_{\rm cr} := \frac{3 H_0^2}{8\pi G}\;. \tag{4.15}$$

Obviously, $\rho_{\rm cr}$ is a characteristic density of the current universe. As in many situations in physics, it is useful to express physical quantities in terms of dimensionless parameters, for instance the current cosmological density. We therefore define the density parameter

$$\Omega_0 := \frac{\rho_0}{\rho_{\rm cr}}\;, \tag{4.16}$$

where $K > 0$ corresponds to $\Omega_0 > 1$, and $K < 0$ corresponds to $\Omega_0 < 1$. Thus, $\Omega_0$ is one of the central cosmological parameters. Its accurate determination was possible only quite recently, and we shall discuss this in detail later. However, we should mention here that matter which is visible as stars contributes only a small fraction to the density of our Universe. But, as we already discussed in the context of rotation curves of spiral galaxies and the mass determination of elliptical galaxies from the gravitational lensing effect, we find clear indications of the presence of dark matter which can in principle dominate the value of $\Omega_0$. We will see that this is indeed the case.
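To get a feeling for the magnitude of the critical density, the short sketch below evaluates $\rho_{\rm cr} = 3H_0^2/(8\pi G)$, which follows from the energy equation with $K = 0$ at $t = t_0$. The value $H_0 = 70\,{\rm km\,s^{-1}\,Mpc^{-1}}$ is an assumption made only for this illustration, and the function names are hypothetical.

```python
import math

G = 6.674e-11          # gravitational constant in m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22   # one megaparsec in metres

def critical_density(H0_km_s_Mpc):
    """Critical density 3 H0^2 / (8 pi G) in kg/m^3, for H0 given in km/s/Mpc."""
    H0 = H0_km_s_Mpc * 1.0e3 / MPC_IN_M   # convert to s^-1
    return 3.0 * H0 ** 2 / (8.0 * math.pi * G)

def density_parameter(rho0, H0_km_s_Mpc):
    """Density parameter Omega_0 = rho_0 / rho_cr."""
    return rho0 / critical_density(H0_km_s_Mpc)

rho_cr = critical_density(70.0)   # assumed illustrative value of H0
print(rho_cr)
```

For the assumed $H_0$ this gives roughly $9 \times 10^{-27}\,{\rm kg\,m^{-3}}$, i.e., about $10^{-29}\,{\rm g\,cm^{-3}}$, equivalent to a few hydrogen atoms per cubic metre.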

4.2.4 Modifications due to General Relativity

The Newtonian approach contains nearly all

essential aspects of homogeneous and isotropic world models,

otherwise we would not have discussed it in detail. Most of the

above equations are also valid in relativistic cosmology, although

the interpretation needs to be altered. In particular, the image of

an expanding sphere needs to be revised—this picture implies that a

‘center’ of the universe exists. Such a picture implicitly

contradicts the cosmological principle in which no point is singled

out over others—our Universe neither has a center, nor is it

expanding away from a privileged point. However, the image of a

sphere does not show up in any of the relevant equations:

(4.11) for

the evolution of the cosmological density and (4.13)

and (4.14) for the evolution of the scale factor

a(t) contain no quantities that refer to

a sphere.

General Relativity modifies the Newtonian model

in several respects:

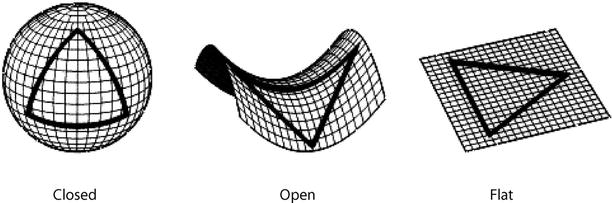

- We know from the theory of Special Relativity that mass and energy are equivalent, according to Einstein’s famous relation $E = m c^2$. This implies that it is not only the matter density that contributes to the equations of motion. For example, a radiation field like the CMB has an energy density and, due to the equivalence above, this has to enter the expansion equations. We will see below that such a radiation field can be characterized as matter with pressure. The pressure will then explicitly appear in the equation of motion for $a(t)$.

- The field equation of GR as originally formulated by Einstein did not permit a solution which corresponds to a homogeneous, isotropic, and static cosmos. But since Einstein, like most of his contemporaries, believed the Universe to be static, he modified his field equations by introducing an additional term, the cosmological constant.

- The interpretation of the expansion is changed completely: it is not the particles or the observers that are expanding away from each other, nor is the Universe an expanding sphere. Instead, it is space itself that expands. In particular, the redshift is no Doppler redshift, but is itself a property of expanding spacetimes. However, we may still visualize redshift locally as being due to the Doppler effect without making a substantial conceptual error.2

In the following, we will explain the first two

aspects in more detail.

First law of thermodynamics. When air is compressed, for instance when pumping up a tire, it heats up. The temperature increases and accordingly so does the thermal energy of the air. In the language of thermodynamics, this fact is described by the first law: the change in internal energy ${\rm d}U$ through an (adiabatic) change in volume ${\rm d}V$ equals the work ${\rm d}U = -P\,{\rm d}V$, where $P$ is the pressure in the gas. From the equations of GR as applied to a homogeneous isotropic cosmos, a relation is derived which reads

$$\frac{{\rm d}}{{\rm d}t}\left(c^2\,\rho\,a^3\right) = -P\,\frac{{\rm d}a^3}{{\rm d}t}\;, \tag{4.17}$$

in full analogy to this law. Here, $\rho c^2$ is the energy density, i.e., for ‘normal’ matter, $\rho$ is the mass density, and $P$ is the pressure of the matter. If we now consider a constant comoving volume element $V_x$, then its physical volume $V = a^3(t)\,V_x$ will change due to expansion. Thus, $a^3 = V/V_x$ is the volume, and $c^2\rho\,a^3$ the energy contained in the volume, each divided by $V_x$. Taken together, (4.17) corresponds to the first law of thermodynamics in an expanding universe.

The Friedmann–Lemaître expansion equations. Next, we will present equations for the scale factor $a(t)$ which follow from GR for a homogeneous isotropic universe. Afterwards, we will derive these equations from the relations stated above. As we shall see, the modifications by GR are in fact only minor, as expected from the argument that a small section of a homogeneous universe characterizes the cosmos as a whole. The field equations of GR yield the equations of motion

$$\left(\frac{\dot a}{a}\right)^2 = \frac{8\pi G}{3}\,\rho - \frac{K c^2}{a^2} + \frac{\Lambda}{3} \tag{4.18}$$

and

$$\frac{\ddot a}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3P}{c^2}\right) + \frac{\Lambda}{3}\;, \tag{4.19}$$

where $\Lambda$ is the aforementioned cosmological constant introduced by Einstein.3 Compared to (4.13) and (4.14), these two equations have been changed in two places. First, the cosmological constant occurs in both equations, and second, the equation of motion (4.19) now contains a pressure term. The pair of (4.18) and (4.19) are called the Friedmann equations.

The cosmological constant. When Einstein introduced the $\Lambda$-term into his equations, he did this solely for the purpose of obtaining a static solution for the resulting expansion equations. We can easily see that (4.18) and (4.19), without the $\Lambda$-term, have no solution for $\dot a \equiv 0$. However, if the $\Lambda$-term is included, such a solution can be found (which is irrelevant, however, as we now know that the Universe is expanding). Einstein had no real physical interpretation for this constant, and after the expansion of the Universe was discovered he discarded it again. But with the genie out of the bottle, the cosmological constant remained in the minds of cosmologists, and their attitude towards $\Lambda$ has changed frequently in the past 90 years. Around the turn of the millennium, observations were made which strongly suggest a non-vanishing cosmological constant, and the evidence has been further strengthened since, as will be detailed in Chap. 8. Today we know that our Universe has a non-zero cosmological constant, or at least something very similar to it.

But the physical interpretation of the

cosmological constant has also been modified. In quantum mechanics

even completely empty space, the so-called vacuum, may have a

finite energy density, the vacuum energy density. For physical

measurements not involving gravity, the value of this vacuum energy

density is of no relevance since those measurements are only

sensitive to energy differences. For example, the energy of

a photon that is emitted in an atomic transition equals the energy

difference between the two corresponding states in the atom. Thus

the absolute energy of a state is measurable only up to a constant.

Only in gravity does the absolute energy become important, because

E = m c 2 implies that it

corresponds to a mass.

It is now found that the cosmological constant is

equivalent to a finite vacuum energy density—the equations of GR,

and thus also the expansion equations, are not affected by this new

interpretation. We will explain this fact in the following.
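The role of $\Lambda$ in permitting a static solution can be made concrete with a small numerical check. The sketch below assumes the standard form of the two Friedmann equations, $(\dot a/a)^2 = 8\pi G\rho/3 - Kc^2/a^2 + \Lambda/3$ and $\ddot a/a = -(4\pi G/3)(\rho + 3P/c^2) + \Lambda/3$, uses units with $G = 1$, and considers pressure-free matter; the function names and numerical values are illustrative assumptions. Setting both left-hand sides to zero gives $\Lambda = 4\pi G\rho$ and $Kc^2/a^2 = 4\pi G\rho$.

```python
import math

def hubble_squared(rho, a, Kc2, Lam, G=1.0):
    """Right-hand side of the first Friedmann equation, (adot/a)**2."""
    return 8.0 * math.pi * G * rho / 3.0 - Kc2 / a ** 2 + Lam / 3.0

def accel_over_a(rho, P_over_c2, Lam, G=1.0):
    """Right-hand side of the second Friedmann equation, addot/a."""
    return -4.0 * math.pi * G * (rho + 3.0 * P_over_c2) / 3.0 + Lam / 3.0

rho, a = 1.0, 1.0   # arbitrary illustrative values, units with G = 1

# Without Lambda, matter with rho > 0 and P >= 0 always decelerates: no static solution.
no_lambda = accel_over_a(rho, 0.0, 0.0)

# With Lambda = 4 pi G rho and K c^2 = 4 pi G rho a^2, both right-hand sides vanish.
Lam_static = 4.0 * math.pi * rho
Kc2_static = 4.0 * math.pi * rho * a ** 2
static_H2 = hubble_squared(rho, a, Kc2_static, Lam_static)
static_acc = accel_over_a(rho, 0.0, Lam_static)
print(no_lambda, static_H2, static_acc)
```

Note that the static solution requires $K > 0$, and that it only exists for one finely tuned value of $\Lambda$ at a given density.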

4.2.5 The components of matter in the Universe

Starting from the equation of energy

conservation (4.14), we will now derive the relativistically

correct expansion equations (4.18)

and (4.19). The only change with respect to the

Newtonian approach in Sect. 4.2.3 will be that we introduce other forms of

matter. The essential components of our Universe can be described

as pressure-free matter, radiation, and vacuum energy.

Pressure-free matter. The pressure in a gas is determined by the thermal motion of its constituents. At room temperature, molecules in the air move at a speed comparable to the speed of sound, $c_{\rm s} \sim 300\,{\rm m/s} \ll c$. For such a gas, $P \sim \rho\,c_{\rm s}^2 \ll \rho\,c^2$, so that its pressure is of course gravitationally completely insignificant. In cosmology, a substance with $P \ll \rho c^2$ is denoted as (pressure-free) matter, also called cosmological dust.4 We approximate $P_{\rm m} = 0$, where the index ‘m’ stands for matter. The constituents of the (pressure-free) matter move with velocities much smaller than $c$.

Radiation. If this condition is no longer satisfied, thus if the thermal velocities are no longer negligible compared to the speed of light, then the pressure will also no longer be small compared to $\rho c^2$. In the limiting case that the thermal velocity equals the speed of light, we denote this component as ‘radiation’. One example of course is electromagnetic radiation, in particular the CMB photons. Another example would be other particles of vanishing rest mass. Even particles of finite mass can have a thermal velocity very close to $c$ if the thermal energy of the particles is much larger than the rest mass energy, i.e., $k_{\rm B} T \gg m c^2$. In these cases, the pressure is related to the density via the equation of state for radiation,

$$P_{\rm r} = \frac{1}{3}\,\rho_{\rm r}\,c^2\;. \tag{4.20}$$
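The contrast between the two regimes is easy to quantify with an order-of-magnitude estimate. The sketch below assumes $P/(\rho c^2) \sim (v/c)^2$ for a gas whose constituents move with a typical thermal speed $v$, and takes $v \approx 300\,{\rm m/s}$ for air at room temperature as an illustrative value; the function name is hypothetical.

```python
C_LIGHT = 299_792_458.0   # speed of light in m/s

def pressure_to_rest_energy_ratio(v_thermal):
    """Order-of-magnitude estimate P / (rho c^2) ~ (v_thermal / c)**2."""
    return (v_thermal / C_LIGHT) ** 2

ratio_air = pressure_to_rest_energy_ratio(300.0)   # assumed thermal speed of air molecules
ratio_radiation = 1.0 / 3.0                        # radiation: P = rho c^2 / 3
print(ratio_air, ratio_radiation)
```

For air the ratio is of order $10^{-12}$, which is why setting $P_{\rm m} = 0$ is an excellent approximation, whereas for radiation the pressure is a sizeable fraction of the energy density.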

The pressure of

radiation. Pressure is defined as the momentum transfer onto

a perfectly reflecting wall per unit time and per unit area.

Consider an isotropic distribution of photons (or another kind of

particle) moving with the speed of light. The momentum of a photon

is given in terms of its energy as  , where h P is the Planck constant.

Consider now an area element dA of the wall; the momentum transferred

to it per unit time is given by the momentum transfer per photon,

times the number of photons hitting the area dA per unit time. We will assume for the

moment that all photons have the same frequency. If θ denotes the direction of a photon

relative to the normal of the wall, the momentum component

perpendicular to the wall before scattering is p ⊥ = pcosθ, and after scattering

, where h P is the Planck constant.

Consider now an area element dA of the wall; the momentum transferred

to it per unit time is given by the momentum transfer per photon,

times the number of photons hitting the area dA per unit time. We will assume for the

moment that all photons have the same frequency. If θ denotes the direction of a photon

relative to the normal of the wall, the momentum component

perpendicular to the wall before scattering is p ⊥ = pcosθ, and after scattering  ; the two other momentum

components are unchanged by the reflection. Thus, the momentum

transfer per photon scattering is Δ p = 2pcosθ. The number of photons scattering per

unit time within the area dA is given by the number density of

photons, n

γ times the area

element dA, times the

thickness of the layer from which photons arrive at the wall per

unit time. The latter is given by ccosθ, since only the perpendicular

velocity component brings them closer to the wall. Putting these

terms together, we find for the momentum transfer to the wall per

unit time per unit area the expression

; the two other momentum

components are unchanged by the reflection. Thus, the momentum

transfer per photon scattering is Δ p = 2pcosθ. The number of photons scattering per

unit time within the area dA is given by the number density of

photons, n

γ times the area

element dA, times the

thickness of the layer from which photons arrive at the wall per

unit time. The latter is given by ccosθ, since only the perpendicular

velocity component brings them closer to the wall. Putting these

terms together, we find for the momentum transfer to the wall per

unit time per unit area the expression

Averaging this expression over a half-sphere (only photons moving

towards the wall can hit it) then yields

Averaging this expression over a half-sphere (only photons moving

towards the wall can hit it) then yields

where u γ = ρ r c 2 is the energy density of

the photons. Since this final expression does not depend on the

photon frequency, the assumption of a mono-chromatic distribution

is not important, and the result applies to any frequency

distribution.

where u γ = ρ r c 2 is the energy density of

the photons. Since this final expression does not depend on the

photon frequency, the assumption of a mono-chromatic distribution

is not important, and the result applies to any frequency

distribution.

, where h P is the Planck constant.

Consider now an area element dA of the wall; the momentum transferred

to it per unit time is given by the momentum transfer per photon,

times the number of photons hitting the area dA per unit time. We will assume for the

moment that all photons have the same frequency. If θ denotes the direction of a photon

relative to the normal of the wall, the momentum component

perpendicular to the wall before scattering is p ⊥ = pcosθ, and after scattering

, where $h_{\rm P}$ is the Planck constant.

Consider now an area element $dA$ of the wall; the momentum transferred to it per unit time is given by the momentum transfer per photon, times the number of photons hitting the area $dA$ per unit time. We will assume for the moment that all photons have the same frequency. If $\theta$ denotes the direction of a photon relative to the normal of the wall, the momentum component perpendicular to the wall is $p_\perp = p\cos\theta$ before scattering, and $p_\perp = -p\cos\theta$ after scattering; the two other momentum components are unchanged by the reflection. Thus, the momentum transfer per photon scattering is $\Delta p = 2p\cos\theta$. The number of photons scattering per unit time within the area $dA$ is given by the number density of photons, $n_\gamma$, times the area element $dA$, times the thickness of the layer from which photons arrive at the wall per unit time. The latter is given by $c\cos\theta$, since only the perpendicular velocity component brings them closer to the wall. Putting these terms together, we find for the momentum transfer to the wall per unit time per unit area the expression

$$2p\cos\theta \; n_\gamma \; c\cos\theta = 2\,p\,c\,n_\gamma\cos^2\theta .$$
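The angular dependence $\cos^2\theta$ of the momentum transfer can be averaged numerically. The following is a minimal sketch, assuming that the photon directions are isotropic (an assumption made here for illustration) so that a fraction $\tfrac{1}{2}\sin\theta\,d\theta$ of all photons moves at an angle between $\theta$ and $\theta + d\theta$ to the wall normal, and that only photons with $0 \le \theta \le \pi/2$ reach the wall. The function name is hypothetical. The result is the pressure in units of $n_\gamma\,p\,c$.

```python
import math

def hemisphere_pressure_factor(n_steps: int = 100_000) -> float:
    """Return P / (n_gamma * p * c) for isotropic photons reflected by a wall.

    Assumption: isotropic directions, so the fraction of photons with angle
    in [theta, theta + d_theta] to the wall normal is 0.5 * sin(theta) * d_theta.
    Only the half-sphere 0 <= theta <= pi/2 moves towards the wall.
    """
    d_theta = (math.pi / 2) / n_steps
    total = 0.0
    for i in range(n_steps):
        theta = (i + 0.5) * d_theta                  # midpoint rule
        # momentum transfer 2 p cos(theta) times layer thickness c cos(theta),
        # expressed in units of p * c
        per_direction = 2.0 * math.cos(theta) ** 2
        weight = 0.5 * math.sin(theta) * d_theta     # isotropic fraction
        total += per_direction * weight
    return total

factor = hemisphere_pressure_factor()
print(f"P / (n_gamma p c) = {factor:.6f}")           # close to 1/3
```

With $p\,c = h_{\rm P}\nu$, a factor of one third corresponds to a pressure of one third of the photon energy density, the familiar equation of state of radiation.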

Vacuum energy. The equation of state for vacuum energy takes a very unusual form which results from the first law of thermodynamics. Because the energy density $\rho_{\rm v}$ of the vacuum is constant in space and time, (4.17) immediately yields the relation

$$P_{\rm v} = -\rho_{\rm v}\,c^2 . \tag{4.21}$$

Thus the vacuum energy has a negative pressure. This unusual form of an equation of state can also be made plausible as follows: consider the change of a volume $V$ that contains only vacuum. Since the internal energy is $U \propto V$, and thus a growth by $dV$ implies an increase in $U$, the first law $dU = -P\,dV$ demands that $P$ be negative.
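The step from the first law to the vacuum equation of state can be written out explicitly. The sketch below assumes that (4.17), which is stated outside this passage, is the first law in the form $\frac{d}{dt}(c^2\rho a^3) = -P\,\frac{d}{dt}(a^3)$.

```latex
\begin{align}
\frac{d}{dt}\left(c^2 \rho_{\rm v}\, a^3\right)
  &= -P_{\rm v}\,\frac{d}{dt}\left(a^3\right) \\
c^2 \rho_{\rm v}\,\frac{d}{dt}\left(a^3\right)
  &= -P_{\rm v}\,\frac{d}{dt}\left(a^3\right)
  \qquad \text{(since } \rho_{\rm v} = \text{const.)} \\
P_{\rm v} &= -\rho_{\rm v}\, c^2
\end{align}
```

The last line follows because the relation must hold for any expansion history, i.e., for any nonzero $\frac{d}{dt}(a^3)$.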

4.2.6 “Derivation” of the expansion equation

Beginning with the equation of energy conservation (4.14), we are now able to derive the expansion equations (4.18) and (4.19). To achieve this, we differentiate both sides of (4.14) with respect to $t$ and obtain

$$2\,\dot a\,\ddot a = \frac{8\pi G}{3}\left(\dot\rho\, a^2 + 2\,a\,\dot a\,\rho\right) .$$

Next, we carry out the differentiation in (4.17), thereby obtaining $\dot\rho\, a^3 + 3\rho\, a^2 \dot a = -3P a^2 \dot a / c^2$. This relation is then used to replace the term containing $\dot\rho$ in the previous equation, yielding

$$\frac{\ddot a}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3P}{c^2}\right) . \tag{4.22}$$

This derivation therefore reveals that the pressure term in the equation of motion results from the combination of energy conservation and the first law of thermodynamics. However, we point out that the first law in the form (4.17) is based explicitly on the equivalence of mass and energy, resulting from Special Relativity. When assuming this equivalence, we indeed obtain the Friedmann equations from Newtonian cosmology, as expected from the discussion at the beginning of Sect. 4.2.1.
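For readers who wish to follow the algebra, the substitution can be spelled out step by step. This is a sketch under two assumptions about equations stated outside this passage: that (4.14) reads $\dot a^2 = \frac{8\pi G}{3}\rho a^2 - Kc^2$ with a constant $K$, and that (4.17) reads $\frac{d}{dt}(c^2\rho a^3) = -P\,\frac{d}{dt}(a^3)$.

```latex
\begin{align}
% time derivative of (4.14); the constant K c^2 drops out
2\,\dot a\,\ddot a
  &= \frac{8\pi G}{3}\left(\dot\rho\,a^2 + 2\,a\,\dot a\,\rho\right) \\
% (4.17) written out and divided by a
\dot\rho\,a^2
  &= -3\,a\,\dot a\left(\rho + \frac{P}{c^2}\right) \\
% insert the second line into the first
2\,\dot a\,\ddot a
  &= \frac{8\pi G}{3}\,a\,\dot a
     \left(-3\rho - \frac{3P}{c^2} + 2\rho\right)
   = -\frac{8\pi G}{3}\,a\,\dot a\left(\rho + \frac{3P}{c^2}\right) \\
% divide by 2 a \dot a
\frac{\ddot a}{a}
  &= -\frac{4\pi G}{3}\left(\rho + \frac{3P}{c^2}\right)
\end{align}
```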

Next we consider the three aforementioned components of the cosmos and write the density and pressure as the sum of dust, radiation, and vacuum energy,

$$\rho = \rho_{\rm m} + \rho_{\rm r} + \rho_{\rm v} = \rho_{\rm m+r} + \rho_{\rm v} , \qquad P = P_{\rm r} + P_{\rm v} = P_{\rm r} - \rho_{\rm v}\,c^2 ,$$

where $\rho_{\rm m+r}$ combines the density in matter and radiation. In the second equation, the pressureless nature of matter, $P_{\rm m} = 0$, was used so that $P_{\rm m+r} = P_{\rm r}$. By inserting the first of these equations into (4.14), we indeed obtain the first Friedmann equation (4.18) if the density $\rho$ there is identified with $\rho_{\rm m+r}$ (the density in ‘normal matter’), and if

$$\Lambda = 8\pi G\,\rho_{\rm v} . \tag{4.23}$$

Furthermore, we insert the above decomposition of density and pressure into the equation of motion (4.22) and immediately obtain (4.19) if we identify $\rho$ and $P$ with $\rho_{\rm m+r}$ and $P_{\rm m+r} = P_{\rm r}$, respectively. Hence, this approach yields both Friedmann equations; the density and the pressure in the Friedmann equations refer to normal matter, i.e., all matter except the contribution by $\Lambda$. Alternatively, the $\Lambda$-terms in the Friedmann equations may be discarded if instead the vacuum energy density and its pressure are explicitly included in $P$ and $\rho$.
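The equivalence of the two conventions can be checked numerically. The following is a minimal sketch, assuming that (4.19) has the form $\ddot a/a = -\frac{4\pi G}{3}(\rho + 3P/c^2) + \Lambda/3$ and that radiation obeys $P_{\rm r} = \rho_{\rm r} c^2/3$. The function names and the density values are illustrative assumptions, not taken from the text.

```python
import math

G = 6.674e-11   # gravitational constant in m^3 kg^-1 s^-2
C = 2.998e8     # speed of light in m/s

def acceleration_with_lambda(rho_m, rho_r, lam):
    """a_ddot / a with 'normal matter' only and an explicit Lambda/3 term."""
    rho = rho_m + rho_r
    pressure = rho_r * C**2 / 3.0            # matter is pressureless
    return -4.0 * math.pi * G / 3.0 * (rho + 3.0 * pressure / C**2) + lam / 3.0

def acceleration_with_vacuum(rho_m, rho_r, rho_v):
    """a_ddot / a from (4.22) with the vacuum included in rho and P."""
    rho = rho_m + rho_r + rho_v
    pressure = rho_r * C**2 / 3.0 - rho_v * C**2   # P_v = -rho_v c^2
    return -4.0 * math.pi * G / 3.0 * (rho + 3.0 * pressure / C**2)

# Illustrative densities in kg m^-3 (assumed values for the demonstration only).
rho_m, rho_r, rho_v = 2.7e-27, 8.0e-31, 6.0e-27
lam = 8.0 * math.pi * G * rho_v              # relation (4.23)

acc_lambda = acceleration_with_lambda(rho_m, rho_r, lam)
acc_vacuum = acceleration_with_vacuum(rho_m, rho_r, rho_v)
print(acc_lambda, acc_vacuum)
print("conventions agree:", math.isclose(acc_lambda, acc_vacuum, rel_tol=1e-9))
```

Both functions return the same value up to rounding, which is what the identification $\Lambda = 8\pi G\rho_{\rm v}$ is meant to guarantee.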

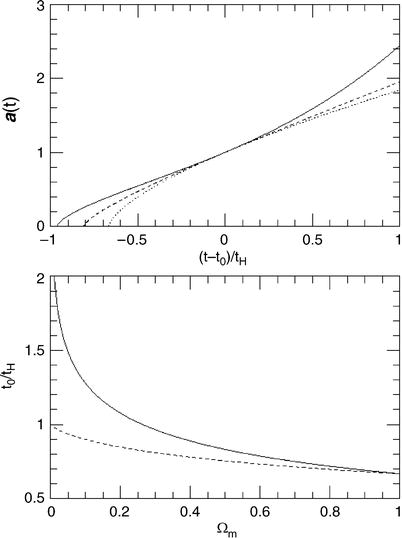

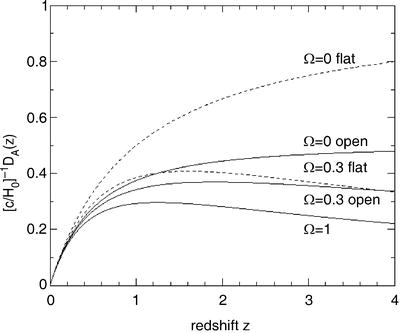

4.2.7 Discussion of the expansion equations

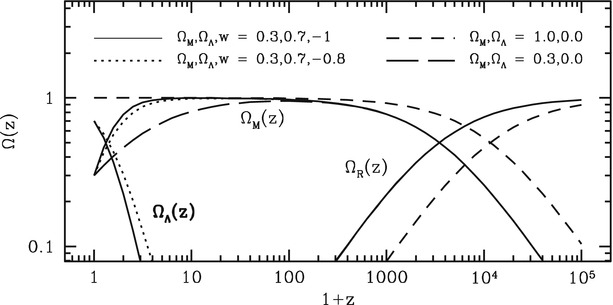

Following the ‘derivation’ of the expansion equations, we will now discuss their consequences. First we consider the density evolution of the various cosmic components resulting from (4.17). For pressure-free matter, we immediately obtain $\rho_{\rm m} \propto a^{-3}$, which is in agreement with (4.11). Inserting the equation of state (4.20) for radiation into (4.17) yields the behavior $\rho_{\rm r} \propto a^{-4}$; the vacuum energy density is a constant in time. Hence

$$\rho_{\rm m}(t) = \rho_{\rm m,0}\,a^{-3}(t) ; \qquad \rho_{\rm r}(t) = \rho_{\rm r,0}\,a^{-4}(t) ; \qquad \rho_{\rm v}(t) = \rho_{\rm v} = \text{const.} , \tag{4.24}$$

where the index ‘0’ indicates the current time, $t = t_0$. The physical origin of the $a^{-4}$ dependence of the radiation density is seen as follows: as for matter, the number density of photons changes $\propto a^{-3}$ because the number of photons in a comoving volume is unchanged. However, photons are redshifted by the cosmic expansion. Their wavelength $\lambda$ changes proportional to $a$ (see Sect. 4.3.2). Since the energy of a photon is $E = h_{\rm P}\nu$ and $\nu = c/\lambda$, the energy of a photon changes as $a^{-1}$ due to cosmic expansion so that the photon energy density changes $\propto a^{-4}$.
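The scalings in (4.24) and the photon-counting argument for the $a^{-4}$ law can be illustrated with a few lines of code. This is a minimal sketch; the function names and the present-day values, given in arbitrary units, are assumptions chosen for the demonstration.

```python
import math

def component_densities(a, rho_m0, rho_r0, rho_v):
    """Densities of matter, radiation, and vacuum at scale factor a, as in (4.24)."""
    return rho_m0 * a**-3, rho_r0 * a**-4, rho_v

def photon_energy_density(a, n0, e0):
    """Photon number density (scales as a^-3) times energy per photon (scales as a^-1)."""
    number_density = n0 * a**-3      # photons per comoving volume are conserved
    energy_per_photon = e0 * a**-1   # wavelength stretches in proportion to a
    return number_density * energy_per_photon

# Illustrative present-day values in arbitrary units (assumed, not from the text).
RHO_M0, RHO_R0, RHO_V = 1.0, 1.0e-4, 2.0
N0, E0 = 1.0e-4, 1.0                 # chosen so that N0 * E0 equals RHO_R0

for a in (1.0, 0.5, 0.1):
    rho_m, rho_r, rho_v = component_densities(a, RHO_M0, RHO_R0, RHO_V)
    u_photon = photon_energy_density(a, N0, E0)
    print(f"a={a:4.1f}  rho_m={rho_m:10.3e}  rho_r={rho_r:10.3e}  "
          f"rho_v={rho_v:10.3e}  n*E={u_photon:10.3e}")
```

The last two columns agree at every scale factor, and the ratio $\rho_{\rm r}/\rho_{\rm m}$ grows as $a^{-1}$ towards earlier times.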
