The past decade has been a tremendously active

and fruitful time for extragalactic astronomy and cosmology, as

hopefully is well documented in the previous chapters. Here, we

will try to see what progress we may expect for the near and not-so

near future.

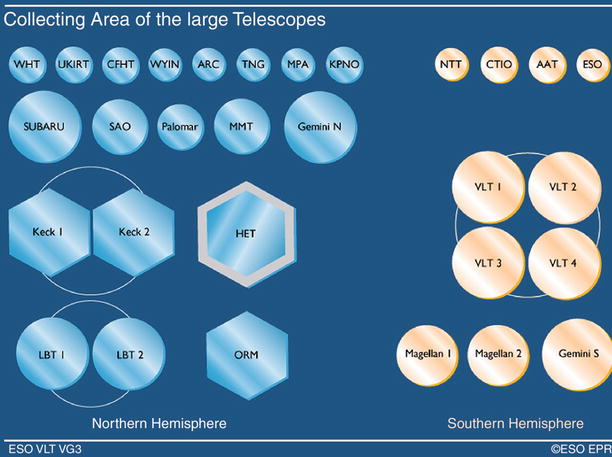

Fig. 11.1

The collecting area of large optical

telescopes is displayed. Those in the Northern hemisphere are shown

on the left, whereas

southern telescopes are shown on the right. The joint collecting area of

these telescopes has been increased by a large factor over the past

two decades: only the telescopes shown in the upper row plus the 5-m Palomar

telescope and the 6-m SAO were in operation before 1993. If, in

addition, the parallel development of detectors is considered, it

is easy to understand why observational astronomy is making such

rapid progress. Credit: European Southern Observatory

11.1 Continuous progress

Progress in (extragalactic) astronomy is achieved

through information obtained from increasingly improving

instruments and by refining our theoretical understanding of

astrophysical processes, which in turn is driven by observational

results. It is easy to foresee that the evolution of instrumental

capabilities will continue rapidly in the near future, enabling us

to perform better and more detailed studies of cosmic sources.

Before we mention some of the forthcoming astronomical

facilities, it should be pointed out that some of the recently

started projects have at best skimmed the cream, and the bulk of

the results is yet to come. This concerns the scientific output

from the Herschel and Planck satellite missions, as well as the

recently commissioned ALMA interferometer, which has already

provided exciting results. The great scientific capabilities of

these facilities have been impressively documented, so it is easy

to predict that far more scientific breakthroughs are waiting to be

achieved with them.

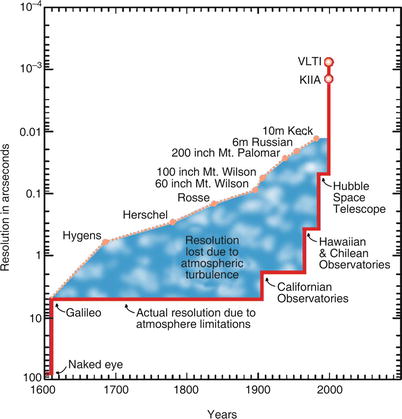

Fig. 11.2

This figure illustrates the evolution of

angular resolution as a function of time. The upper dotted curve describes the

angular resolution that would be achieved in the case of

diffraction-limited imaging, which depends, at fixed wavelength,

only on the aperture of the telescope. Some historically important

telescopes are indicated. The lower curve shows the angular

resolution actually achieved. This is mainly limited by atmospheric

turbulence, i.e., seeing, and thus is largely independent of the

size of the telescope. Instead, it mainly depends on the quality of

the atmospheric conditions at the observatories. For instance, we

can clearly recognize how the opening of the observatories on Mount

Palomar, and later on Mauna Kea, La Silla and Paranal have led to

breakthroughs in resolution. A further large step was achieved with

HST, which is unaffected by atmospheric turbulence and is therefore

diffraction limited. Adaptive optics and interferometry

characterize the next essential improvements. Credit: European

Southern Observatory

Within a relatively short period of ∼ 15 years,

the total collecting area of large optical telescopes has increased

by a large factor, as is illustrated in Fig. 11.1. At the present time,

13 telescopes with apertures above 8 m (and four more with an

aperture of 6.5 m) are in operation, the first of which, Keck I,

was put into operation in 1993. In addition, the development of

adaptive optics allows us to obtain diffraction-limited angular

resolution from ground-based observations (see Fig. 11.2).

The capability of existing telescopes is

continuously improved by installing new sensitive instrumentation.

The successful first generation of instruments for the 10-m class

telescopes is being replaced step by step by more powerful instruments.

As an example, the Subaru telescope will be equipped with Hyper

Suprime-Cam, a 1.5 deg² camera, by far the largest of

its kind on a 10-m class telescope. This instrument will allow

large-area deep imaging surveys to be conducted, e.g., for cosmic

shear studies.

In another step to improve angular resolution,

optical and NIR interferometry will increasingly be employed. For

example, the two Keck telescopes (Fig. 1.38) are mounted such that they can

be used for interferometry. The four unit telescopes of the VLT can

be combined, either with each other or with additional (auxiliary)

smaller telescopes, to act as an interferometer (see

Fig. 1.39). The auxiliary telescopes can

be placed at different locations, thus yielding different baselines

and thereby increasing the coverage in angular resolution. Finally,

the Large Binocular Telescope (LBT, see Fig. 1.44), which consists of two 8.4-m

telescopes mounted on the same platform, was developed and

constructed for the specific purpose of optical and NIR

interferometry.

From HST to its

successor. The Hubble Space Telescope has turned out to be

the most successful astronomical observatory of all time (although

it certainly was also the most expensive one).¹ The importance of HST for

extragalactic astronomy is not least based on the characteristics

of galaxies at high redshifts. Before the launch of HST, it was not

known that such objects are small and therefore have, at a given

flux, a high surface brightness. This demonstrates the advantage of

the high resolution that is achieved with HST. Several service

missions to the observatory led to the installation of new and more

powerful instruments which have continuously improved the capacity

of HST. With the Space Shuttle program abandoned, no more service

to Hubble is possible, and it is only a matter of time before

essential parts will start to malfunction.

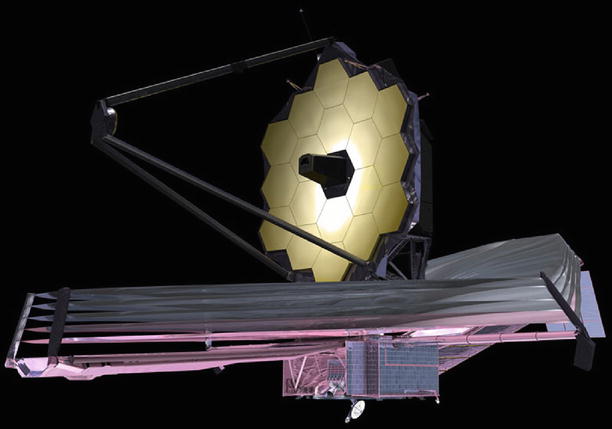

Fig. 11.3

Artist’s impression of the 6.5-m James Webb

Space Telescope. Like the mirrors of the Keck telescopes, its mirror is segmented;

it is protected against Solar radiation by a giant heat shield

the size of a tennis court. Keeping the mirror and the

instruments permanently in the shadow will permit passive cooling

to a temperature of ∼ 35 K. This will be ideal for conducting

observations at NIR wavelengths, with unprecedented sensitivity.

Credit: NASA

Fortunately, the successor of HST is already at

an advanced stage of construction and is currently scheduled to be

launched in 2018. This Next Generation Space Telescope (which was

named James Webb Space Telescope—JWST; see Fig. 11.3) will have a mirror

of 6.5-m diameter and therefore will be substantially more

sensitive than HST. Furthermore, JWST will be optimized for

observations in the NIR (1–5 μm) and thus be able, in particular,

to observe sources at high redshifts whose stellar light is

redshifted into the NIR regime of the spectrum.
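
The gain in light-gathering power can be estimated from the mirror diameters alone. The following is a minimal Python sketch that compares geometric collecting areas. It rests on assumptions: the 2.4-m diameter of the HST primary mirror is not quoted in this section, and central obstruction, segment gaps, and instrument efficiencies are ignored. The function names are purely illustrative.

```python
import math

JWST_DIAMETER_M = 6.5  # quoted in the text
HST_DIAMETER_M = 2.4   # assumption: not quoted in this section


def collecting_area_m2(diameter_m):
    """Geometric area of an unobstructed circular aperture, in m^2."""
    return math.pi * (diameter_m / 2.0) ** 2


def area_ratio(diameter_a_m, diameter_b_m):
    """Ratio of the collecting areas of two circular apertures."""
    return collecting_area_m2(diameter_a_m) / collecting_area_m2(diameter_b_m)


if __name__ == "__main__":
    ratio = area_ratio(JWST_DIAMETER_M, HST_DIAMETER_M)
    print(f"JWST / HST collecting area: {ratio:.1f}")
```

Under these simplifications the ratio is about 7, i.e., JWST collects roughly seven times more light per unit time than HST.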

We hope that JWST will be able to observe the

first galaxies and the first AGNs, i.e., those sources responsible

for reionizing the Universe. Besides a NIR camera, JWST will carry

the first multi-object spectrograph in space, which is optimized

for spectroscopic studies of high-redshift galaxy samples and whose

sensitivity will exceed that of all previous instruments by a huge

factor. Furthermore, JWST will carry a MIR instrument which was

developed for imaging and spectroscopy in the wavelength range

5 μm ≤ λ ≤ 28 μm.

11.2 New facilities

There are research fields where a single

instrument or telescope can yield a breakthrough—an example would

be the determination of cosmological parameters from measurements

of the CMB anisotropies. However, most of the questions in

(extragalactic) astronomy can only be solved by using observations

over a broad range of wavelengths; for example, our understanding

of AGNs would be much poorer if we did not have the panchromatic

view, from the radio regime to TeV energies. Our inventory of

powerful facilities is going to be further improved, as the

following examples should illustrate.

New radio

telescopes. The Square Kilometer Array (SKA) will be the

largest radio telescope in the world and will use a technology

which is quite different from that of current telescopes. For SKA,

the beams of the telescope will be digitally generated on

computers. Such digital radio interferometers not only allow a much

improved sensitivity and angular resolution, but they also enable

us to observe many different sources in vastly different sky

regions simultaneously. SKA will consist of about 3000 15-m dishes

as well as two other types of radio wave receivers, known as

aperture array antennas. Together, the receiving area amounts to

about one square kilometer. The telescopes are spread over a

region ∼ 3000 km in size, yielding an angular resolution of

0.02″ at ν = 1.4 GHz, and are linked by optical

fibers (with a total length of almost 10⁵ km). The

instantaneous field-of-view at frequencies ≳ 1 GHz will

be ∼ 1 deg², increasing to ∼ 200 deg² for

lower frequencies. This huge (in terms of current radio

interferometers) field-of-view is achieved by synthesizing multiple

beams using software. The limits of such instruments are no longer

bound by the properties of the individual antennas, but rather by

the capacity of the computers which analyze the data. SKA will

provide a giant boost to astronomy; for the first time ever, the

achievable number density of sources on the radio sky will be

comparable to or even larger than that in the optical.
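
The angular resolution quoted for SKA follows from the diffraction limit, θ ≈ λ/D, with D taken as the longest baseline. The sketch below evaluates this estimate in Python. The Rayleigh factor 1.22 strictly applies to a filled circular aperture and is used here only as a convenient assumption; for an interferometer the prefactor depends on the array layout and the weighting of the baselines. All function names are illustrative.

```python
import math

C_M_PER_S = 299_792_458.0                   # speed of light
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi   # about 206265


def wavelength_from_frequency(freq_hz):
    """Wavelength in metres for a given frequency in Hz."""
    return C_M_PER_S / freq_hz


def diffraction_limit_arcsec(wavelength_m, aperture_m, factor=1.22):
    """Resolution estimate theta = factor * lambda / D, in arcseconds.

    factor=1.22 is the Rayleigh criterion for a circular aperture;
    it is an assumption when applied to an interferometer baseline.
    """
    return factor * wavelength_m / aperture_m * ARCSEC_PER_RAD


if __name__ == "__main__":
    lam = wavelength_from_frequency(1.4e9)        # about 21 cm
    theta = diffraction_limit_arcsec(lam, 3.0e6)  # 3000 km baseline
    print(f"lambda = {lam * 100:.1f} cm, theta = {theta:.3f} arcsec")
```

For a 3000 km baseline at 1.4 GHz this gives about 0.018″, consistent with the ∼ 0.02″ quoted above.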

SKA is not the first radio telescope of this new

kind. The first one is the Low-Frequency Array (LOFAR),

centered in the Netherlands but with several stations located in

neighboring countries to increase the baseline and thus the angular

resolution. LOFAR, operating at ν ≲ 250 MHz, can be considered as a

pathfinder for the low-frequency part of SKA. LOFAR began its

routine operation at the end of 2012. Other pathfinder

observatories for SKA include the Australian Square Kilometre Array

Pathfinder (ASKAP), and MeerKAT in South Africa.

Fig. 11.4

Artist’s impression of the CCAT telescope,

a planned 25-m sub-millimeter telescope to be built in Chile. At an

altitude of 5600 m, it will be the highest-altitude ground-based

telescope world-wide. Credit: Cornell University &

Caltech

To avoid terrestrial radio emission as much as

possible, the SKA will be constructed in remote places in Australia

and South Africa. The remoteness brings with it several great

challenges—to mention just one, the power supply will most likely

be decentralized, i.e., obtained through Solar panels near the

telescopes to generate electricity. The data rate to be transmitted

is far larger than the current global Internet traffic! To process

the huge data stream, one needs a computer capable of about 100

petaflops; such a computer does not yet exist (at least

not with access for scientists).

The scientific outcome from SKA and its

pathfinders is expected to be truly revolutionary. To mention just

a few: The epoch of reionization will be studied by the redshifted

21-cm hydrogen line; a detailed time- and spatially dependent

picture of the reionization process will be obtained. Normal

galaxies can be studied via their 21-cm and their continuum

(synchrotron) emission out to large redshifts, with an angular

resolution better than that of HST. Since the H I line yields the redshift of the

galaxies, large redshift surveys can be employed for studies of the

large-scale structure, including baryonic acoustic oscillations.

The beam of the interferometer represents the point-spread

function, and is very well known. Weak gravitational lensing

studies using the radio emission from normal galaxies can thus make

use of that knowledge to correct the measured image shapes.
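
The link between observing frequency and redshift for the 21-cm line is ν_obs = ν_rest/(1 + z). The short Python sketch below applies this relation, using the laboratory rest frequency of the H I hyperfine transition (about 1420.4 MHz, a value not quoted in this section). The function names are illustrative.

```python
HI_REST_MHZ = 1420.405751768  # rest frequency of the H I 21-cm line


def hi_redshift(observed_mhz):
    """Redshift at which the 21-cm line is observed at `observed_mhz`."""
    return HI_REST_MHZ / observed_mhz - 1.0


def hi_observed_mhz(z):
    """Observed frequency (MHz) of the 21-cm line emitted at redshift z."""
    return HI_REST_MHZ / (1.0 + z)


if __name__ == "__main__":
    print(f"250 MHz corresponds to z = {hi_redshift(250.0):.2f}")
    print(f"z = 9 is observed at {hi_observed_mhz(9.0):.0f} MHz")
```

With this relation, 250 MHz corresponds to z ≈ 4.7, so an instrument operating at ν ≲ 250 MHz, such as LOFAR, probes neutral hydrogen at redshifts above that value; emission from z = 9 would be observed near 142 MHz.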

New

(Sub-)millimeter telescopes. The Large Millimeter Telescope

(LMT) on the Volcán Sierra Negra, Mexico, is a 50-m radio telescope

that recently went into operation (though in its first phase, only the

inner 32-m diameter of its primary surface will be fully

installed). The LMT will observe in the range

0.85 mm ≤ λ ≤ 4 mm. In

addition to the much increased surface area compared to existing

single-dish telescopes in this wavelength regime, the large

aperture will provide an important step forward in angular

resolution, and thus provide far more accurate positions of

(sub-)mm sources. The Cerro Chajnantor Atacama Telescope (CCAT,

Fig. 11.4) is

a planned 25-m sub-millimeter telescope, to be built close to the

site of ALMA, but at a higher altitude of ∼ 5600 m. This ∼ 600 m

difference in altitude yields a further decrease of the water vapor

column, and thus increases the sensitivity of the observatory.

Equipped with powerful instruments, and a 20 arcmin field-of-view,

CCAT will be able to map large portions of the sky quickly; it is

expected that the CCAT will have a survey speed ∼ 1000 times faster

than the SCUBA-2 camera (see Sect. 1.3.1). CCAT will carry out large

surveys for SMGs over a broad redshift range, and may be able to

probe the earliest bursts of dusty star formation out to

z ∼ 10. CCAT will also be a

powerful telescope for studying the Sunyaev–Zeldovich effect in

galaxy clusters, and thus conduct cluster cosmology surveys. Last

but not least, it will provide targets for observations with the

ALMA interferometer, and in combination with it will allow the joint

reconstruction of compact and extended source components.

The next step in

astrometry: Gaia. The ESA satellite mission Gaia, which was

launched in Dec. 2013, will conduct astrometry of ∼ 10⁹

stars in the Milky Way, and provide very precise positions, proper

motions and parallaxes of these stars. It is thus much more

powerful than the previous astrometry satellite Hipparcos, and will

provide us with a highly detailed three-dimensional map of our

Galaxy, allowing precise dynamical studies, including the study of

the total (dark + luminous) matter in the Milky Way and providing

tests of General Relativity. Gaia will determine the distances to a

large number of Cepheids, thus greatly improving the calibration of

the period-luminosity relation which is one of the key elements for

determining the Hubble constant in the local Universe. In addition,

Gaia is expected to detect ∼ 5 × 10⁵ AGNs.

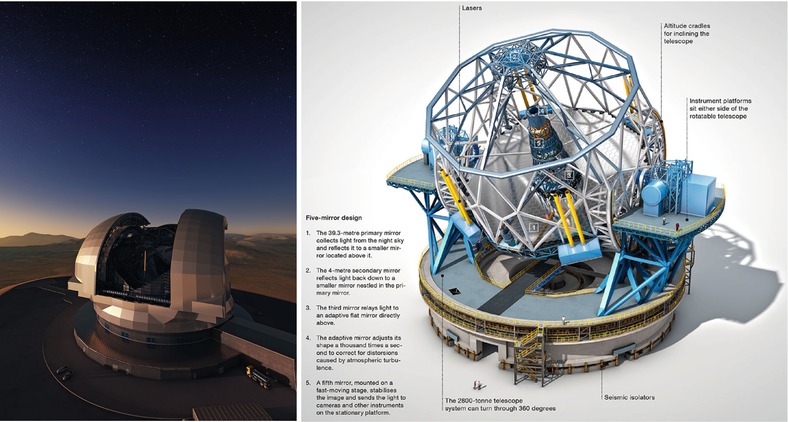

Fig. 11.5

Artist’s impression of the planned European

Extremely Large Telescope, a 39-m telescope. Credit: European

Southern Observatory

Giant

near-IR/optical telescopes. A new era in observational

astronomy will start with the installation of optical telescopes

with an aperture of ∼ 30 m or larger. Three such giant telescope

projects are currently in their planning stage. One of them is

ESO’s European Extremely Large Telescope (E-ELT, see

Fig. 11.5),

with a 39-m primary mirror (this mirror has about the same area as

all the telescopes displayed in Fig. 11.1 together!). It will

be built on Cerro Armazones, Chile, at an altitude of slightly more

than 3000 m, not very far away from Paranal, the mountain that

hosts the VLT. The other two projects are the Thirty Meter

Telescope (TMT), to be built on Mauna Kea in Hawaii, and the Giant

Magellan Telescope (GMT) close to Gemini-South in Chile. All these

telescopes will have segmented mirrors, similar to the Keck

telescopes (Fig. 1.38), and sophisticated adaptive

optics, to achieve the angular resolution corresponding to the

diffraction limit of the telescope. For example, the E-ELT primary

mirror will consist of 798 hexagonal segments, each with a size of

1.4 m. The secondary mirror will have a diameter of 4 m, which by

itself would be a sizeable telescope aperture.

With their huge light-gathering power, these

giant telescopes will open totally new opportunities. One expects

to observe the most distant objects in the Universe, the first

galaxies and AGNs which ionized the Universe. The cosmic expansion

rate can be studied directly, by measuring the change of redshift

of Lyα forest lines with

time. High-resolution spectroscopy will enable studying the time

evolution of chemical enrichment throughout cosmic history.
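
The size of the redshift drift mentioned above can be estimated from the standard relation dz/dt₀ = (1 + z) H₀ − H(z), which follows from differentiating 1 + z = a(t₀)/a(tₑ) with respect to the observer's time. The Python sketch below evaluates it for a flat ΛCDM model. The values H₀ = 70 km/s/Mpc and Ωₘ = 0.3 are illustrative assumptions, not numbers taken from this section, and radiation is neglected.

```python
import math

KM_PER_MPC = 3.0856775814913673e19
SECONDS_PER_YEAR = 365.25 * 86400.0
C_CM_PER_S = 2.99792458e10


def expansion_factor(z, omega_m):
    """E(z) = H(z)/H0 for a flat LambdaCDM model without radiation."""
    return math.sqrt(omega_m * (1.0 + z) ** 3 + (1.0 - omega_m))


def redshift_drift_per_year(z, h0_km_s_mpc=70.0, omega_m=0.3):
    """dz/dt0 = (1 + z) H0 - H(z), in units of 1/yr (assumed cosmology)."""
    h0_per_year = h0_km_s_mpc / KM_PER_MPC * SECONDS_PER_YEAR
    return h0_per_year * ((1.0 + z) - expansion_factor(z, omega_m))


def velocity_drift_cm_s(z, years, h0_km_s_mpc=70.0, omega_m=0.3):
    """Equivalent line shift c * dz / (1 + z) accumulated over `years`."""
    dz = redshift_drift_per_year(z, h0_km_s_mpc, omega_m) * years
    return C_CM_PER_S * dz / (1.0 + z)


if __name__ == "__main__":
    for z in (1.0, 3.0, 5.0):
        shift = velocity_drift_cm_s(z, 10.0)
        print(f"z = {z:.0f}: shift over 10 yr = {shift:+.2f} cm/s")
```

For these assumed parameters the shift at z = 3 amounts to only a few centimeters per second over a decade, which indicates why such a measurement calls for the light-gathering power and spectroscopic stability of a giant telescope.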

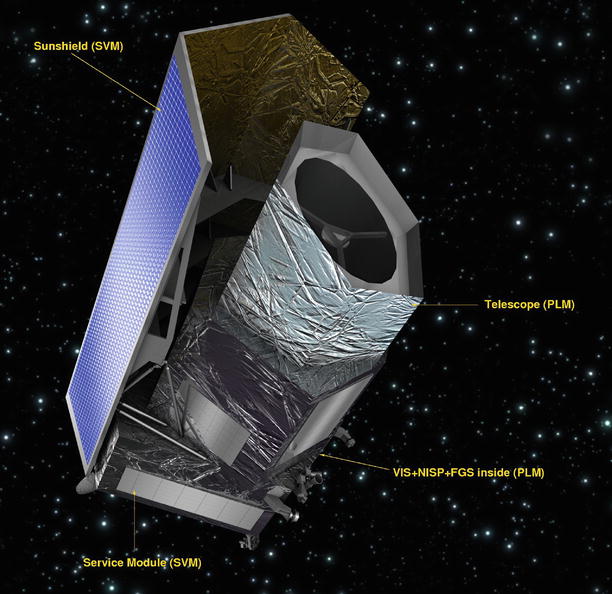

Fig. 11.6

The Euclid satellite, designed to provide

a ∼ 15 000 deg² imaging survey in the optical and

near-IR, together with NIR slitless spectroscopy. Credit: European

Space Agency

Optical/near-IR

wide-field survey telescopes. The huge impact of the SDSS

has demonstrated the versatile use of large surveys in astronomy.

Several multi-band wide-field deep imaging surveys are currently

ongoing, of which we mention two. The Kilo Degree Survey (KiDS), carried

out with the VLT Survey Telescope on Paranal (see Fig. 1.39) and complemented by the

near-IR VIKING survey with the VISTA telescope,

covers ∼ 1500 deg² in nine bands. The Dark Energy

Survey (DES) will image ∼ 5000 deg² with a newly

designed camera on the Blanco Telescope, located at Cerro Tololo

Inter-American Observatory (CTIO) in Chile. Both of these surveys

have a wide range of science goals, including weak lensing and

cosmic shear, the identification of galaxy clusters found at

different wavebands (X-ray surveys, Sunyaev–Zeldovich surveys), and

the large-scale distribution of galaxies and AGN, to name a

few.

However, a revolution in survey astronomy will

occur with the Euclid satellite (Fig. 11.6). Motivated by the

empirical study of the properties of dark energy, Euclid will

observe essentially the full extragalactic sky

(∼ 15 000 deg²) in one broad optical band, and three

near-IR bands, making use of its ∼ 0.5 deg² focal

plane. The optical images will be used for a cosmic shear study and

thus profit from the high resolution images obtainable from space.

The fact that Euclid will be in an orbit around L2 implies that one

can expect a very high stability of the telescope and instruments,

which is an essential aspect for the correction of galaxy images

with respect to PSF effects. The near-IR images are essential for

obtaining photometric redshifts of the sources whose shapes are

used in the lensing analysis. For the same reason, the Euclid data

will be supplemented with images in additional optical bands, to be

obtained with ground-based telescopes. Altogether, we expect to

measure the shapes of some two billion galaxies. In addition,

Euclid will conduct slitless spectroscopy of some 50 million

galaxies over a broad range in redshift, to yield a measurement of

baryonic acoustic oscillations at z > 1. Euclid is scheduled for

launch by the end of this decade.

Whereas the primary science goal of Euclid is

dark energy, the mission will leave an enormous scientific legacy.

Compared to the 2MASS all-sky infrared survey (Fig. 1.52), Euclid is about 7 magnitudes

deeper, and will image almost 1000 times more galaxies in the

near-IR bands. Together with the optical multi-color information,

this data set will indeed provide a giant step in studies of the

cosmos at large.
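
Two of the numbers quoted for Euclid can be put into perspective with simple arithmetic. A magnitude difference Δm corresponds to a flux ratio of 10^(0.4 Δm), and the number of pointings needed for a survey is at least the survey area divided by the field of view. The Python sketch below illustrates both; it neglects overlaps between pointings and any repeated visits, and the function names are illustrative.

```python
import math


def flux_ratio_from_magnitudes(delta_mag):
    """Flux ratio corresponding to a magnitude difference delta_mag."""
    return 10.0 ** (0.4 * delta_mag)


def minimum_pointings(survey_area_deg2, field_of_view_deg2):
    """Lower bound on the number of pointings, ignoring overlaps."""
    return math.ceil(survey_area_deg2 / field_of_view_deg2)


if __name__ == "__main__":
    print(f"7 mag deeper: flux limit lower by x{flux_ratio_from_magnitudes(7.0):.0f}")
    print(f"pointings for 15000 deg^2: {minimum_pointings(15000.0, 0.5)}")
```

Seven magnitudes correspond to a flux limit fainter by a factor of about 630, and tiling 15 000 deg² with a 0.5 deg² focal plane requires at least 30 000 pointings.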

Sometimes nice surprises happen! We have stressed

many times the incredible value of the Hubble Space Telescope for

astronomy. In the summer of 2012, NASA received an offer from the

US National Reconnaissance Office (NRO) intelligence agency for two

telescopes which are very similar to the HST, originally planned as

spy satellites, but never used. These telescopes have a shorter

focal length than HST, allowing a much wider field-of-view. This

offer came in handy, as it fits well into the plan to build a Wide

Field Infrared Space Telescope (WFIRST), operating in the near-IR.

Its planned NIR camera has a field-of-view ∼ 200 times larger than

the WFC3 onboard HST. Its science goals are manifold, including

supernova cosmology, baryonic acoustic oscillations, cosmic shear,

and galaxy evolution studies.

A further ambitious project regarding optical sky

surveys is the Large Synoptic Survey Telescope (LSST), an 8.4-m

telescope in Chile equipped with a huge camera covering

9.6 deg². It is designed to survey half the sky in six

optical bands every 4 days, taking short exposures. After 10 years,

the coadded image from all the short exposures will yield a sky map

to a depth of m ∼ 27.5. The

projected start of operations is 2022.

Apart from the telescopes and instrumentation,

these projects are extremely demanding in terms of data storage and

computing power. To wit, LSST will yield a data rate of ∼ 15 TB per

night, and this raw data has to be reduced within a

day to avoid any serious backlog. It is expected that most of the

resources for this project will be invested into computer power and

software for data storage and analysis.
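
The demands implied by the quoted data rate can be made concrete with a short calculation. The Python sketch below converts ∼ 15 TB per night into the sustained throughput needed to finish the reduction within 24 hours, and into the raw data volume accumulated over 10 years. The figure of 300 observing nights per year and the use of decimal units (1 TB = 10⁶ MB) are assumptions made for illustration only.

```python
def sustained_rate_mb_per_s(tb_per_night, hours_available=24.0):
    """Average throughput (MB/s) needed to process one night's data in time."""
    megabytes = tb_per_night * 1.0e6  # decimal units: 1 TB = 1e6 MB
    return megabytes / (hours_available * 3600.0)


def total_volume_pb(tb_per_night, nights_per_year, years):
    """Accumulated raw data volume in PB (1 PB = 1000 TB)."""
    return tb_per_night * nights_per_year * years / 1000.0


if __name__ == "__main__":
    rate = sustained_rate_mb_per_s(15.0)
    volume = total_volume_pb(15.0, nights_per_year=300, years=10)  # assumed nights
    print(f"sustained rate: {rate:.0f} MB/s, raw volume: {volume:.0f} PB")
```

Under these assumptions the pipeline must sustain roughly 174 MB/s around the clock, and the raw data alone add up to about 45 PB over the survey.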

A new X-ray

all-sky survey. Twenty-five years after Rosat carried out

its all-sky survey, the German-Russian mission Spectrum-X-Gamma

will carry the eROSITA (extended ROentgen Survey with an Imaging

Telescope Array) instrument, an X-ray telescope operating between

0.5 and ∼ 10 keV. eROSITA, expected to be launched at the end of

2015, will be located at L2 and carry out eight full sky surveys.

The coadded sky survey will be ∼ 20 times deeper than the RASS, and

extend to higher photon energies. The all-sky survey is expected to

detect ∼ 10⁵ clusters of galaxies, many of them at

redshifts larger than unity, and ∼ 3 × 10⁶ AGNs. For the

latter, the higher energy band will be particularly useful. This

will not only provide a much improved statistical basis for

studying the AGN population over cosmic time, but also allow us to

map the large-scale structure as traced by AGNs, including the

study of baryonic acoustic oscillations. Together with cluster

cosmology, eROSITA will be of great value for extragalactic

astronomy and cosmology.

The scientific exploitation of the eROSITA data

will depend significantly on the availability of auxiliary data for

the identification and redshift estimates of the detected sources.

The wide-field optical and near-IR imaging surveys described

before—KiDS/VIKING, DES, PanSTARRS, and later, Euclid and LSST—will

be invaluable for the eROSITA analysis. Furthermore, spectrographs

with large multiplexing capabilities will allow spectra to be obtained

for millions of sources in the eROSITA catalog, including the AGNs

and galaxies in the detected clusters.

New windows to the

Universe will be opened. The first gravitational wave

antennas are already in place, and their upgraded versions, to go

into operation around 2016, will probably be able to discover the

signals from relatively nearby supernova explosions or mergers of

compact binaries. With the Laser Interferometer Space Antenna

(LISA), mergers of supermassive black holes will become detectable

throughout the visible Universe, as we mentioned before. Giant

neutrino detectors will open the field of neutrino astronomy and

will be able, for example, to observe processes in the innermost

parts of AGNs.

Theory.

Parallel to these developments in telescopes and instruments,

theory is progressing steadily. The continuously increasing

capacity of computers available for numerical simulations is only

one aspect, albeit an important one. New approaches for modeling,

triggered by new observational results, are of equal importance.

The close connection between theory, modeling, and observations

will become increasingly important since the complexity of data

requires an advanced level of modeling and simulations for their

quantitative interpretation.

Data availability;

virtual observatories. The huge amount of data obtained with

current and future instruments is useful not only for the observers

taking the data, but also for others in the astronomical community.

Realizing this fact, many observatories have set up archives from

which data can be retrieved—essentially by everyone. Space

observatories pioneered such data archives, and a great deal of

science results from the use of archival data. Examples here are

the use of the HST deep fields by a large number of researchers, or

the analysis of serendipitous sources in X-ray images which led to

the EMSS (see Sect. 6.4.5). Together with the fact that

an understanding of astronomical sources usually requires data

taken over a broad range of frequencies, there is a strong

motivation for the creation of virtual observatories: infrastructures

which connect archives containing astronomical data from a large

variety of instruments and which can be accessed electronically. In

order for such virtual observatories to be most useful, the data

structures and interfaces of the various archives need to become

mutually compatible. Intensive activities in creating such virtual

observatories are ongoing; they will doubtlessly play an

increasingly important role in the future.

11.3 Challenges

Understanding

galaxy evolution. One of the major challenges for the next

few years will certainly be the investigation of the very distant

Universe, studying the evolution of cosmic objects and structures

at very high redshift up to the epoch of reionization. To relate

the resulting insights of the distant Universe to those obtained

more locally and thus to obtain a consistent view about our cosmos,

major theoretical investigations will be required as well as

extensive observations across the whole redshift range, using the

broadest wavelength range possible. Furthermore, the new astrometry

satellite Gaia will offer us the unique opportunity to study

cosmology in our Milky Way. With Gaia, the aforementioned stellar

streams, which were created in the past by the tidal disruption of

satellite galaxies during their merging with the Milky Way, can be

verified. New insights gained with Gaia will certainly also improve

our understanding of other galaxies.

Dark

matter. The second major challenge for the near future is

the fundamental physics on which our cosmological model is based.

From observations of galaxies and galaxy clusters, and also from

our determinations of the cosmological parameters, we have verified

the presence of dark matter. Since there seem to be no plausible

astrophysical explanations for its nature, dark matter most likely

consists of new kinds of elementary particles. Two different

strategies to find these particles are currently being followed.

First, experiments aim at directly detecting these particles, which

should also be present in the immediate vicinity of the Earth.

These experiments are located in deep underground laboratories,

thus shielded from cosmic rays. Several such experiments, which are

an enormous technical challenge due to the sensitivity they are

required to achieve, are currently running. They will obtain

increasingly tight constraints on the properties of WIMPs with

respect to their mass and interaction cross-section. Such

constraints will, however, depend on the mass model of the dark

matter in our Galaxy. As a second approach, the Large Hadron

Collider at CERN will continue to search for indications for an

extension of the Standard Model of particle physics, which would

predict the presence of additional particles, including the dark

matter candidate.

Dark

energy. Whereas at least plausible ideas exist about the

nature of dark matter which can be experimentally tested in the

coming years, the presence of a non-vanishing density of dark

energy, as evidenced from cosmology, presents an even larger

mystery for fundamental physics. Though from quantum physics we

might expect a vacuum energy density to exist, its estimated energy

density is tremendously larger than the cosmic dark energy density.

The interpretation that dark energy is a quantum mechanical vacuum

energy therefore seems highly implausible. As astrophysical

cosmologists, we could take the view that vacuum energy is nothing

more than a cosmological constant, as originally introduced by

Einstein; this would then be an additional fundamental constant in

the laws of nature. From a physical point of view, it would be much

more satisfactory if the nature of dark energy could be derived

from the laws of fundamental physics. The huge discrepancy between

the density of dark energy and the simple estimate of the vacuum

energy density clearly indicates that we are currently far from a

physical understanding of dark energy. To achieve this

understanding, we might well assume that a new theory must be

developed which unifies quantum physics and gravity—in a manner

similar to the way other ‘fundamental’ interactions (like

electromagnetism and the weak force) have been unified within the

standard model of particle physics. Deriving such a theory of

quantum gravity turns out to be enormously problematic despite

intensive research over several decades. However, the density of

dark energy is so incredibly small that its effects can only be

recognized on the largest length-scales, implying the necessity of

further astronomical and cosmological experiments. Only

astronomical techniques are able to probe the properties of dark

energy empirically. We have outlined in Sect. 8.8 the most promising ways of

studying the properties of dark energy, and the new facilities

described above will allow us to make essential progress over the

next decade.

Inflation.

Although inflation is currently part of the standard model of

cosmology, the physical processes occurring during the inflationary

phase have not been understood up to now. The fact that different

field-theoretical models of inflation yield very similar

cosmological consequences is an asset for cosmologists: from their

point-of-view, the details of inflation are not immediately

relevant, as long as a phase of exponential expansion occurred. But

the same fact indicates the size of the problem faced in studying

the process of inflation, since different physical models yield

rather similar outcomes with regard to cosmological observables.

Perhaps the most promising probe of inflation is the polarization

of the cosmic microwave background, since it allows us to study

whether, and with what amplitude, gravitational waves were

generated during inflation. Predictions of the ratio between the

amplitudes of gravitational waves and that of density fluctuations

are different in different physical models of inflation. A

successor of the Planck satellite, in the form of a mission which is

able to measure the CMB polarization with sufficient accuracy for

testing inflation, will probably be considered.

Baryon

asymmetry. Another cosmological observation poses an

additional challenge to fundamental physics. We observe baryonic

matter in our Universe, but we see no signs of appreciable amounts

of antimatter. If certain regions in the Universe consisted of

antimatter, there would be observable radiation from

matter-antimatter annihilation at the interface between the

different regions. The question therefore arises, what processes

caused an excess of matter over antimatter in the early Universe?

We can easily quantify this asymmetry—at very early times, the

abundances of protons, antiprotons and photons were all quite

similar, but after proton-antiproton annihilation at T ∼ 1 GeV, a fraction

of ∼ 10⁻¹⁰ (the current baryon-to-photon ratio) was left

over. The generation of this slight asymmetry of the abundance of protons and

neutrons over their antiparticles in the early Universe, often

called baryogenesis, has not been explained in the framework of

the standard model of particle physics. Furthermore, we would like

to understand why the densities of baryons and dark matter are

essentially the same, differing by a mere factor of ∼ 6.

The aforementioned issues are arguably the best

examples of the increasingly tight connection between cosmology and

fundamental physics. Progress in either field can only be achieved

by the close collaboration between theoretical and experimental

particle physics and astronomy.

Sociological

challenges. Astronomy has become Big Science, not only in

the sense that our facilities are getting more expensive, in

parallel to their increased capabilities, but also in terms of the

efforts needed to conduct individual science projects. Although

most research projects are still carried out in small

collaborations, this is changing drastically for some of the most

visible projects. One indication is the growing average number of

authors per publication, which doubled between 1990 and 2006 from 3

to 6, with a clearly increasing trend. Whereas many papers are

authored by fewer than a handful of people, there is an increasing

number of publications with long author lists: the typical H.E.S.S.

publication now has ≳ 200 authors, the Planck papers of order 250.

One consequence of these large collaborations is that a young

postdoc or PhD student may find it more difficult to find her or

his name as lead author, and thus to become better known to the

astrophysical community. We need to cope with this irreversible

trend; other scientific communities, like the particle physicists,

have done so successfully.

Is cosmology on the right track? Finally, and perhaps too late in the

opinion of some readers, we should note again that this book has

assumed throughout that the physical laws, as we know them today,

can be used to interpret cosmic phenomena. We have no real proof

that this assumption is correct, but the successes of this approach

justify this assumption in hindsight. Constraints on possible

variations of physical ‘constants’ with time are becoming

increasingly tight, providing additional justification. If this

assumption had been grossly violated, there would be no reason why

the values of the cosmological parameters, estimated with vastly

different methods and thus employing very different physical

processes, mutually agree. The price we pay for the acceptance of

the standard model of cosmology, which results from this approach,

is high though: the standard model implies that we accept the

existence and even dominance of dark matter and dark energy in the

Universe.

Not every cosmologist is willing to pay this

price. For instance, M. Milgrom introduced the hypothesis that

the flat rotation curves of spiral galaxies are not due to the

existence of dark matter. Instead, they could arise from the

possibility that the Newtonian law of gravity ceases to be valid on

scales of about 10 kpc and larger; on such large scales, and at the correspondingly

small accelerations, the law of gravity has not been tested.

Milgrom’s Modified Newtonian

Dynamics (MOND) is therefore a logically possible

alternative to the postulate of dark matter on scales of galaxies.

Indeed, MOND offers an explanation for the Tully–Fisher relation of

spiral galaxies.
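
The link to the Tully–Fisher relation can be sketched in a few lines. The following assumes the commonly quoted low-acceleration limit of MOND and a point-mass approximation for the galaxy. The symbols $a_0$ (the acceleration scale of the theory, usually cited as of order $10^{-10}\,{\rm m\,s^{-2}}$), $a_{\rm N}$ (the Newtonian acceleration) and $v_{\rm c}$ (the circular velocity) are notation introduced here and do not appear in the text above.

```latex
\begin{align}
a_{\rm N} &= \frac{G M}{r^2}, \qquad
a \simeq \sqrt{a_{\rm N}\,a_0} \quad (a \ll a_0) ,\\
\frac{v_{\rm c}^2}{r} &= \frac{\sqrt{G M a_0}}{r}
\;\;\Longrightarrow\;\;
v_{\rm c}^4 = G M a_0 .
\end{align}
```

The radius drops out of the final expression, so the rotation curve is flat at large $r$ without any dark matter. For a fixed mass-to-light ratio, the result implies $L \propto v_{\rm c}^4$, which has the scaling form of the Tully–Fisher relation.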

There are, however, several reasons why only a

few astrophysicists follow this approach. MOND has an additional

free parameter which is fixed by matching the observed rotation

curves of spiral galaxies with the model, without invoking dark

matter. Once this parameter is fixed, MOND cannot explain the

dynamics of galaxies in clusters without needing additional

matter—dark matter. Thus, the theory has just enough freedom to fix

a problem on one length- (or mass-)scale, but apparently fails on

different scales. We can circumvent the problem again by

postulating warm dark matter, which would be able to fall into the

potential wells of clusters, but not into the shallower ones of

galaxies, thereby replacing one kind of dark matter (CDM) with

another. In addition, the fluctuations of the cosmic microwave

background radiation cannot be explained without the presence of

dark matter.

In fact, the consequences of accepting MOND would

be far reaching: if the law of gravity deviates from the Newtonian

law, the validity of General Relativity would be questioned, since

it contains the Newtonian force law as a limiting case of weak

gravitational fields. General Relativity, however, forms the basis

of our world models. Rejecting it as the correct description of

gravity, we would lose the physical basis of our cosmological

model—and thus the impressive quantitative agreement of results

from vastly different observations that we described in

Chap. 8. The acceptance of MOND therefore

demands an even higher price than the existence of dark matter, but

it is an interesting challenge to falsify MOND empirically.

This example shows that the modification of one

aspect of our standard model has the consequence that the whole

model is threatened: due to the large internal consistency of the

standard model, modifying one aspect has a serious impact on all

others. This does not mean that there cannot be other cosmological

models which can provide as consistent an explanation of the

relevant observational facts as our standard model does. However,

an alternative explanation of a single aspect cannot be considered

in isolation, but must be seen in its relation to the others. Of

course, this poses a true challenge to the promoters of alternative

models: whereas the overwhelming majority of cosmologists are

working hard to verify and to refine the standard model and to

construct the full picture of cosmic evolution, the group of

researchers working on alternative models is small² and thus hardly able to put

together a convincing and consistent model of cosmology. This fact

finds its justification in the successes of the standard model, and

in the agreement of observations with the predictions of this

model.

We have, however, just uncovered an important

sociological aspect of the scientific enterprise: there is a

tendency to ‘jump on the bandwagon’. This results in the vast

majority of research going into one (even if the most promising)

direction—and this includes scientific staff, research grants,

observing time etc. The consequence is that new and unconventional

ideas have a hard time getting heard. Hopefully (and in the view of

this author, very likely), the bandwagon is heading in the right

direction. There are historical examples to the contrary, though—we

now know that Rome is not at the center of the cosmos, nor the

Earth, nor the Sun, nor the Milky Way, despite long epochs when the

vast majority of scientists were convinced of the veracity of these

ideas.

Footnotes

1

The total price tag on the HST project will

probably be on the order of 10 billion US dollars. This is

comparable to the total cost of the Large Hadron Collider and its

detectors. I am convinced that a particle physicist and an

astrophysicist can argue for hours which of the two investments is

more valuable for science, but how should one compare the detection of the

Higgs boson with the manifold discoveries of HST? However, both

the particle physicist and the astrophysicist would easily agree that

the two price tags are a bargain when compared to an estimated

cost of 45 billion US dollars for the B-2 stealth bomber

program.

2

However, there has been a fairly recent increase

in research activity on MOND. This was triggered mainly by the fact

that after many years of research, a theory called TeVeS (for

Tensor-Vector-Scalar field) was invented, containing General

Relativity, MOND and Newton’s law in the respective limits—though

at the cost of introducing three new arbitrary functions.