The origin and fate of the Universe is, for many researchers, the fundamental question. Many answers have been provided over the ages, a few of them built on scientific observations and reasoning. During the last century, important theoretical and experimental breakthroughs followed Einstein’s proposal of the General Theory of Relativity in 1915, with precise and systematic measurements establishing the expansion of the Universe, the existence of the cosmic microwave background, and the abundances of light elements in the Universe. The fate of the Universe can be predicted from its energy content; however, although the chemical composition of the Universe and the physical nature of its constituent matter have occupied scientists for centuries, we do not yet know this energy content well enough.

We are made of protons, neutrons, and electrons, combined into atoms in which most of the energy is concentrated in the nuclei (baryonic matter), and we know a few more particles (photons, neutrinos, ...) accounting for a limited fraction of the total energy of atoms. However, the motion of stars in galaxies, as well as results about background radiation and the large-scale structure of the Universe (all of which will be discussed in the rest of this chapter), are inconsistent with the presently known laws of physics, unless we assume that a new form of matter exists. This matter is not visible, showing little or no interaction with photons: we call it “dark matter”. It is, however, important in the composition of the Universe, because its energy is about a factor of five larger than the energy of baryonic matter.

Recently, the composition of the Universe has become even more puzzling, as observations imply an accelerated expansion. Such an acceleration can be explained by a new, unknown, form of energy—we call it “dark energy”—generating a repulsive gravitational force. Something is ripping the Universe apart.

The current view on the distribution of the total budget between these forms of energy is shown in Fig. 1.8. Note that we are facing a new Copernican revolution: we are not made of the same matter that most of the Universe is made of. Moreover, the Universe displays a global behavior difficult to explain, as we shall see in Sect. 8.1.1.

Today, at the beginning of the twenty-first century, the Big Bang model with a large fraction of dark matter (DM) and dark energy is widely accepted as “the standard model of cosmology,” but no one knows what the “dark” part really is, and thus the Universe and its ultimate fate remain basically unknown.

8.1 Experimental Cosmology

Wavelength shifts observed in spectra of galaxies depending on their distance. From J. Silk, “The Big Bang,” Times Books 2000

8.1.1 The Universe Is Expanding

In the Hubble law, $v = H_0\, d$, the proportionality constant

$$H_0 \simeq 68\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$$

is the so-called Hubble constant (we shall see that it is not at all constant and can change during the history of the Universe), which is often expressed as a function of a dimensionless parameter h defined as

$$h = \frac{H_0}{100\ \mathrm{km\,s^{-1}\,Mpc^{-1}}}\,.$$

A redshift occurs whenever $z > 0$, which is the case for the large majority of galaxies. There are notable exceptions ($z < 0$, a blueshift), such as M31, the nearby Andromeda galaxy, explained by a large intrinsic velocity (peculiar velocity) oriented toward us.
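As an illustration of the low-redshift Hubble law, the short Python sketch below computes z from an observed and an emitted wavelength, using the standard definition $z = (\lambda_{\rm obs} - \lambda_{\rm emit})/\lambda_{\rm emit}$ (assumed here, since the defining equation is not shown in this excerpt), and converts it into a distance with $v \simeq cz$ and an assumed $H_0 = 68$ km s$^{-1}$ Mpc$^{-1}$. The wavelengths are hypothetical example values, and the linear conversion is only meaningful for $z \ll 1$.

```python
C_KM_S = 299_792.458  # speed of light [km/s]

def redshift(lambda_obs, lambda_emit):
    """z = (lambda_obs - lambda_emit) / lambda_emit; z < 0 means a blueshift."""
    return (lambda_obs - lambda_emit) / lambda_emit

def low_z_distance_mpc(z, h0=68.0):
    """Hubble-law distance d = v / H0 with v ~ c z; only meaningful for z << 1."""
    return C_KM_S * z / h0

# Hypothetical example: the H-alpha line (rest wavelength 656.28 nm) observed at 662.85 nm
z = redshift(662.85, 656.28)
print(f"z = {z:.4f}, v ~ {C_KM_S * z:.0f} km/s, d ~ {low_z_distance_mpc(z):.1f} Mpc")
print(f"h = {68.0 / 100.0:.2f}")
```

A negative z, as for M31, only signals a blueshift; in that case the peculiar velocity dominates and the distance formula should not be applied.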

Wavelength shifts were first observed by the US astronomer James Keeler at the end of the nineteenth century in the spectrum of the light reflected by the rings of Saturn, and later, at the beginning of the twentieth century, by the US astronomer Vesto Slipher in the spectral lines of several galaxies. By 1925 spectral lines had been measured for around 40 galaxies.

For small z, $v \simeq cz$ and the Hubble law becomes

$$cz \simeq H_0\, d\,.$$

This relation is not valid for high-redshift objects: objects with z as high as 11 have been observed in the last years, and the list of the most distant known objects comprises more than 100 entries, including galaxies (the most abundant category), black holes, and even stars. On the other hand, high-redshift supernovae (typically up to $z \sim 1$) have been extensively studied. From these studies an interpretation of the expansion based on special relativity is clearly excluded: one has to invoke general relativity.

In terms of general relativity (see Sect. 8.2) the observed redshift is not due to any movement of the cosmic objects but to the expansion of the proper space between them. This expansion has no center: an observer at any point of the Universe will see the expansion in the same way, with all objects in all directions receding with radial velocities given by the same Hubble law and not limited by the speed of light (in fact, beyond a certain redshift, radial velocities are, in a large range of cosmological models, higher than c): it is the distance scale in the Universe that is changing.

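The (proper) distance between the objects ($d_p$) can be written as the product of the scale factor $a(t)$ and a distance ($r$) that does not change with time (comoving distance): $d_p = a(t)\, r$. Then

$$v = \dot d_p = \dot a\, r = \frac{\dot a}{a}\, d_p = H\, d_p\,,$$

where $H = \dot a/a$ is the Hubble parameter, whose present value is $H_0$.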

The velocity–distance relation measured by Hubble (the original “Hubble plot”). From E. Hubble, Proceedings of the National Academy of Sciences 15 (1929) 168

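The time evolution of the expansion rate is described by the deceleration parameter $q$ (if $q > 0$ the expansion slows down), defined as

$$q = -\frac{\ddot a\, a}{\dot a^{2}}\,,$$

whose present value is denoted $q_0$.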

However, in an expanding Universe the computation of the distance is much more subtle. Various distance measures are usually defined between two objects, in particular the proper distance $d_p$ and the luminosity distance $d_L$.

- The proper distance $d_p$ is defined as the length measured on the spatial geodesic connecting the two objects at a fixed time (a geodesic is defined to be a curve whose tangent vectors remain parallel if they are transported along it; geodesics are, locally, the shortest paths between points in space, and describe locally the infinitesimal path of a test inertial particle). It can be shown (see Ref. [F8.2]) that

  $$d_p \simeq \frac{c}{H_0}\, z\left[1 - \frac{1+q_0}{2}\, z\right] \tag{8.13}$$

  and for small z the usual linear Hubble law is recovered.
- The luminosity distance $d_L$ is defined as the distance that is experimentally determined using a standard candle, assuming a static and Euclidean Universe as noted above:

  $$d_L = \sqrt{\frac{L}{4\pi f}}\,, \tag{8.14}$$

  where L is the intrinsic luminosity of the source and f the measured flux.

The relation between $d_p$ and $d_L$ depends on the curvature of the Universe (see Sect. 8.2.3). Even in a flat (Euclidean) Universe (see Sect. 8.2.3 for a formal definition; for the moment, we rely on an intuitive one, and think of flat space as a space in which the sum of the internal angles of a triangle is always 180°), the flux of light emitted by an object with redshift z and received at Earth is attenuated by a factor $(1+z)^2$, due to the dilation of time ($\Delta t_{\rm obs} = (1+z)\,\Delta t_{\rm emit}$) and the increase of the photon’s wavelength ($\lambda_{\rm obs} = (1+z)\,\lambda_{\rm emit}$). Then, if the Universe is basically flat,

$$d_L = d_p\,(1+z) \simeq \frac{c}{H_0}\, z\left[1 + \frac{1-q_0}{2}\, z\right].$$

To be sensitive to the second-order term, and thus to $q_0$, one needs to extend the range of distances in the Hubble plot by a large amount. New and brighter standard candles are needed.
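To see why brighter standard candles at larger distances are needed, the following Python sketch (an illustration, not the analysis used by the supernova teams) evaluates the second-order expression $d_L \simeq (c/H_0)\,z\,[1 + (1-q_0)z/2]$ for two illustrative values of the deceleration parameter, $q_0 = +0.5$ and $q_0 = -0.55$, and converts the difference into magnitudes through the standard distance modulus $\mu = 5\log_{10}(d_L/10\ {\rm pc})$ (a relation assumed here, not stated in this excerpt). $H_0 = 68$ km s$^{-1}$ Mpc$^{-1}$ is also assumed.

```python
import math

C_KM_S = 299_792.458  # speed of light [km/s]

def luminosity_distance_mpc(z, q0, h0=68.0):
    """Second-order expansion d_L ~ (c/H0) z [1 + (1 - q0) z / 2] (small z only)."""
    return (C_KM_S / h0) * z * (1.0 + 0.5 * (1.0 - q0) * z)

def distance_modulus(d_l_mpc):
    """mu = m - M = 5 log10(d_L / 10 pc), with d_L given in Mpc."""
    return 5.0 * math.log10(d_l_mpc * 1.0e6 / 10.0)

def delta_mu(z, q0_a, q0_b):
    """Magnitude difference between two deceleration scenarios at the same z."""
    mu_a = distance_modulus(luminosity_distance_mpc(z, q0_a))
    mu_b = distance_modulus(luminosity_distance_mpc(z, q0_b))
    return mu_a - mu_b

# Illustrative values: q0 = +0.5 (decelerating) versus q0 = -0.55 (accelerating)
for z in (0.01, 0.05, 0.1, 0.3, 0.5):
    print(f"z = {z:4.2f}: delta mu = {delta_mu(z, -0.55, 0.5):.3f} mag")
```

With these inputs the two scenarios differ by only about 0.01 mag at z = 0.01 but by roughly 0.45 mag at z = 0.5. Keep in mind that the truncated expansion becomes unreliable as z approaches 1.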

8.1.2 Expansion Is Accelerating

Type Ia supernovae have revealed themselves to be an optimal option to extend the range of distances in the Hubble plot. Supernovae Ia occur whenever, in a binary system formed by a white dwarf (a compact, Earth-size stellar end product with a mass close to the solar mass) and another star (for instance a red giant, a luminous giant star in a late phase of stellar evolution), the white dwarf accretes matter from its companion, reaching a total critical mass of about 1.4 solar masses. At this point a nuclear fusion reaction starts, leading to a gigantic explosion (with a luminosity about $10^5$ times larger than that of the brightest Cepheids; see Fig. 8.3 for an artistic representation).

The analysis of the supernova data leads to negative values of $q_0$, meaning that, contrary to what was expected, the expansion of the Universe is nowadays accelerating.

Artistic representation of the formation and explosion of a supernova Ia

(Image from A. Hardy, David A. Hardy/www.astroart.org)

Left: The “Hubble plot” obtained by the “High-z Supernova Search Team” and by the “Supernova Cosmology Project.” The lines represent the prediction of several models with different energy contents of the Universe (see Sect. 8.4). The best fit corresponds to an accelerating expansion scenario. From “Measuring Cosmology with Supernovae,” by Saul Perlmutter and Brian P. Schmidt; Lecture Notes in Physics 2003, Springer. Right: an updated version by the “Supernova Legacy Survey” and the “Sloan Digital Sky Survey” projects, M. Betoule et al. arXiv:1401.4064

For an object of known physical size $D$ observed under a small angle $\delta\theta$, the distance can be obtained by the simple formula

$$d_A = \frac{D}{\delta\theta}\,;$$

$d_A$ is known as the angular diameter distance. In a curved and/or expanding Universe $d_A$ does not coincide with the proper ($d_p$) and the luminosity ($d_L$) distances defined above, but it can be shown (see Ref. [8.2]) that

$$d_A = \frac{d_L}{(1+z)^2}\,.$$

The correlation function $\xi(r)$ between pairs of galaxies is just the excess probability that the two galaxies are at the distance r, and thus a sharp peak in $\xi(r)$ will correspond, in its Fourier transform, to an oscillation spectrum with a well-defined frequency.
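The link between a sharp peak in $\xi(r)$ and an oscillating spectrum can be checked numerically. The Python sketch below is a toy model (assumptions: $\xi(r)$ consists only of a Gaussian peak at a hypothetical $r_0 = 150$ Mpc with a 5 Mpc width, and has no smooth component). It computes the isotropic three-dimensional Fourier transform $P(k) = 4\pi\int r^2\,\xi(r)\,\frac{\sin kr}{kr}\,dr$ and locates the sign changes of $P(k)$, which should be spaced by roughly $\pi/r_0$.

```python
import numpy as np

R0 = 150.0     # assumed position of the peak in xi(r) [Mpc] (illustrative)
SIGMA = 5.0    # assumed width of the peak [Mpc]

r = np.linspace(1.0, 400.0, 20_000)            # pair separations [Mpc]
xi = np.exp(-0.5 * ((r - R0) / SIGMA) ** 2)    # toy xi(r): only the sharp peak

def power_spectrum(k):
    """Isotropic 3D Fourier transform P(k) = 4 pi * int r^2 xi(r) sin(kr)/(kr) dr."""
    integrand = r**2 * xi * np.sinc(k * r / np.pi)   # np.sinc(x) = sin(pi x)/(pi x)
    # trapezoidal rule written out explicitly (independent of NumPy version)
    return 4.0 * np.pi * np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(r))

k = np.linspace(0.005, 0.1, 2_000)                  # wavenumbers [1/Mpc]
pk = np.array([power_spectrum(ki) for ki in k])

# Sign changes of P(k) trace the oscillation; for a narrow peak they sit near k = n pi / R0
crossings = k[:-1][np.sign(pk[:-1]) != np.sign(pk[1:])]
spacing = np.diff(crossings).mean()
print("zero crossings [1/Mpc]:", np.round(crossings, 4))
print(f"mean spacing {spacing:.4f} 1/Mpc vs pi/R0 = {np.pi / R0:.4f} 1/Mpc")
```

Moving the assumed peak position changes the oscillation period in inverse proportion, which is what makes the peak measurable from the spectrum.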

8.1.2.1 Dark Energy

There is no classical explanation for the accelerated expansion of the Universe. A new form of energy is invoked, permeating space and exerting a negative pressure. This kind of energy can be described in the general theory of relativity (see later) and associated, e.g., with a “cosmological constant” term $\Lambda$; from a physical point of view, it corresponds to a “dark” energy component, which, to the present knowledge, has the largest energy share in the Universe.

In Sect. 8.4 the current overall best picture able to accommodate all present experimental results (the so-called $\Lambda$CDM model) will be discussed.

8.1.3 Cosmic Microwave Background

In 1965 Penzias and Wilson,3 two radio astronomers working at Bell Laboratories in New Jersey, discovered by accident that the Universe is filled with a mysterious isotropic and constant microwave radiation corresponding to a blackbody temperature around 3 K.

Their measurement was made at a wavelength of 7.35 cm, while the spectrum peaks in the millimeter range. To fully measure the energy density spectrum it is necessary to go above the Earth’s atmosphere, which absorbs much of the radiation at the shorter wavelengths. These measurements were eventually performed in several balloon and satellite experiments. In particular, the Cosmic Background Explorer (COBE), launched in 1989, was the first to show that in the 0.1 to 5 mm range the spectrum, after correction for the proper motion of the Earth, is well described by the Planck blackbody formula

$$\varepsilon(\nu)\, d\nu = \frac{8\pi h}{c^3}\,\frac{\nu^3\, d\nu}{e^{h\nu/kT} - 1}\,,$$

where h is the Planck constant and k is the Boltzmann constant. Other measurements at longer wavelengths confirmed that the cosmic microwave background (CMB) spectrum is well described by the spectrum of a single-temperature blackbody (Fig. 8.5) with a mean temperature of

$$T = (2.7255 \pm 0.0006)\ \mathrm{K}\,.$$
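As a numerical check of the blackbody description, the Python sketch below evaluates the Planck energy density per unit frequency for $T = 2.7255$ K, finds the frequency at which it peaks, and computes the photon number density of a blackbody, $n_\gamma = (2\zeta(3)/\pi^2)(kT/\hbar c)^3$ (a standard result, assumed here). The constants are CODATA values typed in by hand; the frequency grid is an arbitrary choice.

```python
import numpy as np

H = 6.62607015e-34              # Planck constant [J s]
C = 2.99792458e8                # speed of light [m/s]
K_B = 1.380649e-23              # Boltzmann constant [J/K]
K_B_EV = 8.617333262e-5         # Boltzmann constant [eV/K]
HBAR_C_EV_CM = 1.973269804e-5   # hbar c [eV cm]
ZETA3 = 1.2020569031595942      # Riemann zeta(3)

T_CMB = 2.7255  # mean CMB temperature [K]

def energy_density_per_hz(nu, temperature):
    """Planck formula eps(nu) = (8 pi h / c^3) nu^3 / (exp(h nu / k T) - 1), in J m^-3 Hz^-1."""
    return 8.0 * np.pi * H / C**3 * nu**3 / np.expm1(H * nu / (K_B * temperature))

def photon_number_density_cm3(temperature):
    """n_gamma = (2 zeta(3) / pi^2) (k T / hbar c)^3, in photons per cm^3."""
    return 2.0 * ZETA3 / np.pi**2 * (K_B_EV * temperature / HBAR_C_EV_CM) ** 3

nu = np.linspace(1.0e9, 1.0e12, 200_000)   # 1 GHz to 1 THz
nu_peak = nu[np.argmax(energy_density_per_hz(nu, T_CMB))]
n_gamma = photon_number_density_cm3(T_CMB)
print(f"eps(nu) peaks at {nu_peak / 1e9:.0f} GHz, i.e. c/nu = {C / nu_peak * 1e3:.2f} mm")
print(f"n_gamma = {n_gamma:.0f} photons per cm^3")
```

The position of the maximum depends on whether the spectrum is written per unit frequency or per unit wavelength: $\varepsilon(\nu)$ peaks near 160 GHz ($c/\nu \approx 1.9$ mm), while the per-wavelength spectrum peaks near 1.06 mm.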

The CMB intensity plot as measured by COBE and other experiments

8.1.3.1 Recombination and Decoupling

In the Big Bang model the expanding Universe cools down, going through successive stages of lower energy density (temperature) and more complex structures. Radiation materializes into pairs of particles and antiparticles, which, in turn, give origin to the existing objects and structures in the Universe (nuclei, atoms, planets, stars, galaxies, ...). In this context, the CMB is the electromagnetic radiation left over when electrons and protons combine to form neutral atoms (the so-called recombination phase). After this stage, the absence of charged matter allows photons to be basically free of interactions and to evolve independently in the expanding Universe (photon decoupling).

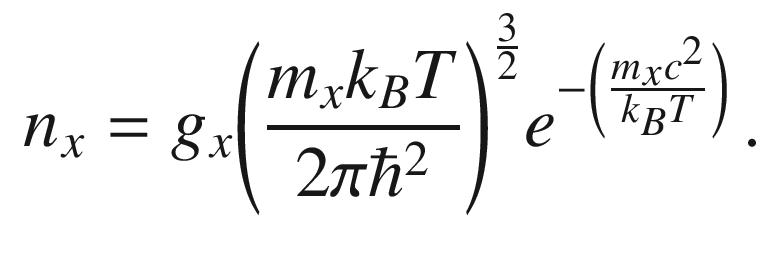

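At the temperatures relevant for recombination (treating $e^-$, $p$, and $^1$H as nonrelativistic particles) the number density of electrons, protons, and hydrogen atoms may be approximated by the Maxwell–Boltzmann distribution (see Sect. 8.3.1)

$$n_x = g_x\left(\frac{m_x kT}{2\pi\hbar^2}\right)^{3/2}\exp\left(\frac{\mu_x - m_x c^2}{kT}\right),$$

where $\mu_x$ is the chemical potential and $g_x$ is a statistical factor accounting for the spin (the subscript x refers to each particle type). The relative abundances of hydrogen atoms, protons, and electrons can then be approximately modeled by the Saha equation

$$\frac{n_{\rm H}}{n_p\, n_e} = \frac{g_{\rm H}}{g_p\, g_e}\left(\frac{m_{\rm H}}{m_p\, m_e}\right)^{3/2}\left(\frac{kT}{2\pi\hbar^2}\right)^{-3/2}\exp\left(\frac{Q}{kT}\right) \simeq \left(\frac{m_e kT}{2\pi\hbar^2}\right)^{-3/2}\exp\left(\frac{Q}{kT}\right),$$

where $Q = (m_p + m_e - m_{\rm H})\,c^2 \simeq 13.6$ eV is the hydrogen binding energy (in the last step $g_{\rm H}/(g_p g_e) = 1$ and $m_{\rm H} \simeq m_p$ were used). Introducing the fractional ionization $X = n_p/(n_p + n_{\rm H})$ ($X = 1$ for complete ionization, whereas $X = 0$ when all protons are inside neutral atoms), and assuming charge neutrality ($n_e = n_p$), the Saha equation can be rewritten as

$$\frac{1 - X}{X} = n_p\left(\frac{m_e kT}{2\pi\hbar^2}\right)^{-3/2}\exp\left(\frac{Q}{kT}\right).$$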

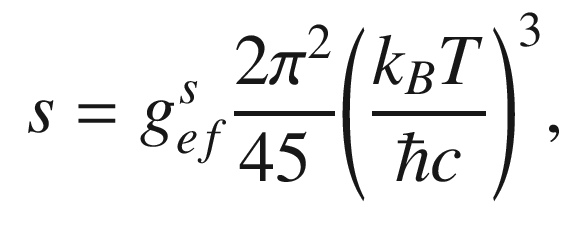

The photon number density $n_\gamma$ follows the usual blackbody distribution, corresponding, as we have seen before, to

$$n_\gamma = \frac{2\,\zeta(3)}{\pi^2}\left(\frac{kT}{\hbar c}\right)^3.$$

The baryon-to-photon ratio is $\eta = n_b/n_\gamma$, where $n_b$ is the total number density of baryons, which in this simple approximation is defined as $n_b = n_p + n_{\rm H}$. Both $n_b$ and $n_\gamma$ evolve independently as $a(t)^{-3}$, where a(t) is the scale factor of the Universe (see Sect. 8.1.1). Thus, η is basically a constant, which can be measured at the present time through the measurement of the content of light elements in the Universe (see Sect. 8.1.4):

$$\eta \simeq 6 \times 10^{-10}\,.$$

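Combining these relations ($n_p = X\, n_b$ and $n_b = \eta\, n_\gamma$), the Saha equation can be written as a function of η and T only:

$$\frac{1 - X}{X^2} = \frac{4\sqrt{2}\,\zeta(3)}{\sqrt{\pi}}\;\eta\left(\frac{kT}{m_e c^2}\right)^{3/2}\exp\left(\frac{Q}{kT}\right) \simeq 3.84\,\eta\left(\frac{kT}{m_e c^2}\right)^{3/2}\exp\left(\frac{Q}{kT}\right),$$

which can be used to determine the recombination temperature $T_{\rm rec}$, assuming $X(T_{\rm rec}) = 0.5$. For instance, $\eta \simeq 6\times 10^{-10}$ results in $kT_{\rm rec} \simeq 0.32$ eV, i.e., $T_{\rm rec} \simeq 3700$ K.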

X as a function of z in the Saha equation. Time on the abscissa increases from left to right (as z decreases).

Adapted from B. Ryden, lectures at ICTP Trieste, 2006

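Since the temperature of the blackbody radiation scales as $T \propto 1/a \propto 1+z$, the ratio $T_{\rm rec}/T_0$, where $T_0$ is the present CMB temperature, gives the factor $1 + z_{\rm rec}$ by which wavelengths have been stretched since recombination. The entire CMB spectrum was expanded by this factor, and it can then be estimated that recombination occurred (see Fig. 8.6) at

$$z_{\rm rec} \simeq \frac{T_{\rm rec}}{T_0} \sim 1400\,.$$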
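The recombination estimate can be reproduced with a few lines of Python. The sketch below assumes the simplified Saha equation in the form $(1-X)/X^2 = 3.84\,\eta\,(kT/m_ec^2)^{3/2}\exp(Q/kT)$ with $Q = 13.6$ eV, an assumed $\eta = 6.1\times10^{-10}$, and $T_0 = 2.7255$ K. It solves the quadratic for X in closed form and bisects on kT to find $X = 0.5$.

```python
import math

ZETA3 = 1.2020569031595942
M_E_C2_EV = 510_998.95     # electron rest energy [eV]
Q_EV = 13.6                # hydrogen binding energy [eV]
K_B_EV = 8.617333262e-5    # Boltzmann constant [eV/K]
T0_K = 2.7255              # present CMB temperature [K]

def saha_rhs(kt_ev, eta):
    """Right-hand side S of (1 - X)/X^2 = S = 3.84 eta (kT/m_e c^2)^(3/2) exp(Q/kT)."""
    coeff = 4.0 * math.sqrt(2.0) * ZETA3 / math.sqrt(math.pi)   # ~3.84
    return coeff * eta * (kt_ev / M_E_C2_EV) ** 1.5 * math.exp(Q_EV / kt_ev)

def ionization_fraction(kt_ev, eta):
    """Physical root of S X^2 + X - 1 = 0, written to avoid cancellation for small S."""
    s = saha_rhs(kt_ev, eta)
    return 2.0 / (1.0 + math.sqrt(1.0 + 4.0 * s))

def recombination_kt_ev(eta, x_rec=0.5, lo=0.1, hi=1.0):
    """Bisection for X(kT) = x_rec; X increases monotonically with kT in this range."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if ionization_fraction(mid, eta) < x_rec:
            lo = mid   # gas still mostly neutral: the answer lies at higher kT
        else:
            hi = mid
    return 0.5 * (lo + hi)

eta = 6.1e-10                     # assumed baryon-to-photon ratio
kt_rec = recombination_kt_ev(eta)
t_rec = kt_rec / K_B_EV
z_rec = t_rec / T0_K - 1.0
print(f"kT_rec = {kt_rec:.3f} eV, T_rec = {t_rec:.0f} K, z_rec = {z_rec:.0f}")
```

With these inputs kT_rec comes out close to 0.32 eV, i.e., a few thousand kelvin, and $z_{\rm rec}$ of order $10^3$. Writing the root as $X = 2/(1+\sqrt{1+4S})$ is algebraically identical to $(\sqrt{1+4S}-1)/2S$ but avoids the loss of precision of the latter when S is tiny.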

Photon decoupling occurs, roughly, when the photon scattering rate Γ equals the expansion rate of the Universe (which is given by the Hubble parameter):

$$\Gamma = n_e\, \sigma_T\, c \simeq H\,,$$

where $n_e$ and $\sigma_T$ are, respectively, the free electron number density and the Thomson cross section. Since $n_e$ can be related to the fractional ionization X(z) and to the baryon number density ($n_e = X(z)\, n_b(z)$), the redshift at which the photon decoupling occurs ($z_{\rm dec}$) is given by

$$X(z_{\rm dec})\; n_b(z_{\rm dec})\; \sigma_T\, c = H(z_{\rm dec})\,.$$

The calculation of $z_{\rm dec}$ is subtle. Both Γ and H evolve during the expansion (for instance, in a matter-dominated flat Universe, as will be discussed in Sect. 8.2, $\Gamma \propto X\, a^{-3}$ and $H \propto a^{-3/2}$). Furthermore, the Saha equation is not valid after recombination, since electrons and photons are no longer in thermal equilibrium. The exact value of $z_{\rm dec}$ thus depends on the specific model for the evolution of the Universe, and the final result is of the order of $z_{\rm dec} \sim 1100$.
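A rough numerical version of the decoupling condition $X(z)\,n_b(z)\,\sigma_T c = H(z)$ is sketched below in Python. It rests on several assumptions, some of which the text itself warns about. X(z) is taken from the Saha equation, which is not valid after recombination. H(z) is that of a flat matter-dominated Universe, $H = H_0\sqrt{\Omega_m}\,(1+z)^{3/2}$, with the assumed values $H_0 = 68$ km s$^{-1}$ Mpc$^{-1}$ and $\Omega_m = 0.31$, and the radiation contribution is ignored. Finally, $n_b = \eta\, n_\gamma$ with an assumed $\eta = 6.1\times10^{-10}$.

```python
import math

ZETA3 = 1.2020569031595942
M_E_C2_EV = 510_998.95       # electron rest energy [eV]
Q_EV = 13.6                  # hydrogen binding energy [eV]
K_B_EV = 8.617333262e-5      # Boltzmann constant [eV/K]
T0_K = 2.7255                # present CMB temperature [K]
SIGMA_T = 6.6524587e-29      # Thomson cross section [m^2]
C_M_S = 2.99792458e8         # speed of light [m/s]
MPC_M = 3.0856775814913673e22

# Illustrative (assumed) cosmological inputs
H0_S = 68.0e3 / MPC_M        # H0 = 68 km/s/Mpc expressed in 1/s
OMEGA_M = 0.31               # matter density parameter
ETA = 6.1e-10                # baryon-to-photon ratio
N_GAMMA0 = 410.7e6           # present photon number density [m^-3] for T0 = 2.7255 K

def saha_x(z):
    """Equilibrium ionization fraction from the Saha equation at redshift z."""
    kt = K_B_EV * T0_K * (1.0 + z)
    s = (4.0 * math.sqrt(2.0) * ZETA3 / math.sqrt(math.pi)) * ETA * (
        (kt / M_E_C2_EV) ** 1.5 * math.exp(Q_EV / kt))
    return 2.0 / (1.0 + math.sqrt(1.0 + 4.0 * s))

def gamma_over_h(z):
    """Gamma / H with Gamma = X n_b sigma_T c and a matter-dominated flat H(z)."""
    n_b = ETA * N_GAMMA0 * (1.0 + z) ** 3
    gamma = saha_x(z) * n_b * SIGMA_T * C_M_S
    hubble = H0_S * math.sqrt(OMEGA_M) * (1.0 + z) ** 1.5
    return gamma / hubble

lo, hi = 800.0, 1500.0       # Gamma/H < 1 at lo and > 1 at hi
for _ in range(60):
    mid = 0.5 * (lo + hi)
    if gamma_over_h(mid) > 1.0:
        hi = mid             # photons still coupled: decoupling happens later (lower z)
    else:
        lo = mid
z_dec = 0.5 * (lo + hi)
print(f"fully ionized Gamma/H at z = 1100: {gamma_over_h(1100.0) / saha_x(1100.0):.0f}")
print(f"Saha-based estimate: z_dec ~ {z_dec:.0f}, X(z_dec) ~ {saha_x(z_dec):.4f}")
```

A fully ionized plasma would still scatter photons about 150 times per Hubble time at z ≈ 1100, so decoupling requires X to drop well below 1%. Under these assumptions the estimate lands slightly above z = 1100, consistent with the order of magnitude quoted in the text.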

A reionization epoch followed, when the Universe was still small enough for the neutral hydrogen formed at recombination to be ionized by the radiation emitted by stars. Still, the scattering probability of CMB photons during this epoch is small. To account for it, the reionization optical depth parameter τ is introduced, in terms of which the scattering probability is given by

$$P = 1 - e^{-\tau}\,.$$
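The relation between the reionization optical depth and the scattering probability is simple enough to evaluate directly. The Python snippet below does so for a few illustrative values of τ (0.054 is roughly the size of recent Planck determinations and is used here only as an example) and compares the result with the small-τ approximation $P \simeq \tau$.

```python
import math

def scattering_probability(tau):
    """Probability that a CMB photon scatters during reionization: P = 1 - exp(-tau)."""
    return -math.expm1(-tau)   # equals 1 - exp(-tau), numerically accurate for small tau

for tau in (0.01, 0.054, 0.1):   # illustrative optical depths
    print(f"tau = {tau:5.3f} -> P = {scattering_probability(tau):.4f} (small-tau approx: {tau:.4f})")
```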

… arcminutes, very close to …

Before decoupling, the Universe is opaque to photons, and to observe it other messengers, e.g., gravitational waves, have to be studied. On the other hand, the measurement of the primordial nucleosynthesis (Sect. 8.1.4) allows us to indirectly test the Big Bang model at times well before the recombination epoch.

8.1.3.2 Temperature Fluctuations

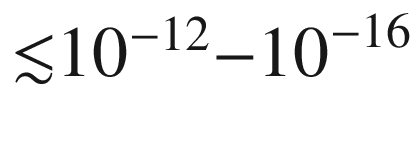

Apart from a dipole component, the temperature fluctuations are of the order of $\delta T/T \sim 10^{-5}$: the observed CMB spectrum is remarkably isotropic.

Sky map (in Galactic coordinates) of CMB temperatures measured by COBE after the subtraction of the emission from our Galaxy. A dipole component is clearly visible.

CMB temperature fluctuations sky map as measured by COBE after the subtraction of the dipole component and of the emission from our Galaxy.

The COBE measurement was confirmed and greatly improved by the Wilkinson Microwave Anisotropy Probe (WMAP), which obtained full-sky maps with an angular resolution of about 0.2°. More recently, the Planck satellite delivered sky maps with three times better resolution and ten times higher sensitivity (Fig. 8.9), also covering a larger frequency range.

CMB temperature fluctuations sky map as measured by the Planck mission after the subtraction of the dipole component and of the emission from our Galaxy.

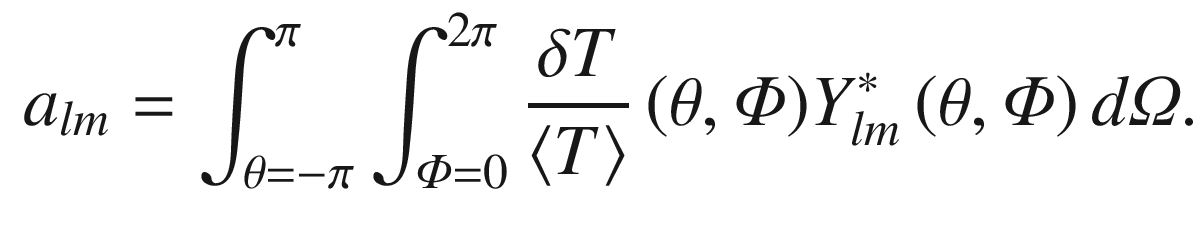

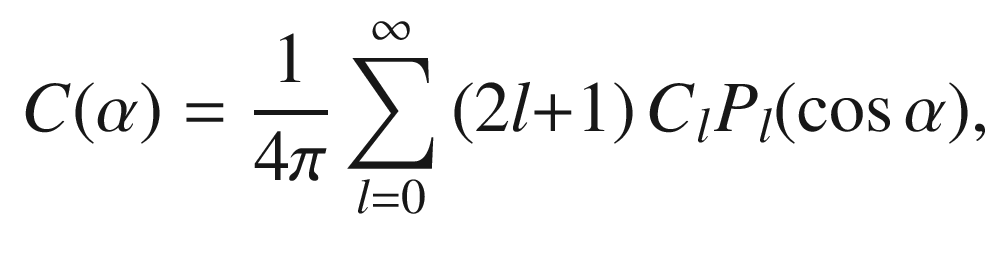

Once these maps are obtained it is possible to establish two-point correlations between any two spatial directions.

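The correlation function between the temperature fluctuations along two directions $\hat n_1$ and $\hat n_2$ separated by an angle θ is defined as

$$C(\theta) = \left\langle \frac{\delta T}{T}(\hat n_1)\,\frac{\delta T}{T}(\hat n_2)\right\rangle = \frac{1}{4\pi}\sum_{l=0}^{\infty}(2l+1)\,C_l\,P_l(\cos\theta)\,,$$

where $P_l$ are the Legendre polynomials and the $C_l$, the multipole moments, are given by the variance of the harmonic coefficients $a_{lm}$:

$$C_l = \left\langle |a_{lm}|^2 \right\rangle,$$

such that

$$\frac{\delta T}{T}(\theta, \phi) = \sum_{l=0}^{\infty}\sum_{m=-l}^{l} a_{lm}\, Y_{lm}(\theta, \phi)\,.$$

The measured angular power spectrum, usually shown as $l(l+1)\,C_l/2\pi$, displays a first, prominent peak at $l \simeq 220$, followed by several smaller peaks.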

Temperature power spectrum from the Planck, WMAP, ACT, and SPT experiments. The abscissa is logarithmic for l less than 30, linear otherwise. The curve is the best-fit Planck model. From C. Patrignani et al. (Particle Data Group), Chin. Phys. C, 40, 100001 (2016)

The first peak, at an angular scale of about 1°, defines the size of the “sound horizon” at the time of last scattering (see Sect. 8.1.4), and the other peaks (acoustic peaks) are extremely sensitive to the specific contents and evolution model of the Universe at that time. The observation of very tiny fluctuations at large scales (much greater than the horizon at the time of last scattering) leads to the hypothesis that the Universe, to be causally connected, went through a very early stage of exponential expansion, called inflation.
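The harmonic decomposition can be illustrated with a short Python sketch that uses NumPy's `numpy.polynomial.legendre.legval` to evaluate $C(\theta) = \frac{1}{4\pi}\sum_l (2l+1)\,C_l\,P_l(\cos\theta)$ for a given set of $C_l$. It also prints the rough correspondence $\theta \sim 180^\circ/l$ between multipoles and angular scales, so that $l \simeq 220$ maps to roughly 0.8°. As a toy check of $C_l = \langle |a_{lm}|^2\rangle$, it recovers a hypothetical $C_l$ from $2l+1$ random real-valued $a_{lm}$. Using real values is a simplification of the complex coefficients on the sphere. The relative spread of such estimates, about $\sqrt{2/(2l+1)}$, is commonly called cosmic variance.

```python
import numpy as np
from numpy.polynomial import legendre

def correlation_function(theta_rad, cl):
    """C(theta) = (1/4pi) * sum_l (2l+1) C_l P_l(cos theta), given C_l for l = 0..lmax."""
    ell = np.arange(len(cl))
    coeffs = (2 * ell + 1) * np.asarray(cl, dtype=float) / (4.0 * np.pi)
    return legendre.legval(np.cos(theta_rad), coeffs)

def estimate_cl(alm):
    """C_l estimated as the mean of |a_lm|^2 over the 2l+1 available values of m."""
    return np.mean(np.abs(alm) ** 2)

# Rough correspondence between multipole and angular scale: theta ~ 180 deg / l
for ell in (2, 10, 220, 1000):
    print(f"l = {ell:4d}  ->  theta ~ {180.0 / ell:6.2f} deg")

# Toy check: draw 2l+1 Gaussian a_lm with variance C_l and recover C_l.
# Real-valued a_lm are a simplification; on the sphere they are complex with
# a_{l,-m} = (-1)^m conj(a_{lm}).
rng = np.random.default_rng(seed=1)
ell, true_cl = 220, 1.0e-10                        # hypothetical values
alm = rng.normal(0.0, np.sqrt(true_cl), size=2 * ell + 1)
cl_hat = estimate_cl(alm)
cosmic_variance = np.sqrt(2.0 / (2 * ell + 1))     # expected relative scatter
print(f"relative error {abs(cl_hat / true_cl - 1):.3f}, expected scatter ~ {cosmic_variance:.3f}")
```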

Anisotropies can also be found by studying the polarization of CMB photons. Indeed, at the recombination and reionization epochs the CMB may be partially polarized by Thomson scattering with electrons. It can be shown that linear polarization may originate from quadrupole temperature anisotropies. In general, the polarization pattern is decomposed into two orthogonal modes, called B-mode (curl-like) and E-mode (gradient-like). The E-mode comes from density fluctuations, while primordial gravitational waves are expected to display both polarization modes. Gravitational lensing of the CMB E-modes may also be a source of B-modes. E-modes were first measured in 2002 by the DASI telescope in Antarctica, and later the Planck collaboration published high-resolution maps of the CMB polarization over the full sky. The detection and the interpretation of B-modes are very challenging, since the signals are tiny and foreground contaminations, such as the emission by Galactic dust, are not always easy to estimate. The arrival angles of CMB photons are smeared, due to gravitational lensing effects, by dispersions that are a function of the integrated mass distribution along the photon paths. It is possible, however, to deduce these dispersions statistically from the observed temperature angular power spectra and/or from polarized E- and B-mode fields. The precise measurement of these dispersions will give valuable information for the determination of the cosmological parameters. It will also help constrain parameters, such as the sum of the neutrino masses or the dark energy content, that are relevant for the growth of structures in the Universe, and evaluate contributions to the B-mode patterns from possible primordial gravitational waves.

The detection of gravitational lensing was reported by several experiments such as the Atacama Cosmology Telescope, the South Pole Telescope, and the POLARBEAR experiment. The Planck collaboration has measured its effect with high significance using temperature and polarization data, establishing a map of the lensing potential.

Some of these aspects will be discussed briefly in Sect. 8.3, but a detailed discussion of the theoretical and experimental aspects of this fast-moving field is far beyond the scope of this book.

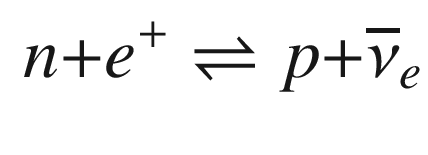

8.1.4 Primordial Nucleosynthesis

The measurement of the abundances of the light elements (D, $^3$He, $^4$He, $^6$Li, $^7$Li) is the third observational “pillar” of the Big Bang model, after the Hubble expansion and the CMB. As proposed, and first computed, by the American physicist Ralph Alpher and the Russian-American physicist George Gamow in 1948, the expanding Universe cools down, and when it reaches temperatures of the order of the nuclear binding energies per nucleon ($\sim 1$ MeV), nucleosynthesis occurs if there are enough protons and neutrons available. The main nuclear fusion reactions are:

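- proton–neutron fusion: $p + n \to {\rm D} + \gamma$;
- deuterium–deuterium fusion: ${\rm D} + {\rm D} \to {}^3{\rm He} + n$, ${\rm D} + {\rm D} \to {}^3{\rm H} + p$, ${\rm D} + {\rm D} \to {}^4{\rm He} + \gamma$;
- other $^4$He formation reactions: ${}^3{\rm H} + {\rm D} \to {}^4{\rm He} + n$, ${}^3{\rm He} + {\rm D} \to {}^4{\rm He} + p$;
- and finally the lithium and beryllium formation reactions (there are no stable nuclei with $A = 5$): ${}^4{\rm He} + {\rm D} \to {}^6{\rm Li} + \gamma$, ${}^4{\rm He} + {}^3{\rm H} \to {}^7{\rm Li} + \gamma$, ${}^4{\rm He} + {}^3{\rm He} \to {}^7{\rm Be} + \gamma$, ${}^7{\rm Be} + n \to {}^7{\rm Li} + p$.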

The absence of stable nuclei with $A = 8$ basically stops the primordial Big Bang nucleosynthesis chain. Heavier nuclei are produced in stellar (up to Fe) or supernova nucleosynthesis.5

Before nucleosynthesis, neutrons and protons are kept in thermal equilibrium by weak interactions (such as $n + \nu_e \leftrightarrow p + e^-$ and $n + e^+ \leftrightarrow p + \bar\nu_e$), and their relative abundance is given by

$$\frac{n_n}{n_p} = \exp\left(-\frac{Q_n}{kT}\right), \qquad Q_n = (m_n - m_p)\,c^2 \simeq 1.29\ \mathrm{MeV}\,;$$

if $kT \gg Q_n$, then $n_n/n_p \simeq 1$. This equilibrium holds as long as the weak interaction rate Γ is greater than the expansion rate of the Universe, $\Gamma > H$. Both Γ and H diminish during the expansion, the former much faster than the latter. Indeed, in a flat Universe dominated by radiation (Sect. 8.2), in natural units,

$$\Gamma \sim G_F^2\, T^5\,, \qquad H \simeq 1.66\,\sqrt{g_*}\,\frac{T^2}{M_{\rm Pl}}\,,$$

where $G_F$ is the Fermi weak interaction constant, $M_{\rm Pl}$ the Planck mass, and $g_*$ the number of degrees of freedom, which depends on the relativistic particle content of the Universe (namely on the number $N_\nu$ of generations of light neutrinos; this dependence, in turn, allows one to set a limit on $N_\nu$). The freeze-out temperature $T_f$, at which $\Gamma \simeq H$, is a little below the MeV scale:

$$kT_f \simeq 0.8\ \mathrm{MeV}\,, \qquad \left.\frac{n_n}{n_p}\right|_{T_f} = \exp\left(-\frac{Q_n}{kT_f}\right) \simeq 0.2\,.$$

After freeze-out, the $n_n/n_p$ ratio decreases slowly because of free neutron decay, as long as the neutrons are not bound inside nuclei, so that

$$\frac{n_n}{n_p}(t) \simeq \left.\frac{n_n}{n_p}\right|_{T_f} \exp\left(-\frac{t}{\tau_n}\right),$$

where $\tau_n \simeq 880$ s is the neutron lifetime.
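The freeze-out and decay argument can be turned into a rough estimate of the $^4$He mass fraction. The Python sketch below rests on several assumptions:

- $kT_f = 0.8$ MeV for $N_\nu = 3$, scaled as $g_*^{1/6}$, which follows from $\Gamma \propto T^5$ and $H \propto \sqrt{g_*}\,T^2$;
- $g_* = 2 + \frac{7}{8}(4 + 2N_\nu)$, counting photons, electrons/positrons, and $N_\nu$ neutrino species;
- a hypothetical onset of nucleosynthesis at $t_{\rm nuc} = 200$ s;
- every neutron surviving at $t_{\rm nuc}$ ends up in $^4$He, so the mass fraction is twice the neutron fraction of all baryons, $Y_p = 2\,n_n/(n_n + n_p)$.

The neutron fraction is tracked directly (decayed neutrons become protons), which is slightly more accurate than applying the exponential decay to the ratio.

```python
import math

Q_N_MEV = 1.293        # (m_n - m_p) c^2 [MeV]
TAU_N_S = 880.0        # neutron lifetime [s]

def g_star(n_nu):
    """Relativistic degrees of freedom: photons + e+/e- + n_nu neutrino species (7/8 for fermions)."""
    return 2.0 + 7.0 / 8.0 * (4.0 + 2.0 * n_nu)

def freeze_out_kt(n_nu, kt_f_ref=0.8):
    """Gamma ~ G_F^2 T^5 = H ~ sqrt(g*) T^2 / M_Pl gives T_f proportional to g*^(1/6).
    kt_f_ref = 0.8 MeV for n_nu = 3 is an assumed normalization."""
    return kt_f_ref * (g_star(n_nu) / g_star(3)) ** (1.0 / 6.0)

def helium_mass_fraction(n_nu=3, t_nuc_s=200.0):
    """Y_p = 2 X_n, with X_n = n_n / (n_n + n_p) the neutron fraction at the (assumed) time t_nuc_s,
    assuming every surviving neutron ends up in 4He."""
    ratio_f = math.exp(-Q_N_MEV / freeze_out_kt(n_nu))              # n_n/n_p at freeze-out
    x_n = ratio_f / (1.0 + ratio_f) * math.exp(-t_nuc_s / TAU_N_S)  # free-neutron decay
    return 2.0 * x_n

for n_nu in (2, 3, 4):
    print(f"N_nu = {n_nu}: kT_f = {freeze_out_kt(n_nu):.3f} MeV, Y_p ~ {helium_mass_fraction(n_nu):.3f}")
```

With these inputs $Y_p$ comes out around 0.26 for three neutrino families, in line with a Universe whose baryonic mass is roughly three quarters hydrogen. It grows with $N_\nu$ because a faster expansion freezes the ratio earlier, at a higher temperature; this is the mechanism behind the limit on $N_\nu$.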

The fact that the deuterium binding energy $B_{\rm D}$ is quite small ($B_{\rm D} \simeq 2.22$ MeV) makes photodissociation of the deuterium nuclei possible even at temperatures for which kT is well below $B_{\rm D}$ (the Planck function has a long tail). The relative number of free protons, free neutrons, and deuterium nuclei can be expressed, using a Saha-like equation (Sect. 8.1.3), as follows:

$$\frac{n_{\rm D}}{n_p\, n_n} = 6\left(\frac{m_n kT}{\pi\hbar^2}\right)^{-3/2}\exp\left(\frac{B_{\rm D}}{kT}\right).$$

Expressing $n_p$ as a function of η and $n_\gamma$ ($n_p \sim \eta\, n_\gamma$) and performing an order-of-magnitude estimation, we obtain

$$\frac{n_{\rm D}}{n_n} \sim \eta\left(\frac{kT}{m_n c^2}\right)^{3/2}\exp\left(\frac{B_{\rm D}}{kT}\right),$$

with $n_\gamma$ given by the Planck distribution.

Requiring $n_{\rm D}/n_n \simeq 1$ gives a remarkably stable value of the temperature. In fact, there is a sharp transition at kT of the order of 0.1 MeV: above this value neutrons and protons are basically free; below this value all neutrons are substantially bound, first inside D nuclei and finally inside $^4$He nuclei, provided that there is enough time before the fusion rate of nuclei becomes smaller than the expansion rate of the Universe. Indeed, since the $^4$He binding energy per nucleon is much higher than those of D, $^3$H, and $^3$He, and since there are no stable nuclei with $A = 5$, $^4$He is the favorite final state.
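The deuterium bottleneck can be located numerically in the same spirit. The Python sketch below combines the Saha-like relation for $n_{\rm D}/(n_p n_n)$ with $n_p \simeq \eta\, n_\gamma$. Keeping the numerical factors gives a prefactor $6 \times (2\zeta(3)/\pi^2) \times \pi^{3/2} = 12\zeta(3)/\sqrt{\pi} \simeq 8.1$. This prefactor is an assumption of the sketch, since neutrons and other nuclei are neglected in $n_p$. The code finds the temperature at which $n_{\rm D}/n_n = 1$ for three illustrative values of η.

```python
import math

B_D_MEV = 2.2246               # deuteron binding energy [MeV]
M_N_MEV = 939.565              # neutron rest energy [MeV]
K_B_MEV = 8.617333262e-11      # Boltzmann constant [MeV/K]
ZETA3 = 1.2020569031595942

def deuteron_to_neutron(kt_mev, eta):
    """n_D/n_n ~ (12 zeta(3)/sqrt(pi)) eta (kT/m_n c^2)^(3/2) exp(B_D/kT), using n_p ~ eta n_gamma."""
    coeff = 12.0 * ZETA3 / math.sqrt(math.pi)      # ~8.1
    return coeff * eta * (kt_mev / M_N_MEV) ** 1.5 * math.exp(B_D_MEV / kt_mev)

def deuterium_kt(eta, lo=0.03, hi=0.2):
    """Bisection for n_D/n_n = 1; in this range the ratio decreases as kT increases."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if deuteron_to_neutron(mid, eta) > 1.0:
            lo = mid   # deuterium still favored: the transition lies at higher kT
        else:
            hi = mid
    return 0.5 * (lo + hi)

for eta in (3.0e-10, 6.1e-10, 1.2e-9):   # illustrative baryon-to-photon ratios
    kt_d = deuterium_kt(eta)
    print(f"eta = {eta:.1e}: kT_D = {kt_d * 1e3:.1f} keV (T_D = {kt_d / K_B_MEV:.2e} K)")
```

Under these assumptions the transition comes out at a kT of a few tens of keV and moves by only a few percent when η changes by a factor of four, which illustrates the remarkably stable temperature mentioned in the text. A larger η gives a slightly higher deuterium formation temperature.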

The observed and predicted abundances of $^4$He, D, $^3$He, and $^7$Li. The bands show the 95% CL range. Boxes represent the measured abundances. The narrow vertical band represents the constraints at 95% CL on η (expressed in units of $10^{-10}$) from the CMB power spectrum analysis, while the wider one is the Big Bang nucleosynthesis concordance range. From C. Patrignani et al. (Particle Data Group), Chin. Phys. C, 40, 100001 (2016)

The primordial abundance of $^4$He, $Y_p$, is usually defined as the ratio of the mass density of $^4$He nuclei, $\rho(^4\mathrm{He})$, to the total baryonic mass density, $\rho_B$:

$$Y_p = \frac{\rho(^4\mathrm{He})}{\rho_B}\,.$$

, i.e., that

MeV and 0.1 MeV

is in the range

and around three quarters is made of hydrogen. There are, however, small fractions of D, $^3$He, and $^3$H that did not turn into $^4$He. There are, therefore, also tiny fractions of $^7$Li and $^7$Be that could have formed after the production of $^4$He and before the nuclei were diluted by the expansion of the Universe. Their abundances are quantitatively quite small. Nevertheless, comparing the expected and measured ratios is important, because these ratios are rather sensitive to the ratio of baryons to photons, $\eta$.

In Fig. 8.11 the predicted abundances of $^4$He, D, $^3$He, and $^7$Li computed in the framework of the standard model of Big Bang nucleosynthesis as a function of $\eta$ are compared with measurements (for details see the Particle Data Book).

An increase in $\eta$ will slightly increase the deuterium formation temperature $T_D$, because there are fewer photons per baryon available for the photodissociation of deuterium. Therefore, there is more time for the chain of fusion processes ending with the formation of $^4$He to develop. As a result, the fraction of $^4$He will increase slightly, in relative terms, and the fractions of D and $^3$He will decrease much more significantly, again in relative terms.

The evolution of the fraction of $^7$Li is, on the contrary, not monotonic. It shows a minimum because it is built up from two processes with different behaviors: the fusion of $^4$He and $^3$H is a decreasing function of $\eta$, while the production via $^7$Be is an increasing function of $\eta$.

Apart from the measured value for the fraction of $^7$Li, all the other measurements converge to a common value of $\eta$. Within the uncertainties, this value is compatible with the one indirectly determined by the study of the acoustic peaks in the CMB power spectrum (see Sect. 8.4).
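The link between the neutron abundance and the helium mass fraction can be made explicit with a simple counting argument. The Python sketch below rests on several assumptions:

- Every neutron that survives until deuterium formation ends up in $^4$He, with two neutrons and two protons per nucleus.
- The neutron-proton mass difference and nuclear binding energies are neglected in the mass budget.
- The inputs are approximate figures chosen for illustration: a neutron-proton mass difference of 1.293 MeV, a neutron lifetime of about 880 s, and $n/p \approx 1/7$ at $T_D$. They are not outputs of a full nucleosynthesis network.

```python
import math

# Approximate inputs (assumptions for illustration only)
DELTA_M_MEV = 1.293  # neutron-proton mass difference, MeV
TAU_N_S = 880.0      # neutron lifetime, s


def n_over_p_equilibrium(temperature_mev: float) -> float:
    """Equilibrium ratio n/p = exp(-(m_n - m_p)/T).

    Only meaningful while weak interactions keep n and p in equilibrium.
    """
    return math.exp(-DELTA_M_MEV / temperature_mev)


def n_over_p_after_decay(ratio0: float, elapsed_s: float) -> float:
    """Evolve n/p when free neutrons only decay (n -> p e nu) for elapsed_s seconds."""
    survive = math.exp(-elapsed_s / TAU_N_S)
    return ratio0 * survive / (1.0 + ratio0 * (1.0 - survive))


def helium_mass_fraction(n_over_p: float) -> float:
    """Y_p ~ 2 (n/p) / (1 + n/p): all surviving neutrons are locked into He-4."""
    return 2.0 * n_over_p / (1.0 + n_over_p)


if __name__ == "__main__":
    y_p = helium_mass_fraction(1.0 / 7.0)
    print(f"Y_p ~ {y_p:.3f}; hydrogen mass fraction ~ {1.0 - y_p:.3f}")
```

With $n/p = 1/7$ the counting argument gives $Y_p = 0.25$: about one quarter of the baryonic mass in helium and three quarters in hydrogen. The two auxiliary functions only indicate where such a ratio comes from: a Boltzmann factor set at weak freeze-out, followed by free-neutron decay.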

8.1.5 Astrophysical Evidence for Dark Matter

By the twentieth century, evidence was well established that Newtonian physics applied to visible matter does not describe the dynamics of stars, galaxies, and galaxy clusters.

As a first approximation, one can estimate the mass of a galaxy based on its brightness: brighter galaxies contain more stars than dimmer galaxies. However, there are other ways to assess the total mass of a galaxy. In spiral galaxies, for example, stars rotate in quasi-circular orbits around the center. The rotational speed of peripheral stars depends, according to Newton’s law, on the total mass of the galaxy, and one has thus an independent measurement of this mass. Do these two methods give consistent results?

and for a spherical system $U = -\alpha\,G M^2/R$, where the constant $\alpha$ depends on the density profile and is generally of order one.

A generic astronomical object is not at rest with respect to the Sun, because of the expansion of the Universe, its peculiar motion, etc. Applying the virial theorem to Coma therefore requires the velocity to be measured with respect to its center of mass. Accordingly, $\langle v^2\rangle$ should be replaced by $\langle \Delta v^2\rangle$, the three-dimensional velocity dispersion of the Coma galaxies. Further, only the line-of-sight velocity dispersion $\sigma_\mathrm{los}$ of the galaxies can be measured. Zwicky therefore made the simplest possible assumption, that the velocities of the Coma galaxies are isotropically distributed, so that $\langle \Delta v^2\rangle = 3\,\sigma_\mathrm{los}^2$. As far as the potential energy is concerned, Zwicky assumed that galaxies are uniformly distributed inside Coma, which yields $\alpha = 3/5$. Thus, the virial theorem now reads

$$M_V = \frac{5\,R\,\sigma_\mathrm{los}^2}{G}\,, \tag{8.55}$$

where $M_V$ is the total mass of Coma in terms of galaxies (no intracluster gas was known at that time).

Zwicky was able to measure the line-of-sight velocities of only seven galaxies of the cluster. Assuming them to be representative of the whole galaxy population of Coma, he derived $\sigma_\mathrm{los}$. From the measured angular diameter of Coma and its distance, as derived from the Hubble law, he estimated $R$. Finally, Zwicky supposed that Coma contains about $N = 800$ galaxies, each with a mass $m$ considered typical for galaxies at that time, which gives a total mass $N m$. Eq. (8.55), however, yields a value of $M_V$ much larger than $N m$. To bring $N m$ into agreement with $M_V$, Zwicky had to increase $m$ by a factor of about 200 (he wrote 400). Zwicky thus concluded that the Coma galaxies have a mass about two orders of magnitude larger than expected. His explanation was that these galaxies are totally dominated by dark matter.

Despite this early evidence, it was only in the 1970s that scientists began to explore this discrepancy systematically and that the existence of dark matter started to be quantified. It was realized that the discovery of dark matter would do more than solve the problem of the missing mass in clusters of galaxies: it would also have far-reaching consequences for our prediction of the evolution and fate of the Universe.
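Zwicky's reasoning can be reproduced with a few lines of Python implementing the virial estimate $M_V = 5R\sigma_\mathrm{los}^2/G$ (Eq. 8.55). This is a sketch only. The velocity dispersion and radius used below are round, hypothetical numbers chosen to show orders of magnitude; they are not Zwicky's published values. $G$ is expressed in pc (km/s)$^2/M_\odot$ so that masses come out in solar units.

```python
# Virial mass of a galaxy cluster, assuming isotropic velocities
# (<dv^2> = 3 sigma_los^2) and a uniform-density sphere (alpha = 3/5).

G_PC = 4.30091e-3  # gravitational constant in pc (km/s)^2 / M_sun


def virial_mass(sigma_los_kms: float, radius_pc: float) -> float:
    """M_V = 5 R sigma_los^2 / G, in solar masses."""
    return 5.0 * radius_pc * sigma_los_kms**2 / G_PC


def mass_boost_factor(m_virial: float, n_galaxies: int, m_galaxy: float) -> float:
    """Factor by which the assumed mass per galaxy must grow to match M_V."""
    return m_virial / (n_galaxies * m_galaxy)


if __name__ == "__main__":
    # Hypothetical round numbers, for illustration only
    m_v = virial_mass(sigma_los_kms=1000.0, radius_pc=1.0e6)
    print(f"M_V ~ {m_v:.2e} M_sun")
```

Since $M_V \propto \sigma_\mathrm{los}^2$, a factor of two of uncertainty in a dispersion measured from only a handful of galaxies becomes a factor of four in the mass.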

Important observational evidence for dark matter was provided by the rotation curves of spiral galaxies, of which the Milky Way is one. Spiral galaxies contain a large population of stars on nearly circular orbits around the galactic center. Astronomers have measured the orbital velocities of stars in the peripheral regions of a large number of spiral galaxies. They found that the orbital speeds remain constant, contrary to the expected decrease at larger radii. The mass enclosed within the orbital radius must therefore keep increasing, even in regions beyond the edge of the visible galaxy.
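The contrast between expected and observed rotation curves follows from Newtonian dynamics for circular orbits, $v(r) = \sqrt{G M(r)/r}$. The Python sketch below compares three mass distributions:

- a point-like (Keplerian) mass;
- a singular isothermal halo, with $M(r) \propto r$;
- a pseudo-isothermal halo with the commonly used density $\rho(r) = \rho_0/[1 + (r/r_c)^2]$.

All numerical parameters are hypothetical values chosen for illustration. The pseudo-isothermal form is an assumption here rather than a formula quoted in this paragraph.

```python
import math

G_KPC = 4.30091e-6  # gravitational constant in kpc (km/s)^2 / M_sun


def v_circular(m_enclosed: float, r_kpc: float) -> float:
    """Circular speed (km/s) from v^2 = G M(r) / r."""
    return math.sqrt(G_KPC * m_enclosed / r_kpc)


def m_keplerian(r_kpc: float, m_total: float = 1.0e11) -> float:
    """All mass inside the orbit: M(r) = const, hence v ~ r^(-1/2)."""
    return m_total


def m_singular_isothermal(r_kpc: float, v_flat: float = 200.0) -> float:
    """rho ~ r^-2 gives M(r) = v_flat^2 r / G, hence a flat curve."""
    return v_flat**2 * r_kpc / G_KPC


def m_pseudo_isothermal(r_kpc: float, rho0: float = 1.0e8, r_core: float = 2.0) -> float:
    """Enclosed mass for rho0 / (1 + (r/r_core)^2), rho0 in M_sun / kpc^3."""
    x = r_kpc / r_core
    return 4.0 * math.pi * rho0 * r_core**3 * (x - math.atan(x))


if __name__ == "__main__":
    for r in (5.0, 10.0, 20.0, 40.0):
        print(f"r = {r:5.1f} kpc:"
              f" Kepler {v_circular(m_keplerian(r), r):6.1f} km/s,"
              f" SIS {v_circular(m_singular_isothermal(r), r):6.1f} km/s,"
              f" pseudo-iso {v_circular(m_pseudo_isothermal(r), r):6.1f} km/s")
```

The Keplerian speed halves when the radius grows by a factor of four. The isothermal halos instead tend to a constant speed, which is what the observations show.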

Later, another independent confirmation of Zwicky's findings came from gravitational lensing. Lensing is the bending of light from distant objects by massive bodies along its path to the observer. As such, it provides another method of measuring the total gravitating mass. The mass-to-light ratios obtained in this way for distant clusters match the dynamical estimates of dark matter in those clusters.

8.1.5.1 How Is Dark Matter Distributed in Galaxies?

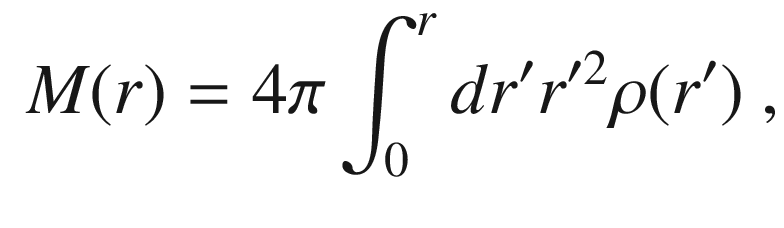

Denoting by $m$ the mass of a star, we have

$$\frac{m\,v^2(r)}{r} = \frac{G\,m\,M(r)}{r^2} \;\;\Longrightarrow\;\; v(r) = \sqrt{\frac{G\,M(r)}{r}}\,, \tag{8.56}$$

where $M(r) = 4\pi\int_0^r \rho(r')\,r'^2\,dr'$ is the total mass inside the radius $r$ of the orbit of the star. For stars beyond the bulk of the visible matter, $M(r)$ is expected to be approximately constant, and Eq. 8.56 then yields $v(r) \propto r^{-1/2}$. This behavior is called Keplerian because it is identical to that of the rotation velocity of the planets orbiting the Sun.

Yet the observations of Rubin and collaborators showed that $v(r)$ rises close to the center, then reaches a maximum and stays constant as $r$ increases, failing to exhibit the expected Keplerian fall-off. According to Eq. 8.56, a constant $v(r)$ requires $M(r) \propto r$. Since $dM(r)/dr = 4\pi r^2 \rho(r)$, where $\rho(r)$ is the mass density, at large enough galactocentric distance the mass density must go like $\rho(r) \propto r^{-2}$. In analogy with the behavior of a self-gravitating isothermal gas sphere, this behavior is called singular isothermal. As a consequence, spiral galaxies turn out to be surrounded, in first approximation, by a singular isothermal halo made of dark matter. To get rid of the central singularity, the halo profile is often assumed to be pseudo-isothermal, with density:

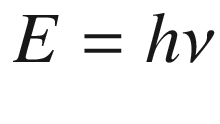

, too small to draw watertight conclusions once errors are taken into account. Luckily, the disks of spirals also contain clouds of neutral atomic hydrogen (HI). Like stars, they move on nearly circular orbits, but the gaseous disk typically extends twice as far as the stellar one, and in some cases even farther.

According to relativistic quantum mechanics, the nonrelativistic ground state ($n = 1$) of hydrogen splits into a pair of levels, depending on the relative orientation of the spins of the proton and the electron. The energy splitting is only about $5.9 \times 10^{-6}$ eV (hyperfine splitting). Both levels are populated thanks to collisional excitation and interaction with the CMB. HI clouds can thus be detected by radio telescopes, since photons emitted in the transition to the ground state have a wavelength of about 21 cm.

In 1985 Van Albada, Bahcall, Begeman and Sancisi performed this measurement for the spiral NGC 3198, whose gaseous disk is more extended than the stellar disk by a factor of 2.7. They could thus construct the rotation curve well beyond the stellar disk. They found that the flat behavior persists, and this was regarded as clear-cut evidence for dark matter halos around spiral galaxies. Measurements now include a large set of galaxies (Fig. 8.12), including the Milky Way (Fig. 8.13).
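The numbers quoted for the hyperfine transition can be cross-checked from the frequency of the line, about 1420.4 MHz (a standard value, used here as an input). The photon energy is $E = h\nu$ and the wavelength is $\lambda = c/\nu$. The short Python sketch below also converts the splitting into a temperature, $E/k_B$. This temperature is far below the CMB temperature of about 2.7 K, which is one way to see why both levels are easily populated.

```python
# Hyperfine ("21 cm") transition of atomic hydrogen
H_EV_S = 4.135667696e-15   # Planck constant, eV s
K_B_EV_K = 8.617333262e-5  # Boltzmann constant, eV / K
C_M_S = 299_792_458.0      # speed of light, m / s
NU_HI_HZ = 1.420405751e9   # hyperfine transition frequency, Hz

energy_ev = H_EV_S * NU_HI_HZ         # energy splitting E = h nu
wavelength_m = C_M_S / NU_HI_HZ       # wavelength lambda = c / nu
temperature_k = energy_ev / K_B_EV_K  # equivalent temperature E / k_B

print(f"E = {energy_ev:.3e} eV, lambda = {wavelength_m * 100:.1f} cm, "
      f"E/k_B = {temperature_k:.3f} K")
```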

Rotation curve of the galaxy M33 (from Wikimedia Commons, public domain)

Rotation curve of the Milky Way (from http://abyss.uoregon.edu)

Profiles obtained in numerical simulations of dark matter including baryons are steeper in the center than those obtained from simulations with dark matter only. The Navarro, Frenk, and White (NFW) profile, often used as a benchmark, follows an $r^{-1}$ distribution at the center. On the contrary, the Einasto profile does not follow a power law near the center of galaxies, is smoother at kpc scales, and seems to fit more recent numerical simulations better. A value of about 0.17 for the shape parameter $\alpha$ in Eq. 8.59 is consistent with present data and simulations. Moore and collaborators have suggested profiles steeper than NFW.

$$
\begin{aligned}
\mathrm{NFW}:\quad & \rho_\mathrm{NFW}(r) = \rho_s\,\frac{r_s}{r}\left(1+\frac{r}{r_s}\right)^{-2}\\
\mathrm{Einasto}:\quad & \rho_\mathrm{Einasto}(r) = \rho_s \exp\left\{-\frac{2}{\alpha}\left[\left(\frac{r}{r_s}\right)^{\alpha}-1\right]\right\}\\
\mathrm{Moore}:\quad & \rho_\mathrm{Moore}(r) = \rho_s\left(\frac{r_s}{r}\right)^{1.16}\left(1+\frac{r}{r_s}\right)^{-1.84}
\end{aligned}
\tag{8.59}
$$

Comparison of the densities as a function of the radius for DM profiles used in the literature, with values adequate to fit the radial distribution of velocities in the halo of the Milky Way. The curve EinastoB indicates an Einasto curve with a different $\alpha$ parameter. From M. Cirelli et al., “PPPC 4 DM ID: A Poor Particle Physicist Cookbook for Dark Matter Indirect Detection”, arXiv:1012.4515, JCAP 1103 (2011) 051
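The profiles of Eq. 8.59 are simple to evaluate numerically. The Python sketch below implements them together with a numerical logarithmic slope, $d\ln\rho/d\ln r$. The slope makes the different central behaviors explicit: $-1$ for NFW, $-1.16$ for Moore, and a slope that tends to zero for Einasto. The scale radius $r_s = 20$ and normalization $\rho_s = 1$ used as defaults are arbitrary, hypothetical values, not fits to the Milky Way.

```python
import math


def rho_nfw(r, rho_s=1.0, r_s=20.0):
    """NFW profile: rho_s (r_s/r) (1 + r/r_s)^-2."""
    x = r / r_s
    return rho_s / (x * (1.0 + x) ** 2)


def rho_einasto(r, rho_s=1.0, r_s=20.0, alpha=0.17):
    """Einasto profile: rho_s exp{-(2/alpha)[(r/r_s)^alpha - 1]}."""
    return rho_s * math.exp(-2.0 / alpha * ((r / r_s) ** alpha - 1.0))


def rho_moore(r, rho_s=1.0, r_s=20.0):
    """Moore profile: rho_s (r_s/r)^1.16 (1 + r/r_s)^-1.84."""
    x = r / r_s
    return rho_s * x ** -1.16 * (1.0 + x) ** -1.84


def log_slope(profile, r, h=1e-4):
    """Central finite difference of ln(rho) with respect to ln(r)."""
    up = math.log(profile(r * math.exp(h)))
    down = math.log(profile(r * math.exp(-h)))
    return (up - down) / (2.0 * h)


if __name__ == "__main__":
    for name, prof in (("NFW", rho_nfw), ("Einasto", rho_einasto), ("Moore", rho_moore)):
        slopes = [log_slope(prof, r) for r in (0.01, 20.0, 1000.0)]
        print(name, ", ".join(f"{s:.2f}" for s in slopes))
```

For the Einasto profile the exact slope is $-2\,(r/r_s)^\alpha$. This is why it never settles on a single power law near the center.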

–, to be compared with the

of our Galaxy. For these galaxies, the ratio between the total mass M, inferred from the velocity dispersion, and the luminosity L, inferred from the count of the number of stars, can be very large. Velocities of single stars are measured with an accuracy of a few kilometers per second thanks to optical measurements. The dwarf spheroidal satellites of the Milky Way would be tidally disrupted if they did not contain enough dark matter. In addition, these objects are not far from us, so a possible DM signal would not be strongly attenuated by distance dimming. Table 8.1 shows some characteristics of dSph satellites of the Milky Way; their positions are shown in Fig. 8.15.

A list of dSph satellites of the Milky Way that may represent the best candidates for DM searches according to their distance from the Sun, luminosity, and inferred M / L ratio

| dSph | Distance (kpc) | L ($10^3\,L_\odot$) | M / L ratio |
|---|---|---|---|
| Segue 1 | 23 | 0.3 | >1000 |
| UMa II | 32 | 2.8 | 1100 |
| Willman 1 | 38 | 0.9 | 700 |
| Coma Berenices | 44 | 2.6 | 450 |
| UMi | 66 | 290 | 580 |
| Sculptor | 79 | 2200 | 7 |
| Draco | 82 | 260 | 320 |
| Sextans | 86 | 500 | 90 |
| Carina | 101 | 430 | 40 |
| Fornax | 138 | 15500 | 10 |

The Local Group of galaxies around the Milky Way (from http://abyss.uoregon.edu/~js/ast123/lectures/lec11.html). The largest galaxies are the Milky Way, Andromeda, and M33, which have a spiral form. Most of the other galaxies are rather small and spheroidal. They closely orbit the large galaxies, as do the irregular Magellanic Clouds. The Clouds are best visible from the Southern hemisphere and lie at a distance of about 120,000 ly, to be compared with the Milky Way radius of about 50,000 ly.

The observations of the dynamics of galaxies and clusters of galaxies, however, are not the only astrophysical evidence of the presence of DM. Cosmological models for the formation of galaxies and clusters of galaxies indicate that these structures fail to form without DM.

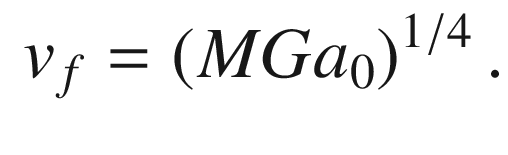

8.1.5.2 An Alternative Explanation: Modified Gravity

The evidence for DM rests on the dependence of $v(r)$ on the mass $M(r)$. This dependence relies on the virial theorem: for a bound system, with zero potential energy defined at infinite distance, the kinetic energy is on average equal to the absolute value of the total energy. The departure from this Newtonian prediction could also be related to a departure from Newtonian gravity.

Alternative theories do not necessarily require dark matter; they replace it with a modification of Newtonian dynamics. In a historical perspective, deviations from expected gravitational dynamics have already led to the discovery of previously unknown matter sources. Indeed, the planet Neptune was discovered after Le Verrier predicted its position in the 1840s, based on detailed observations of the orbit of Uranus and on Newtonian dynamics. In the early twentieth century, apparent disturbances to the expected orbit of Neptune led to the discovery of Pluto. On the other hand, the precession of the perihelion of Mercury could not be quantitatively explained by Newtonian gravity. It was instead accounted for by general relativity, and thus by a modified dynamics.

at small values of the acceleration, and proposes the following modification:

$$F = m\,\mu\!\left(\frac{a}{a_0}\right) a\,,$$

where the interpolating function $\mu(x)$ is positive, smooth, and monotonically increasing. It is approximately equal to its argument when the argument is small compared to unity (deep MOND limit), and approaches unity when the argument is large. The constant $a_0$ is of the order of $10^{-10}$ m/s$^2$.

Far from the center of a galaxy the acceleration is small, $a \ll a_0$, and we can approximate $\mu(a/a_0) \simeq a/a_0$. For a star of mass $m$ orbiting a galaxy of mass $M$, one then has

$$\frac{G M m}{r^2} = m\,\frac{a^2}{a_0}\,, \qquad a = \frac{v^2}{r} \;\;\Longrightarrow\;\; v^4 = G M a_0\,.$$

The rotation curve thus becomes flat at large $r$, with an asymptotic velocity $v$ given by

$$v = \left(G M a_0\right)^{1/4}.$$
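The deep-MOND result $v^4 = G M a_0$ can be checked numerically. The Python sketch below rests on the following assumptions:

- a representative value $a_0 = 1.2 \times 10^{-10}$ m/s$^2$;
- for the full rotation curve, the so-called "simple" interpolating function $\mu(x) = x/(1+x)$. This choice of $\mu$ is an assumption, since the text only requires $\mu$ to interpolate between $x$ and 1;
- a hypothetical galaxy mass, treated as a point at the center.

```python
import math

G_SI = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
M_SUN_KG = 1.989e30  # solar mass, kg
KPC_M = 3.0857e19    # kiloparsec, m
A0 = 1.2e-10         # MOND acceleration scale, m/s^2 (typical quoted value)


def mond_acceleration(g_newton: float, a0: float = A0) -> float:
    """Solve mu(a/a0) a = g_N for a, with mu(x) = x / (1 + x).

    This gives a^2 - g_N a - g_N a0 = 0; the positive root is returned.
    """
    return 0.5 * g_newton + math.sqrt(0.25 * g_newton**2 + g_newton * a0)


def rotation_speed_kms(m_msun: float, r_kpc: float, mond: bool = True) -> float:
    """Circular speed around a point mass, Newtonian or MOND, in km/s."""
    r = r_kpc * KPC_M
    g_n = G_SI * m_msun * M_SUN_KG / r**2
    a = mond_acceleration(g_n) if mond else g_n
    return math.sqrt(a * r) / 1e3


def asymptotic_speed_kms(m_msun: float, a0: float = A0) -> float:
    """Deep-MOND flat velocity v = (G M a0)^(1/4), in km/s."""
    return (G_SI * m_msun * M_SUN_KG * a0) ** 0.25 / 1e3


if __name__ == "__main__":
    m_gal = 1.0e11  # hypothetical baryonic mass in solar masses
    for r in (5.0, 20.0, 80.0):
        print(f"r = {r:4.0f} kpc: Newton {rotation_speed_kms(m_gal, r, mond=False):5.0f} km/s,"
              f" MOND {rotation_speed_kms(m_gal, r):5.0f} km/s")
    print(f"asymptotic MOND speed: {asymptotic_speed_kms(m_gal):.0f} km/s")
```

For $10^{11}\,M_\odot$ the asymptotic speed is about 200 km/s. The Newtonian speed for the same mass keeps falling as $r^{-1/2}$.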

The matter in the “bullet cluster” is shown in this composite image (from http://apod.nasa.gov/apod/ap060824.html, credits: NASA/CXC/CfA/ M. Markevitch et al.). The image depicts the collision of two clusters of galaxies. The bluish areas show the distributions of dark matter in the clusters, as obtained from gravitational lensing, and the red areas correspond to the hot X-ray emitting gas. The individual galaxies observed in the optical image have a total mass much smaller than the mass in the gas, and the sum of these masses is far less than the mass of dark matter. The clear separation of dark matter and gas clouds is direct evidence of the existence of dark matter.

One could also consider that galaxies may contain invisible matter of known nature: either baryons in a form that is hard to detect optically, or massive neutrinos. MOND reduces the amount of invisible matter needed to explain the observations.

8.1.6 Age of the Universe: A First Estimate

The age of the Universe is an old question. Does the Universe have a finite age? Or is the Universe eternal and always equal to itself (steady state Universe)?

Certainly, the Universe must be older than the oldest object it contains, so the first question has been: how old is the Earth? In the eleventh century, the Persian astronomer Abu Rayhan al-Biruni had already realized that the Earth should have a finite age. He simply stated, however, that the origin of the Earth was too far back in time to be measured.

The first quantitative estimates finally came in the nineteenth century. From considerations on the formation of the geological layers and on the thermodynamics of the formation and cooling of the Earth, its age was estimated to be of the order of tens of millions of years. These estimates contradicted both some religious beliefs and Darwin's theory of evolution. Rev. James Ussher, an Irish Archbishop, published in 1650 a detailed calculation concluding that, according to the Bible, “God created Heaven and Earth” some six thousand years ago. More precisely, this happened “at the beginning of the night of October 23rd in the year 710 of the Julian period”, which means 4004 B.C. On the other hand, tens or even a few hundred million years seemed too short a time to allow for the slow evolution advocated by Darwin.

Only the discovery of radioactivity at the end of the nineteenth century provided precise clocks for dating rocks and meteorite debris, and thus made reliable estimates of the age of the Earth possible. Surveys in the Hudson Bay in Canada found rocks with ages of over four billion ($4 \times 10^9$) years. Measurements on several meteorites, in particular on the Canyon Diablo meteorite found in Arizona, USA, established dates of the order of $4.5 \times 10^9$ years. Darwin had the time he needed!

The proportion of elements other than hydrogen and helium in a celestial object, called its metallicity, can be used as an indication of its age. After primordial nucleosynthesis (Sect. 8.1.4) the Universe was basically composed of hydrogen and helium. The older (first) stars should therefore have lower metallicity than the younger ones, such as our Sun. Measuring the age of low-metallicity stars thus imposes an important constraint on the age of the Universe. Among the oldest stars with a well-determined age found so far are HE 1523-0901, a red giant around 7500 light-years away from us, and HD 140283, nicknamed the Methuselah star, located around 190 light-years away. The age of HE 1523-0901 was measured to be 13.2 Gyr, using mainly the decay of uranium and thorium. The age of HD 140283 was determined to be (14.5 ± 0.8) Gyr.
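Stellar ages of this kind can be compared with the age of a spatially flat Universe containing only matter and a cosmological constant. Integrating the Friedmann equation while neglecting radiation gives a closed form, $t_0 = \frac{2}{3H_0\sqrt{\Omega_\Lambda}}\,\operatorname{arcsinh}\sqrt{\Omega_\Lambda/\Omega_m}$. The Python sketch below evaluates it. The parameter values $H_0 \approx 67.7$ km s$^{-1}$ Mpc$^{-1}$ and $\Omega_m \approx 0.31$ are assumed, Planck-like inputs, not values quoted in this section.

```python
import math

MPC_KM = 3.0856775814913673e19  # kilometres per megaparsec
GYR_S = 3.15576e16              # seconds per gigayear (Julian years)


def hubble_time_gyr(h0: float) -> float:
    """1/H0 in Gyr, with H0 in km/s/Mpc."""
    return MPC_KM / h0 / GYR_S


def age_flat_lcdm_gyr(h0: float, omega_m: float) -> float:
    """Age of a flat matter + Lambda universe (radiation neglected), 0 < omega_m <= 1."""
    omega_l = 1.0 - omega_m
    if omega_l == 0.0:
        return 2.0 / 3.0 * hubble_time_gyr(h0)  # Einstein-de Sitter limit
    factor = 2.0 / (3.0 * math.sqrt(omega_l)) * math.asinh(math.sqrt(omega_l / omega_m))
    return factor * hubble_time_gyr(h0)


if __name__ == "__main__":
    h0, om = 67.7, 0.31  # assumed, Planck-like values
    print(f"1/H0 = {hubble_time_gyr(h0):.2f} Gyr, t0 = {age_flat_lcdm_gyr(h0, om):.2f} Gyr")
```

At fixed $H_0$, increasing $\Omega_m$ lowers the age, down to $2/(3H_0)$ for a matter-only Universe.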

$\Lambda$CDM model (see Sect. 8.4) the best-fit value, taking into account the present knowledge of such parameters, is

Finally, we stress that a Universe with a finite age and in expansion escapes the nineteenth-century Olbers’ Paradox: “How can the night be dark?” The paradox relies on the argument that in an infinite static Universe with uniform star density, the night should be as bright as the day. Most scientists believed the Universe to be of this kind until the middle of the last century. The light received from a star is inversely proportional to the square of its distance. However, the number of stars in a shell at a distance between $r$ and $r + dr$ is proportional to the square of the distance $r$. It therefore seems that every shell in the Universe should contribute the same amount of light. Apart from some overly crude approximations (such as neglecting the finite lifetime of stars), redshift and the finite size of the Universe solve the paradox.
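The shell argument of Olbers' paradox can be checked numerically. The Python sketch below makes the idealizations of the naive argument explicit: stars of identical luminosity $L$ fill a static Euclidean space with uniform number density $n$, and stars hiding one another are ignored. These are assumptions of the sketch, not of the text. Each shell of thickness $dr$ contributes a flux $nL\,dr$, independent of $r$, so the total grows linearly with the radius out to which stars are counted.

```python
import math


def shell_flux(r: float, dr: float, n: float = 1.0, lum: float = 1.0) -> float:
    """Flux at the observer from stars in a thin shell [r, r + dr]."""
    stars_in_shell = n * 4.0 * math.pi * r**2 * dr
    flux_per_star = lum / (4.0 * math.pi * r**2)  # inverse-square law
    return stars_in_shell * flux_per_star


def total_flux(r_max: float, n_shells: int = 1000) -> float:
    """Sum of shell contributions out to r_max (grows like n * L * r_max)."""
    dr = r_max / n_shells
    return sum(shell_flux((i + 0.5) * dr, dr) for i in range(n_shells))


if __name__ == "__main__":
    for r_max in (10.0, 100.0, 1000.0):
        print(r_max, total_flux(r_max))
```

A finite age, or equivalently a finite horizon, cuts the sum off at a finite radius. Redshift further dims the contribution of distant shells.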

8.2 General Relativity

Special relativity, introduced in Chap. 2, states that the laws of physics cannot distinguish between two inertial frames moving at constant speed with respect to each other. Experience tells us that it is possible to distinguish between an inertial frame and an accelerated frame. Can the picture change if we include gravity?

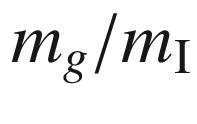

; this, in turn, is proportional to the inertial mass $m_I$, which characterizes the body's resistance to being accelerated by a force. The net result is that the local acceleration $g$ of a body, due to a gravitational field created by a mass $M$ at a distance $r$, is proportional to the ratio $m_G/m_I$:

$$g = \frac{m_G}{m_I}\,\frac{G M}{r^2}\,.$$

Thus, if $m_G$ were proportional to $m_I$, the motion of a body in a gravitational field would be independent of its mass and composition. In fact, Galilei's experiments on inclined planes showed that balls of different compositions and weights roll in the same way. The same universality was found by measuring the period of pendulums with different weights and compositions but identical lengths: first, again, by Galilei, and later with much higher precision (better than 0.1%) by Newton. Nowadays, $m_G/m_I$ is experimentally known to be constant for all bodies, independent of their nature, mass, and composition, to a very high relative precision. We then choose $G$ such that $m_G/m_I = 1$. Space-based experiments, allowing improved sensitivities on $m_G/m_I$, are planned for the next years.

8.2.1 Equivalence Principle

It is difficult to believe that such a precise equality is just a coincidence. This equality has thus been promoted to the level of a principle, named the weak equivalence principle. It led Einstein to formulate the strong equivalence principle, which is a fundamental postulate of General Relativity (GR). Einstein stated that it is not possible to distinguish infinitesimal movements occurring in an inertial frame due to gravity (which are proportional to the gravitational mass) from movements occurring in an accelerated frame due to “fictitious” inertial forces (which are proportional to the inertial mass).

Scientists performing experiments in an accelerating spaceship moving with an upward acceleration g (left) obtain the same results as if they were on a planet with gravitational acceleration g (right). From A. Zimmerman Jones, D. Robbins, “String Theory For Dummies”, Wiley 2009

8.2.2 Light and Time in a Gravitational Field

In the same way, if an observer is inside a free-falling elevator, gravity is locally canceled out by the “fictitious” forces due to the acceleration of the frame. Free-falling frames are equivalent to inertial frames. A horizontal light beam in such a free falling elevator then moves in a straight line for an observer inside the elevator, but it curves down for an observer outside the elevator (Fig. 8.18). Light therefore curves in a gravitational field.

Trajectory of a light beam in an elevator freely falling seen by an observer inside (left) and outside (right): Icons made by Freepik from www.flaticon.com

Rocket on the left: Two clocks onboard an accelerating rocket. Two rockets on the right: Why the clock at the head appears to run faster than the clock at the tail

Consider the two clocks shown above: clock A at the head and clock B at the tail of a rocket moving with acceleration g, separated by a height H. Clock A emits light flashes at regular intervals towards clock B. The first flash travels the distance $L_1$ and the second flash travels the shorter distance $L_2$, because the ship is accelerating and has a higher speed at the time of the second flash. You can see, then, that if the two flashes were emitted from clock A one second apart, they would arrive at clock B with a separation somewhat less than one second, since the second flash does not spend as much time on the way. The same will also happen for all the later flashes. So if you were sitting in the tail, you would conclude that clock A was running faster than clock B. If the rocket is at rest in a gravitational field, the principle of equivalence guarantees that the same thing happens. We have the relation, to first order in $gH/c^2$,

$$\Delta t_B \simeq \Delta t_A\left(1-\frac{gH}{c^2}\right),$$

where $\Delta t_A$ is the interval between two flashes at emission (clock A) and $\Delta t_B$ the interval between their arrivals (clock B): the lower clock runs slower.
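The first-order relation between the two clock rates can be evaluated numerically. The sketch below assumes a uniform field g and a height H between the clocks; the function names and the example values (g = 9.81 m/s², H = 22.5 m) are illustrative choices, not taken from the text.

```python
# Minimal sketch (assumed setup): two clocks separated by a height H in a
# uniform gravitational field g, or equivalently on a rocket with acceleration g.
# To first order in g*H/c^2, flashes emitted dt_A apart by the upper clock A
# arrive at the lower clock B separated by dt_B = dt_A * (1 - g*H/c^2).

C = 299_792_458.0  # speed of light in vacuum, m/s


def fractional_rate_difference(g: float, height: float) -> float:
    """Return g*H/c^2, the first-order fractional rate difference of the clocks."""
    return g * height / C**2


def received_interval(dt_emitted: float, g: float, height: float) -> float:
    """Interval measured at the lower clock B between flashes emitted
    dt_emitted apart by the upper clock A (first-order approximation)."""
    return dt_emitted * (1.0 - fractional_rate_difference(g, height))


if __name__ == "__main__":
    # Illustrative values (assumed, not from the text): g = 9.81 m/s^2, H = 22.5 m.
    shift = fractional_rate_difference(9.81, 22.5)
    print(f"g*H/c^2 = {shift:.3e}")
    # Time gained by the upper clock with respect to the lower one in one day.
    print(f"gain per day = {shift * 86400 * 1e9:.3f} ns")
```

On Earth the effect is tiny (of order $10^{-15}$ for a height of a few tens of meters), which is why it is usually quoted as a fractional shift rather than as an absolute time difference.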

8.2.3 Flat and Curved Spaces

Gravity in GR is no longer a force (whose sources are the masses) acting in a flat spacetime Universe. Gravity is embedded in the geometry of spacetime that is determined by the energy and momentum contents of the Universe.

Classical mechanics considers that we are living in a Euclidean three-dimensional space (flat, i.e., with vanishing curvature), where through each point outside a “straight” line (a geodesic) there is one, and only one, straight line parallel to the first one; the sum of the internal angles of a triangle is $\pi$; the circumference of a circle of radius R is $2\pi R$, and so on. However, it is interesting to consider what would happen if this were not the case.

Let us imagine that we were living on the surface of a sphere, which has a positive curvature: then the sum of the angles of a triangle is greater than $\pi$; the circumference of a circle of radius R is less than $2\pi R$. Alternatively, let us imagine that we were living on a saddle, which has a negative curvature (the surface can curve away from the tangent plane in two different directions): then the sum of the angles of a triangle is less than $\pi$; the circumference of a circle of radius R is greater than $2\pi R$, and so on. The three cases are visualized in Fig. 8.20. The metrics of the sphere and of the saddle are not Euclidean.

2D surfaces with positive, negative, and null curvatures (from http://thesimplephysicist.com, 2014 Bill Halman/tdotwebcreations)
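The three behaviors can be checked with the standard closed forms for surfaces of constant curvature. In this sketch the saddle is modeled, by assumption, as the hyperbolic plane, whose negative curvature is the same everywhere (a real saddle's curvature varies from point to point). R is the radius measured along the surface, a is the curvature radius, and the triangle-angle formula is Girard's theorem for the sphere. The function names are hypothetical.

```python
import math

# Sketch: circles and triangles on 2D surfaces of constant curvature K/a^2.
# Assumption: the "saddle" is represented by the hyperbolic plane (curvature -1/a^2).


def circumference(R: float, a: float, K: int) -> float:
    """Circumference of a circle of radius R (measured on the surface)."""
    if K > 0:   # sphere of radius a (valid for 0 <= R <= pi*a)
        return 2 * math.pi * a * math.sin(R / a)
    if K < 0:   # hyperbolic plane with curvature -1/a^2
        return 2 * math.pi * a * math.sinh(R / a)
    return 2 * math.pi * R  # flat plane


def sphere_angle_sum(area: float, a: float) -> float:
    """Sum of the angles of a geodesic triangle on a sphere of radius a
    (Girard's theorem): pi plus the triangle area divided by a^2."""
    return math.pi + area / a**2


if __name__ == "__main__":
    R, a = 1.0, 2.0
    for K, name in [(1, "sphere"), (0, "plane"), (-1, "saddle")]:
        print(f"{name:6s}: C = {circumference(R, a, K):.4f}, 2*pi*R = {2 * math.pi * R:.4f}")
    # A triangle covering one octant of a unit sphere has three right angles.
    print(sphere_angle_sum(4 * math.pi / 8, 1.0) / math.pi)
```

For radii much smaller than a, all three formulas reduce to $2\pi R$: curvature only shows up over distances comparable to the curvature radius.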

8.2.3.1 2D Space

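In a 2D flat surface (a plane), the square of the distance between two infinitesimally close points is, in Cartesian coordinates, $ds^2 = dx^2 + dy^2$: the metric $g_{ij}=\delta_{ij}$ of the 2D flat surface is constant and the geodesics are straight lines.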

Distances on a sphere of radius a. From A. Tan et al. DOI:10.5772/50508

The maximum distance between two points on the sphere is bounded by $\pi a$: the two points are then the endpoints of half a great circle.

On a sphere of radius a, using the polar angle $\theta$ and the azimuthal angle $\varphi$, the metric is

$$ds^2 = a^2\left(d\theta^2+\sin^2\theta\, d\varphi^2\right),$$

which is no longer constant because of the $\sin^2\theta$ term. It is not possible to cover the entire sphere with one unique plane without somewhat distorting the plane, although it is always possible to define locally at each point one tangent plane. The geodesics are not straight lines; they are indeed arcs of great circles, as can be deduced directly from the metric and its derivatives.

Defining $r=\sin\theta$, the metric of the sphere can be written as

$$ds^2 = a^2\left(\frac{dr^2}{1-Kr^2}+r^2\, d\varphi^2\right)$$

with $K=+1$: the sphere has positive ($K=+1$) curvature at any point of its surface. However, the above expression is valid also for the cases of negative ($K=-1$) and null ($K=0$) curvature. In the case of a flat surface, indeed, the usual expression in polar coordinates is recovered:

$$ds^2 = a^2\left(dr^2+r^2\, d\varphi^2\right).$$

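The distance d between two points on a line of constant $\varphi$, with radial coordinates $0$ and $r$, respectively, is given by:

$$d = a\int_0^{r}\frac{dr'}{\sqrt{1-Kr'^2}} = \begin{cases} a\,\sin^{-1} r & (K=+1)\\ a\,r & (K=0)\\ a\,\sinh^{-1} r & (K=-1)\end{cases}$$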

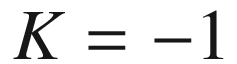
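The radial distance along a line of constant φ can be checked numerically against the closed forms for the three values of K. The sketch below integrates $d = a\int_0^r dr'/\sqrt{1-Kr'^2}$ with the composite Simpson rule. This is an illustrative choice of quadrature, and the function names are hypothetical. For K = +1 it assumes r < 1, since the integrand diverges at r = 1.

```python
import math

# Sketch: radial distance d = a * integral_0^r dr' / sqrt(1 - K r'^2) in the metric
# ds^2 = a^2 [dr^2/(1 - K r^2) + r^2 dphi^2], at constant phi, by Simpson's rule.


def radial_distance(r: float, a: float, K: int, n: int = 1000) -> float:
    """Numerical distance from the origin to coordinate r (requires r < 1 if K = +1)."""
    if n % 2:
        n += 1  # Simpson's rule needs an even number of intervals
    h = r / n

    def f(x: float) -> float:
        return 1.0 / math.sqrt(1.0 - K * x * x)

    total = f(0.0) + f(r)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return a * total * h / 3.0


def radial_distance_exact(r: float, a: float, K: int) -> float:
    """Closed forms: a*asin(r) for K = +1, a*r for K = 0, a*asinh(r) for K = -1."""
    if K > 0:
        return a * math.asin(r)
    if K < 0:
        return a * math.asinh(r)
    return a * r


if __name__ == "__main__":
    for K in (1, 0, -1):
        print(K, radial_distance(0.5, 1.0, K), radial_distance_exact(0.5, 1.0, K))
```

For K = +1 the distance exceeds $a\,r$, so a circle of coordinate radius r, whose circumference is $2\pi a r$, is shorter than $2\pi d$. This is the "less than $2\pi R$" behavior of the sphere.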

8.2.3.2 3D Space

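The extension to a 3D space of constant curvature replaces $d\varphi^2$ with $d\theta^2+\sin^2\theta\, d\varphi^2$ (where now $r$, $\theta$, and $\varphi$ are independent variables), leading to: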

$$ds^2 = a^2\left[\frac{dr^2}{1-Kr^2}+r^2\left(d\theta^2+\sin^2\theta\, d\varphi^2\right)\right].$$

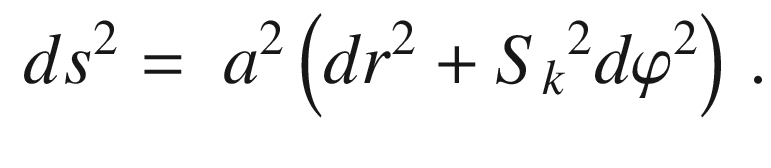

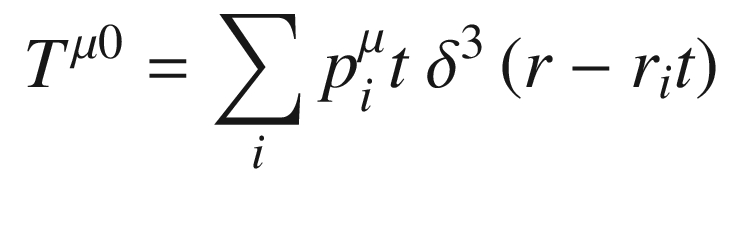

8.2.3.3 4D Spacetime

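In 4D spacetime, time is added and the scale factor a is allowed to depend on time, giving the Friedmann–Lemaitre–Robertson–Walker (FLRW) metric (in units $c=1$):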

$$ds^2 = dt^2-a^2(t)\left[\frac{dr^2}{1-Kr^2}+r^2\left(d\theta^2+\sin^2\theta\, d\varphi^2\right)\right]$$

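Defining the comoving radial coordinate $\chi$ through $d\chi = dr/\sqrt{1-Kr^2}$ (so that $a\chi$ is the radial distance introduced above for the 2D case), the FLRW metric can be written as

$$ds^2 = dt^2-a^2(t)\left[d\chi^2+S_K^2(\chi)\left(d\theta^2+\sin^2\theta\, d\varphi^2\right)\right],$$

with $S_K(\chi)=\sin\chi$, $\chi$, $\sinh\chi$ for $K=+1$, $0$, $-1$, respectively. The geodesics in a 4D spacetime correspond to the extremal (maximum or minimum, depending on the metric definition) world lines joining two events in spacetime, and not to the 3D spatial paths between the two points. The geodesics are determined, as before, just from the metric and its derivatives.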

8.2.4 Einstein’s Equations

Einstein's equations relate the geometry of spacetime to its energy and momentum content (in units $c=1$):

$$G_{\mu\nu}\equiv R_{\mu\nu}-\frac{1}{2}\,g_{\mu\nu}\,R = 8\pi G\, T_{\mu\nu}\,,$$

where $G_{\mu\nu}$ and $R_{\mu\nu}$ are, respectively, the Einstein and the Ricci tensors, which are built from the metric and its derivatives; $R$ is the Ricci scalar ($R=g^{\mu\nu}R_{\mu\nu}$) and $T_{\mu\nu}$ is the energy–momentum tensor. The energy and the momentum of the particles determine the geometry of the Universe, which then determines the trajectories of the particles. Gravity is embedded in the geometry of spacetime. Time runs slower in the presence of gravitational fields.

Einstein’s equations are tensor equations and thus independent of the reference frame (the covariance of the physics laws is automatically ensured). They involve 4D symmetric tensors and represent in fact 10 independent nonlinear partial differential equations whose solutions, the metrics of spacetime, are in general difficult to find. However, in particular and relevant cases, exact or approximate solutions can be found. Examples are the Minkowski metric (empty Universe); the Schwarzschild metric (spacetime outside an uncharged, spherically symmetric, nonrotating massive object; see Sect. 8.2.8); the Kerr metric (spacetime outside an uncharged, rotating massive object, an axially symmetric vacuum solution); the FLRW metric (homogeneous and isotropic Universe; see Sect. 8.2.5).

A term proportional to the metric can be added to Einstein's equations (with $\Lambda$ a constant in space and time, the so-called “cosmological constant”):

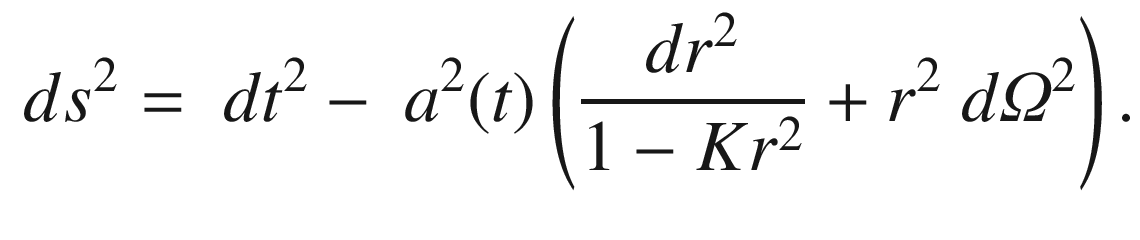

The energy–momentum tensor $T^{\mu\nu}$ in a Universe of free noninteracting particles with four-momenta $p_n^{\mu}$ moving along trajectories $\mathbf{x}_n(t)$ is defined as

$$T^{\mu\nu}(\mathbf{x},t)=\sum_n \frac{p_n^{\mu}\,p_n^{\nu}}{E_n}\,\delta^3\!\left(\mathbf{x}-\mathbf{x}_n(t)\right).$$

The $T^{0\nu}$ terms can be seen as “charges” and the $T^{i\nu}$ terms as “currents”, which then obey a continuity equation, $\partial_\mu T^{\mu\nu}=0$, ensuring energy–momentum conservation. In general relativity, local energy–momentum conservation generalizes the corresponding result in special relativity, with the ordinary derivative replaced by the covariant derivative:

$$\nabla_\mu T^{\mu\nu}=0\,.$$

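For a perfect fluid with energy density $\rho$ and pressure $p$, the energy–momentum tensor in the rest frame of the fluid is

$$T^{\mu\nu}=\mathrm{diag}\left(\rho,\,p,\,p,\,p\right),$$

where the pressure $p$ accounts for the “kinetic energy” of the fluid. In a generic frame, in which the fluid moves with four-velocity $u^\mu$, this becomes $T^{\mu\nu}=(\rho+p)\,u^\mu u^\nu-p\,g^{\mu\nu}$.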
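The perfect-fluid tensor $T^{\mu\nu}=(\rho+p)\,u^\mu u^\nu-p\,g^{\mu\nu}$ (units $c=1$, signature $(+,-,-,-)$ as in the FLRW metric) can be built explicitly in flat spacetime. This makes it possible to check that it is diagonal in the rest frame and that its trace $\rho-3p$ does not depend on the frame. The sketch assumes Minkowski space ($g^{\mu\nu}=\eta^{\mu\nu}$). The radiation-like example $p=\rho/3$ and all function names are illustrative assumptions.

```python
import math
from typing import List, Sequence

# Sketch (units c = 1, signature (+,-,-,-)): perfect-fluid energy-momentum tensor
# T^{mu nu} = (rho + p) u^mu u^nu - p eta^{mu nu} in flat (Minkowski) spacetime.

ETA = [[1.0, 0.0, 0.0, 0.0],
       [0.0, -1.0, 0.0, 0.0],
       [0.0, 0.0, -1.0, 0.0],
       [0.0, 0.0, 0.0, -1.0]]  # eta_{mu nu}; eta^{mu nu} has the same entries


def four_velocity(v: Sequence[float]) -> List[float]:
    """u^mu = gamma * (1, v) for a fluid moving with 3-velocity v (|v| < 1)."""
    gamma = 1.0 / math.sqrt(1.0 - sum(c * c for c in v))
    return [gamma] + [gamma * c for c in v]


def perfect_fluid_tensor(rho: float, p: float, u: Sequence[float]) -> List[List[float]]:
    return [[(rho + p) * u[m] * u[n] - p * ETA[m][n] for n in range(4)]
            for m in range(4)]


def trace(T: List[List[float]]) -> float:
    """T^mu_mu = eta_{mu nu} T^{mu nu}, a Lorentz invariant equal to rho - 3p."""
    return sum(ETA[m][n] * T[m][n] for m in range(4) for n in range(4))


if __name__ == "__main__":
    rho, p = 1.0, 1.0 / 3.0  # assumed example: radiation-like fluid, p = rho/3
    rest = perfect_fluid_tensor(rho, p, four_velocity([0.0, 0.0, 0.0]))
    print([rest[i][i] for i in range(4)])  # diagonal entries in the rest frame
    moving = perfect_fluid_tensor(rho, p, four_velocity([0.6, 0.0, 0.0]))
    print(moving[0][0], trace(rest), trace(moving))
```

In the moving frame the energy density becomes $T^{00}=\gamma^2(\rho+v^2p)$, so the pressure contributes to the energy density measured by a moving observer.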

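In the limit of a static, weak gravitational field generated by a nonrelativistic fluid (pressure $p \ll \rho$), Einstein's equations reproduce the weak gravity field predicted by Newton, which is given by the Poisson equation

$$\nabla^2\Phi = 4\pi G\rho\,.$$

Free particles move along geodesics,

$$\frac{d^2x^\mu}{d\tau^2}+\Gamma^{\mu}_{\alpha\beta}\,\frac{dx^\alpha}{d\tau}\frac{dx^\beta}{d\tau}=0\,,$$

where $\Gamma^{\mu}_{\alpha\beta}$ are the Christoffel symbols given by

$$\Gamma^{\mu}_{\alpha\beta}=\frac{1}{2}\,g^{\mu\nu}\left(\partial_\alpha g_{\nu\beta}+\partial_\beta g_{\nu\alpha}-\partial_\nu g_{\alpha\beta}\right).$$

In this limit $g_{00}\simeq 1+2\Phi$, and then

$$\frac{d^2x^i}{dt^2}\simeq-\frac{\partial\Phi}{\partial x^i}\,.$$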
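The Christoffel symbols follow from the metric and its first derivatives alone, so they can be computed mechanically. The sketch below restricts itself, by assumption, to diagonal metrics, which covers the 2D sphere, the FLRW metric and the weak-field metric discussed here. It evaluates the derivatives by central finite differences, a numerical shortcut chosen for brevity rather than a symbolic calculation. It reproduces the known sphere results $\Gamma^\theta_{\varphi\varphi}=-\sin\theta\cos\theta$ and $\Gamma^\varphi_{\theta\varphi}=\cot\theta$. It also checks that for $g_{00}=1+2\Phi$ one gets $\Gamma^{x}_{00}=\partial\Phi/\partial x$, which is the source of the Newtonian acceleration. The potential $\Phi=0.01\,x$ and the function names are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence

# Sketch: Gamma^k_{ij} = 1/2 g^{kl} (d_i g_{lj} + d_j g_{li} - d_l g_{ij}) for a
# DIAGONAL metric (assumption), with central finite differences for the derivatives.

DiagonalMetric = Callable[[Sequence[float]], List[float]]  # x -> [g_00, g_11, ...]


def christoffel(metric: DiagonalMetric, x: Sequence[float], h: float = 1e-5):
    """Return G with G[k][i][j] = Gamma^k_{ij} at the point x."""
    n = len(x)
    g = metric(x)
    dg = []  # dg[c][a] = partial derivative of g_aa with respect to x^c
    for c in range(n):
        xp, xm = list(x), list(x)
        xp[c] += h
        xm[c] -= h
        gp, gm = metric(xp), metric(xm)
        dg.append([(gp[a] - gm[a]) / (2 * h) for a in range(n)])

    def d(c: int, a: int, b: int) -> float:
        # d_c g_ab; off-diagonal components of a diagonal metric vanish identically
        return dg[c][a] if a == b else 0.0

    # For a diagonal metric, g^{kl} = delta_kl / g_kk.
    return [[[0.5 / g[k] * (d(i, k, j) + d(j, k, i) - d(k, i, j))
              for j in range(n)] for i in range(n)] for k in range(n)]


def sphere_metric(x: Sequence[float], a: float = 2.0) -> List[float]:
    """2D sphere, x = (theta, phi): ds^2 = a^2 (dtheta^2 + sin^2 theta dphi^2)."""
    return [a**2, (a * math.sin(x[0]))**2]


def weak_field_metric(x: Sequence[float]) -> List[float]:
    """x = (t, x, y, z): g_00 = 1 + 2*Phi with an assumed Phi = 0.01 * x."""
    return [1.0 + 2.0 * 0.01 * x[1], -1.0, -1.0, -1.0]


if __name__ == "__main__":
    theta = 0.7
    G = christoffel(sphere_metric, [theta, 0.3])
    print(G[0][1][1], -math.sin(theta) * math.cos(theta))  # Gamma^theta_{phi phi}
    print(G[1][0][1], 1.0 / math.tan(theta))               # Gamma^phi_{theta phi}
    W = christoffel(weak_field_metric, [0.0, 1.0, 0.0, 0.0])
    print(W[1][0][0])  # Gamma^x_{tt} = dPhi/dx, so d^2x/dt^2 = -dPhi/dx
```

A symbolic package would give exact expressions. The finite-difference version is kept here only because it is short and dependency-free.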

8.2.5 The Friedmann–Lemaitre–Robertson–Walker Model (Friedmann Equations)

The present standard model of cosmology assumes the so-called cosmological principle, which in turn assumes a homogeneous and isotropic Universe at large scales. Homogeneity means that, in Einstein’s words, “all places in the Universe are alike”, and isotropy means that all directions are equivalent.

The FLRW metric discussed before (Sect. 8.2.3) embodies these symmetries leaving two independent functions, a(t) and K(t), which represent, respectively, the evolution of the scale and of the curvature of the Universe. The Russian physicist Alexander Friedmann in 1922, and independently the Belgian Georges Lemaitre in 1927, solved Einstein’s equations for such a metric leading to the famous Friedmann equations, which are still the starting point for the standard cosmological model, also known as the Friedmann–Lemaitre–Robertson–Walker (FLRW) model.

Introducing the Hubble parameter ($H\equiv\dot a/a$), the Friedmann equations can be written as