HUNTERS AND FARMERS

Although Cro-Magnon stone technology was a significant advance over the existing apparatus of the Neanderthals, life in the Old Stone Age was still based on hunting. Archaeologists divide the Stone Age into three phases, based on the style of stone tools used. It is not a hard and fast classification and is fuzzy at some of the boundaries, but it has endured as a useful way of referring to the main features of an archaeological site where the only evidence to go on is the artefacts that are found there. A trained archaeologist can tell at a glance whether he or she is dealing with an Old, Middle or New Stone Age site by the features of the stone tools and other artefacts found there and without needing to find any human bones to help.

The Old Stone Age, or Palaeolithic (from the Greek for old and stone), covers the time from the first appearance of stone tools about two million years ago up until the end of the last Ice Age about fifteen thousand years ago. There are huge differences between the crude hand axes that come from the beginning of this period and the delicately worked flint tools that are found at the end. To differentiate the various phases of this development, the Palaeolithic is divided into Lower, Middle and Upper phases. The Lower Palaeolithic roughly coincides with the time of Homo erectus, the Middle Palaeolithic corresponds approximately with the time of the Neanderthals, and the most recent, the Upper Palaeolithic, refers to the period beginning in Africa about a hundred thousand years ago when Homo sapiens finally arrived on the scene. In Europe, the Upper Palaeolithic doesn’t begin until the first Homo sapiens, the Cro-Magnons, appear with their advanced stone technology between forty and fifty thousand years ago.

After the end of the last Ice Age, the Middle Stone Age, or Mesolithic, takes us up to the beginnings of agriculture. The boundary between the Upper Palaeolithic and the Mesolithic is very indistinct. There is an increase in the sophistication of worked stone tools and characteristic styles of implements made from bone and antler. Many more sites are found around coasts. However, there is no entirely new stone technology on the scale of that which divides the Middle and Upper Palaeolithic. At the other end of the Mesolithic, though, the transition is dramatic. The New Stone Age or Neolithic is the age of farming, and it is associated with a whole new set of tools – sickles for cutting stands of wheat; stones for grinding the grain – and, almost always, the first evidence of pottery.

The Cro-Magnons of the European Upper Palaeolithic lived in small nomadic bands following the animals they hunted, shifting camp with the seasons. Although vanishingly few people around the world still make a living like this, for most of us (certainly for most of you reading this book) the fundamental basis of life has changed dramatically. This is due to the one technical revolution which eclipses any refinements to the shape and form of stone tools in its importance in creating the modern world. That revolution is agriculture. Within the space of only ten thousand years, human life has changed beyond all recognition, and all of these changes can be traced to our gaining control of food production.

By ten thousand years ago, our hunter–gatherer ancestors had reached all but the most inaccessible parts of the world. North and South America had been reached from Siberia. Australia and New Guinea were settled after significant sea crossings, and all habitable parts of continental Africa and Europe were occupied. Only the Polynesian islands, Madagascar, Iceland and Greenland had yet to experience the hand of humans. Bands of ten to fifty people moved about the landscape, surviving on what meat could be hunted or scavenged and gathering the wild harvest of seasonal fruits, nuts and roots. Then, independently and at different times in at least nine different parts of the world, the domestication of wild crops and animals began in earnest. It started first in the Near East about ten thousand years ago and, within a few thousand years, new centres of agriculture were appearing both here and in what are now India, China, west Africa and Ethiopia, New Guinea, Central America and the eastern United States. This was not a sudden process but, once it had begun, it had an inexorable and irreversible influence on the trajectory of our species.

There has never been a completely satisfactory explanation of why agriculture began when it did and how it sprang up in different parts of the world during a period when there was no realistic possibility of contact between one group and another. This was a time when the climate was improving, though fitfully, after the extremes of the last Ice Age. It was becoming warmer and wetter. The movement of game animals became less predictable as rainfall patterns changed. Even so, none of these things in themselves explain the radical departure from life as a hunter to that of a farmer. Why hadn’t it happened before? There were several warm interludes between Ice Ages during the course of human evolution where the climate would have favoured such experimentation. What must have been lacking was the mind to experiment.

Whatever the reasons behind the invention of agriculture, there is no doubting its effect. First of all, numbers of humans began to increase. Very roughly, and with wide variations depending on the terrain, one hunter–gatherer needs the resources of 10 square kilometres of land to survive. If that area is used to grow crops or rear animals, its productivity can be increased by as much as fifty times. Gone is the necessity for seasonal movements to follow the game or wild food. Very gradually camps became permanent, then in time villages and towns grew up. Soon food production needed less human effort than was available to keep it going. It was no longer essential for everybody to work at it full-time; so some people could turn to other activities, becoming craftsmen, artists, mystics and various kinds of specialists.

But it was not all good news. The close proximity of domesticated animals and dense populations of humans in villages and towns led to the appearance of epidemics. Measles, tuberculosis and smallpox crossed the species barrier from cattle to humans; influenza, whooping cough and malaria spread from pigs, ducks and chickens. The same process continues today with AIDS and BSE/CJD. Resistance to these diseases slowly improved in exposed populations, and here they became gradually less serious. But when the pathogens encountered a population which had not previously been exposed to them, they exploded with all their initial fury. This pattern would be repeated throughout human history. The European settlement of North America which followed on from the voyage of Christopher Columbus in 1492 was made easier by the accidental (or sometimes deliberate) infection of native Americans by epidemic diseases, like smallpox, which killed millions.

The first nucleus of domestication that we know about appeared about eleven thousand years ago in the Near East, in what is known as the Fertile Crescent. This region takes in parts of modern-day Syria, Iraq, Turkey and Iran, and is drained by the headwaters of the Tigris and Euphrates rivers. Here or hereabouts the hunters first began to gather in and eat the seeds of wild grasses. They still depended on the migrating herds of antelope that criss-crossed the grasslands on their seasonal migrations, but the seeds were plentiful and easy to collect. This was not agriculture, just another aspect of gathering in the wild harvest. Inevitably some seeds were spilled, then germinated and grew up the following year. It was a small step from noticing this accidental reproduction to deliberate planting near to the camps, which had by then already become more or less permanent in that part of the world thanks to the local abundance of wild food. Over time, the plants which produced the heavier grains were deliberately selected, and the natural genetic variants that produced them increased in the gene pool. True domestication had begun.

The same process was repeated in other parts of the world at later times and with different crops: rice in China, sugar-cane and taro in New Guinea, teosinte (the wild ancestor of maize) in Central America, squash and sunflower in the eastern United States, beans in India, millet in Ethiopia and sorghum in west Africa. Not only wild plants, but wild animals too were recruited into a life of domestication. Sheep and goats in the Near East along with cattle, later separately domesticated in India and Africa; pigs in China, horses and yaks in central Asia, and llamas in the Andes of South America were all tamed into a life of service. Even though most species resisted the process – for example, no deer have, even now, been truly domesticated – the enslavement of wild animals and plants for food production was the catalyst that enabled Homo sapiens to overrun and dominate the earth.

But how was this accomplished? Was there a replacement of the hunter–gatherers by the farmers, just as the Neanderthals had been pushed aside by the technologically advanced Cro-Magnons? Or was it instead the idea of agriculture, rather than the farmers themselves, which spread from the Near East into Europe? This seemed like another case of rival theories that could be solved by genetics – so we set out to do just that.

By the summer of 1994, by which time I had secured the three-year research grant I needed to carry on, I had collected together several hundred DNA sequences from all over Europe, in addition to the samples we had acquired on our Welsh trip two years previously. Most of them had been collected by the research team, or through friends, as the opportunity arose. One friend of mine was engaged to a girl from the Basque country in Spain, so he surprised his future in-laws by arriving with a box of lancets and setting about pricking the fingers of friends and family alike. A German medical student who was spending the summer in my lab on another project went para-gliding in Bavaria and tucked the sampling kit into his rucksack. Other DNA samples came from like-minded colleagues in Germany and Denmark who sent small packages containing hair stuck to bits of sellotape. Hair roots are a good source of DNA, but they are fiddly to work with and a lot of people, especially blondes, have hair that breaks before the root comes out. And pulling out hair hurts.

Another year on, and by the early summer of 1995 a few papers were beginning to appear in the scientific literature on mitochondrial DNA, from places as far apart as Spain, Switzerland and Saudi Arabia. It is always a precondition of publication in scientific journals that you deposit the raw data, in this case the mitochondrial sequences, in a freely accessible database; so with the help of these reports we were able to build up our numbers of samples further. The papers themselves were not encouraging. Their statistical treatment of the data was largely limited by the computer programs available at the time to comparisons of one population average against another, and drawing those wretched population trees. Given this treatment, the populations looked very much like one another, and the authors inevitably concluded with pessimistic forecasts about the value of doing mitochondrial DNA work in Europe at all. Compared to the genetic dramas being revealed in Africa, where there were much bigger differences between the DNA sequences from different regions, Europe was starting to get a reputation for being dull and uninteresting. I didn’t think that at all. There was masses of variation. We rarely found two sequences the same. What did it matter if Africa was ‘more exciting’? We wanted to know about Europe, and I was sure we could.

When we had gathered all the European data together, we started by trying to fit the sequences into a scheme which would show their evolutionary relationship to one another. This had worked very well in Polynesia, where we saw the two very distinct clusters and went on to discover their different geographical origins. We soon found out that it was going to be much more difficult than that in Europe. When we plugged the data into a computer program that was designed to draw evolutionary trees from molecular sequences, the results were a nightmare. After thinking for a very long time, the computer produced thousands of apparently equally viable alternatives. It couldn’t decide on the true tree. It looked hopeless. This was a very low point. Without a proper evolutionary scheme that connected the European sequences, we were going to be forced to publish our results, the results of three years’ hard work and a lot of money, with only bland and, to me, pretty meaningless population comparisons that might conclude, say, that the Dutch were genetically more like the Germans than they were like the Spanish. Wow.

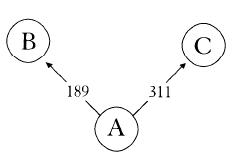

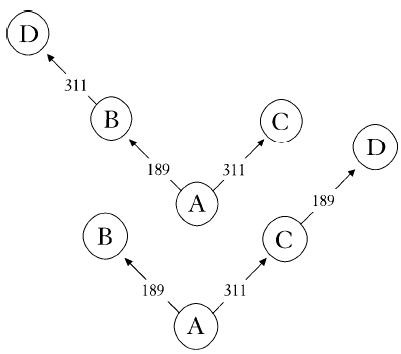

Before going down that miserable route – and we had to publish something soon to have any hope of securing yet more funding – we went back to the raw data. Instead of feeding it into the computer, we started drawing diagrams on bits of paper. Even then we couldn’t make any sense of the results. For instance, we would have four obviously related sequences but couldn’t connect them up in an unambiguous evolutionary scheme. Figure 4a shows an example. Sequence A was our reference sequence, sequence B had one mutation at position 189 and sequence C had one mutation at position 311. That’s easy enough. Sequence A came first, then a mutation at 189 led to sequence B. Similarly, a mutation at 311 turned sequence A into sequence C. No real problem there. No ambiguity. But what to do with a sequence like D, with mutations at 189 and 311? D could have come from B with a mutation at 311, or from C with a mutation at 189 (see Figure 4b). Either way it was obvious that the mutations, on which everything depended, were happening more than once. They were recurring at the same position. No wonder the computer was getting confused. Unable to resolve the ambiguity, it would draw out both trees. Another ambiguity somewhere else would force the program to draw out four trees. Another one and it had to produce eight trees, and so on. It was easy to see that it wouldn’t take many recurrent mutations in such a large set of data for the computer to produce hundreds or even thousands of alternative trees. How were we going to get over this? It looked as if we were really stuck. For the next week I would think I’d solved it, get out a piece of paper and start drawing, then realize whatever idea I’d had wasn’t going to work. Finally, I was sitting down in the coffee room one day doodling on napkins when the solution dawned on me. Don’t even try to come out with the perfect tree. Leave the ambiguities in there. Instead of trying to decide between them, just draw it as a square (Figure 4c). Freely admitting that I didn’t know which route led to D, I could leave it at that. Once I had unhooked myself from this dilemma, the rest was easy. I could relax. I was no longer seeking the perfect tree from thousands of alternatives. There was just one diagram, not a tree but a network, which certainly included some ambiguities but whose overall shape and structure was full of information.
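For readers who like to see the logic spelled out, the square of Figure 4c can be reproduced in a few lines of Python. This is only a minimal sketch, not the software used at the time: the function names `build_network` and `count_alternative_trees` are invented for illustration, and the only data are the four sequences A to D described above. Each sequence is stored as the set of positions at which it differs from the reference, and any two sequences that differ at exactly one position are joined by a link.

```python
from itertools import combinations

# Each sequence is recorded as the set of positions at which it differs
# from the reference sequence A (labels and positions as in Figure 4a).
sequences = {
    "A": frozenset(),
    "B": frozenset({189}),
    "C": frozenset({311}),
    "D": frozenset({189, 311}),
}


def build_network(seqs):
    """Link every pair of sequences that differ at exactly one position.

    Hypothetical helper: returns a list of (name1, name2, position) links.
    """
    links = []
    for (name1, muts1), (name2, muts2) in combinations(sorted(seqs.items()), 2):
        difference = muts1 ^ muts2  # positions present in one but not both
        if len(difference) == 1:
            links.append((name1, name2, next(iter(difference))))
    return links


def count_alternative_trees(ambiguities):
    """Each unresolved two-way ambiguity doubles the number of candidate trees."""
    return 2 ** ambiguities


network = build_network(sequences)
for name1, name2, position in network:
    print(f"{name1} -- {name2} (mutation at {position})")

print("Trees a program must consider with 10 ambiguities:",
      count_alternative_trees(10))
```

The four links that come out (A to B, A to C, B to D and C to D) are the four sides of the square. Nothing in the sketch tries to choose between the two routes to D, which is the whole point of drawing a network instead of a tree.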

Figure 4a

Figure 4b

Unknown to our team in Oxford, a German mathematician, Hans-Jürgen Bandelt, had been working on the theoretical treatment for just such a scenario. He was looking for the best way of incorporating DNA sequences with the sort of parallel mutations we were finding into an evolutionary diagram. He contacted us because he needed some real data to chew over, and we at once realized that we were both thinking along the same lines and solving the problem in the same way, drawing networks and not trees. The big difference was that Hans-Jürgen was able to apply a proper mathematical rigour to the process of constructing the networks, an advantage which was crucial to their acceptance as a respectable alternative to the traditional trees.

Figure 4c

With this important obstacle overcome, we could now concentrate on the picture that was slowly emerging from the European sequences. Whereas in Polynesia we saw two clearly differentiated clusters, in Europe the networks were sorting themselves out into several related clusters, groups of mitochondrial sequences that looked as though they belonged together. These were not so obviously distinct or so far apart as their Polynesian equivalents, in the sense that each cluster had fewer mutations separating it from the others. We had to look hard to make out the boundaries, and Martin Richards and I spent many hours trying to decide how they best fitted together. Were there five or six or seven clusters? It was hard to decide. At first we settled on six. We found out later that we had missed a clue which would have divided the biggest of the six clusters into two smaller ones to give us the seven clusters that we now know form the framework for the whole of Europe.

What mattered to us at the time was not so much precisely how many clusters there were, but that there were clusters at all. This was not the homogeneous and unstructured picture presented by the scientific articles which had been published by the summer of 1995, leading their authors to despair that anything useful could be found out about Europe from mitochondrial DNA. The clusters might have been hard to see, in fact impossible to distinguish without the clarifying summary of the network system, but there was no mistaking their presence. Now that we had our seven defined clusters we knew what we were dealing with, and could start looking at where they were found, and how old they were. Because we had a figure for the mutation rate of the mitochondrial control region we could combine this with the number of mutations we saw in each of the seven clusters to give us an idea of how long it had taken each cluster to evolve to its current stage of complexity. This had worked beautifully in Polynesia, where the two clusters we found had accumulated relatively few mutations within them for the simple reason that humans had only been in Polynesia for three to four thousand years at most. When we worked out the genetic dates for the two Polynesian clusters in the different island groups by factoring in the mutation rate, they corresponded very well to the settlement dates derived from the archaeology. The earliest islands to be settled, Samoa and Tonga in western Polynesia, had the most accumulated mutations within the clusters and a calculated genetic age of three thousand years, very similar to the archaeological age. Further east, the Cook Islands had fewer accumulated mutations and a younger date. Aotearoa (New Zealand), the last Polynesian island to be settled, had very few mutations within the clusters and the youngest date of all.
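The arithmetic behind such a genetic date can be sketched very simply. The version below is an assumption-laden illustration and not necessarily the calculation actually performed: it takes the average number of mutations separating the sampled sequences from the founding sequence of their cluster and multiplies it by the time taken for one mutation to arise. No mutation rate is quoted in this chapter, so `ASSUMED_YEARS_PER_MUTATION` is a placeholder, and both lists of mutation counts are invented purely to show the calculation.

```python
def cluster_age(mutation_counts, years_per_mutation):
    """Estimate the age of a cluster from its accumulated mutations.

    mutation_counts: for each sampled sequence, the number of mutations
    separating it from the cluster's founding sequence.
    years_per_mutation: average time for one mutation to arise in a lineage.
    """
    if not mutation_counts:
        raise ValueError("need at least one sequence")
    # Average mutations per sequence, multiplied by the time per mutation.
    return sum(mutation_counts) * years_per_mutation / len(mutation_counts)


# Placeholder value, NOT taken from the text: swap in a measured rate.
ASSUMED_YEARS_PER_MUTATION = 20_000

# Invented counts for illustration only; these are not real samples.
recently_settled = [0, 0, 0, 1, 0, 0, 1, 0, 0, 0]
long_established = [1, 2, 0, 1, 3, 1, 2, 0, 1, 1]

print(cluster_age(recently_settled, ASSUMED_YEARS_PER_MUTATION))
print(cluster_age(long_established, ASSUMED_YEARS_PER_MUTATION))
```

A cluster whose members have barely diverged from the founder comes out young, and one whose members have accumulated more mutations comes out older, which is the pattern described above for the Polynesian island groups.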

When we applied exactly the same procedure to the clusters in Europe we got a surprise. We had been expecting relatively young dates, though not as young as in Polynesia, because of the overwhelming influence of the agricultural migrations from the Near East in the last ten thousand years that were so prominent a feature in the textbooks. But six out of the seven clusters had genetic ages much older than ten thousand years. According to the version of Europe’s genetic history that we had all been brought up on, a population explosion in the Near East due to agriculture was followed by the slow but unstoppable advance of these same people into Europe, overwhelming the sparse population of hunter–gatherers. Surely, if this were true, the genetic dates for the mitochondrial clusters, or most of them at least, would have to be ten thousand years or less. But only one of the seven clusters fitted this description. The other six were much older. We rechecked our sequences. Had we scored too many mutations? No. We rechecked our calculations. They were fine. This was certainly a puzzle; but still we didn’t question the established dogma – until we looked at the Basques.

For reasons discussed in an earlier chapter, the Basques have long been considered the last survivors of the original hunter–gatherer population of Europe. Speaking a fundamentally different language and living in a part of Europe that was the last to embrace agriculture, the Basques have all the hallmarks of a unique population and they are proud of their distinctiveness. If the rest of Europe traced their ancestry back to the Near Eastern farmers, then surely the Basques, the last survivors of the age of the hunter–gatherers, should have a very different spectrum of mitochondrial sequences. We could expect to find clusters which we saw nowhere else; and we would expect not to find clusters that are common elsewhere. But when we pulled out the sequences from our Basque friends, they were anything but peculiar. They were just like all the other Europeans – with one noticeable exception: while they had representatives of all six of the old clusters, they had none at all of the seventh cluster with the much younger date. We got hold of some more Basque samples. The answer was the same. Rather than having very unusual sequences, the Basques were as European as any other Europeans. This could not be fitted into the scenario in which hunters were swept aside by an incoming tide of Neolithic farmers. If the Basques were the descendants of the original Palaeolithic hunter–gatherers, then so were most of the rest of us.

But what about the cluster that was absent from the Basques – the cluster that was distinguished from the rest by having a much younger date compatible with the Neolithic? When we plotted the places where we found this cluster on a map of Europe, we found a remarkable pattern. The six old clusters were to be found all over the continent, though some were commoner in one place than in others. The young cluster, on the other hand, had a very distinctive distribution. It split into two branches, each with a slightly different set of mutations. One branch headed up from the Balkans across the Hungarian plain and along the river valleys of central Europe to the Baltic Sea. The other was confined to the Mediterranean coast as far as Spain, then could be traced around the coast of Portugal and up the Atlantic coast to western Britain. These two genetic routes were exactly the same as had been followed by the very first farmers, according to the archaeology. Early farming sites in Europe are instantly recognizable by the type of pottery they contain, just as Lapita ceramics identify the early Polynesian sites in the Pacific. The push through central Europe from the Balkans, which began about seven and a half thousand years ago, is recorded by the presence at these early sites of a distinctive decorative style called Linear pottery, in which the vessels are incised with abstract geometric designs cut into the clay. The Linear pottery sites map out a slice of central Europe where, even today, one branch of the young cluster is still concentrated. In the central and western Mediterranean, early farming sites are identified by another style of pottery, called Impressed ware because the clay is marked with the impressions of objects, often shells, which have been pressed into the clay before firing. Once again, the concordant distribution of Impressed ware sites and the other branch of the young cluster stood out. This didn’t look like a coincidence. The two branches of the young mitochondrial cluster seemed to be tracing the footsteps of the very first farmers as they made their way into Europe.

There was one further piece of evidence we needed before we could be confident enough to announce our radical revision of European prehistory to the world. If the young cluster really were the faint echo of the early farmers, then it should be much commoner in the Near East than it is in Europe. At that time, the only sequences we had available from this region were from the Bedouin of Saudi Arabia. While only about 15–20 per cent of Europeans belonged to the young cluster – depending on which population was being studied – fully half of the Bedouin were in it.

We now had the evidence that most modern Europeans traced their ancestry back, far beyond the Neolithic, to the hunter–gatherers of the Palaeolithic, including the first Cro-Magnons that had replaced the Neanderthals. Certainly there had been new arrivals from the Middle East in the Neolithic; the correspondence between the geographical pattern of the young cluster and the archaeologically defined routes followed by the early farmers was good evidence of that. But it was not an overwhelming replacement. The young cluster makes up only 20 per cent of modern Europeans at the very most. We were ready to go public.